You're deep in the evaluation phase, sifting through countless AI solutions and trying to discern which ones truly deliver on their promises. The market is flooded with tools boasting "AI-powered" everything, yet nearly half of all organizations report significant challenges with AI adoption as of 2025, often citing data accuracy or bias as major hurdles. You want to make a smart investment, not just chase the latest buzzword. The core challenge isn't just whether AI can perform a task, but how well it integrates into human workflows and, crucially, how willingly your customers and employees adopt it.

This isn't about theoretical AI anymore. It's about practical AI adoption, designed with your end user, your customer, firmly at the center.

The Imperative of Customer-Centric AI: Why User Trust is Non-Negotiable

The global AI market is projected to skyrocket to $254.5 billion by 2025, with continued growth through 2031. This isn't a trend; it's the future of business. But simply deploying AI isn't enough. You could implement the most advanced AI system available, but if your customers don't trust it, don't understand it, or find it difficult to use, it's a wasted investment.

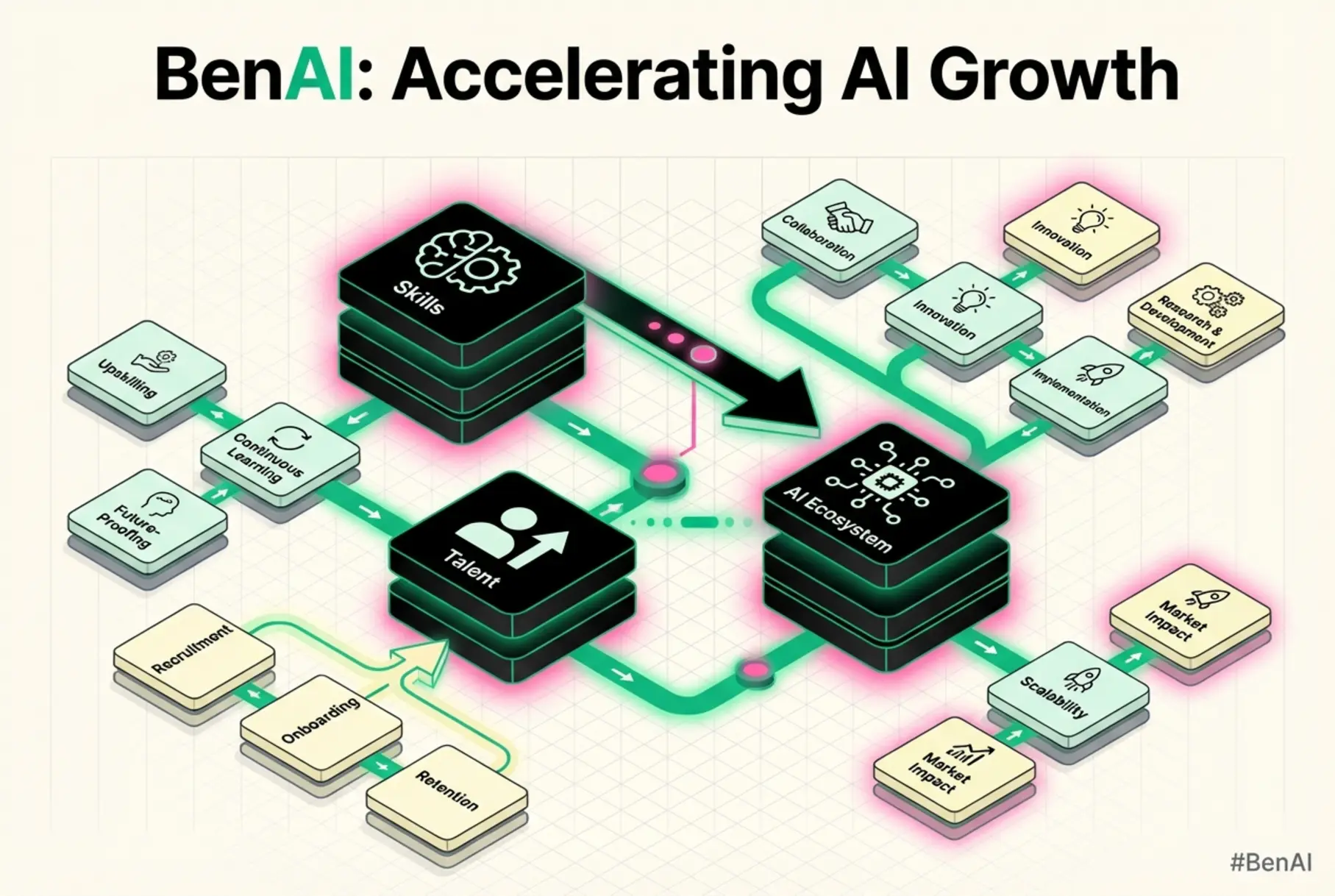

Customer-centric AI isn't a feature; it's a philosophy that ensures every AI solution is designed to genuinely solve user problems, enhance the user experience, and build lasting trust. Why? Because 14% of consumers explicitly don't trust businesses that use AI, and another 21% remain neutral. This trust deficit directly impacts adoption and, ultimately, your bottom line. Our goal at BenAI is to help you bridge that gap, transforming your business into an "AI-first" entity that prioritizes practical adoption through custom implementations, training, and consulting.

Decoding User Intent: What Your Customers Really Need from AI

When evaluating an AI solution, your customers (and employees) aren't just looking at features. They're asking fundamental questions about how this technology will impact their daily lives and tasks.

Evaluation Criteria: Beyond the Specs

Customers evaluate AI solutions based on a holistic set of criteria:

- Ease of Use (UX): Is it intuitive? Does it reduce friction or add complexity?

- Reliability & Accuracy: Can they trust the output? Is it consistent? With up to 38.6% of facts used by some AI systems found to be biased, this is a critical concern, directly impacting trust.

- Ethical Alignment: Does it respect their privacy? Is it fair? Does it operate transparently? For example, 66% of Americans would avoid jobs where AI supports hiring decisions due to bias fears.

- Perceived Value: Does it genuinely solve a problem or make their life easier?

- Integration: Does it fit seamlessly into their existing workflows and other tools?

These factors aren't abstract; they are the bedrock of actual adoption.

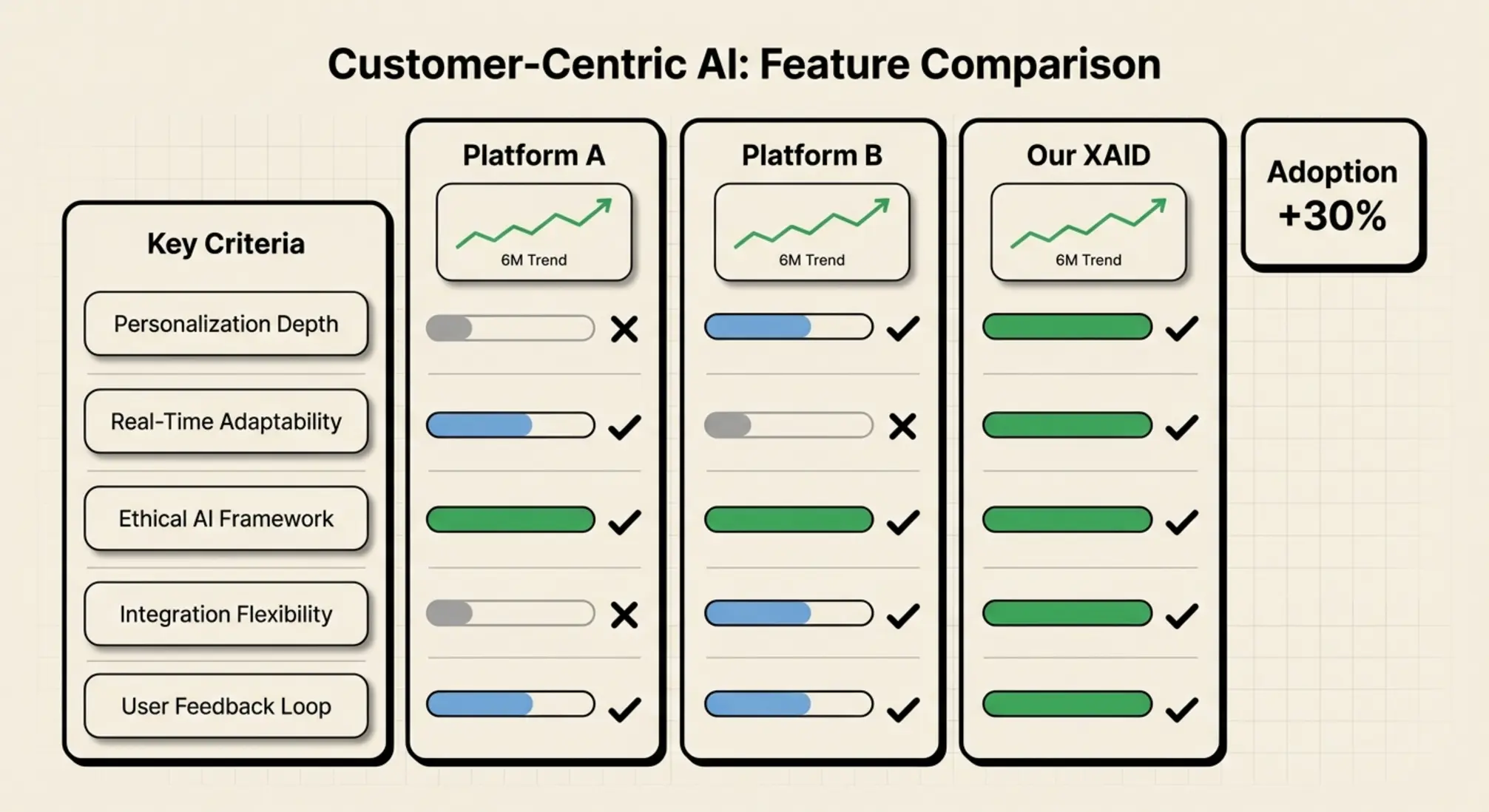

Comparison Needs: Making Sense of the AI Landscape

Your decision-makers are constantly comparing solutions, looking for clear differentiation. They're asking:

- Customization vs. Off-the-Shelf: Do we need a bespoke solution tailored to our unique needs, or can a generic tool suffice? Competitors like Qodo.ai offer enterprise-grade code review, highly customized for development, while others might focus on more generalized LLM comparisons.

- Feature Sets: Does it offer local-first LLM execution for privacy (like Pieces.app) or robust version control and automated test generation for critical codebases (Qodo)?

- Ethical Footprint: How do different platforms handle data privacy and bias mitigation? Is there a human-in-the-loop mechanism, as emphasized by Qodo?

- ROI & Cost-Effectiveness: Is the investment justified by tangible productivity gains or improved customer satisfaction? Developers using GitHub Copilot, for instance, report up to 75% higher satisfaction and 55% faster coding, both clear indicators of ROI.

- Future-proofing: Can the solution evolve with emerging AI trends?

Customers need clear, practical comparisons that highlight not just what a tool does, but how it performs in real-world scenarios, especially concerning customer interaction.

Decision Factors: From Evaluation to Implementation

The ultimate decision hinges on several key factors:

- Trust: Does the vendor demonstrate expertise not just in AI, but in responsible AI deployment? This includes a commitment to ethical design and transparent operations.

- Solved Pain Points: Does the solution directly address an existing business problem, whether it's automating repetitive tasks, scaling operations without increasing headcount, or enhancing customer satisfaction?

- Implementation Support: Is there clear guidance and support for integration into existing systems? As highlighted by n8n, seamless integration with hundreds of services is crucial for building reliable automations.

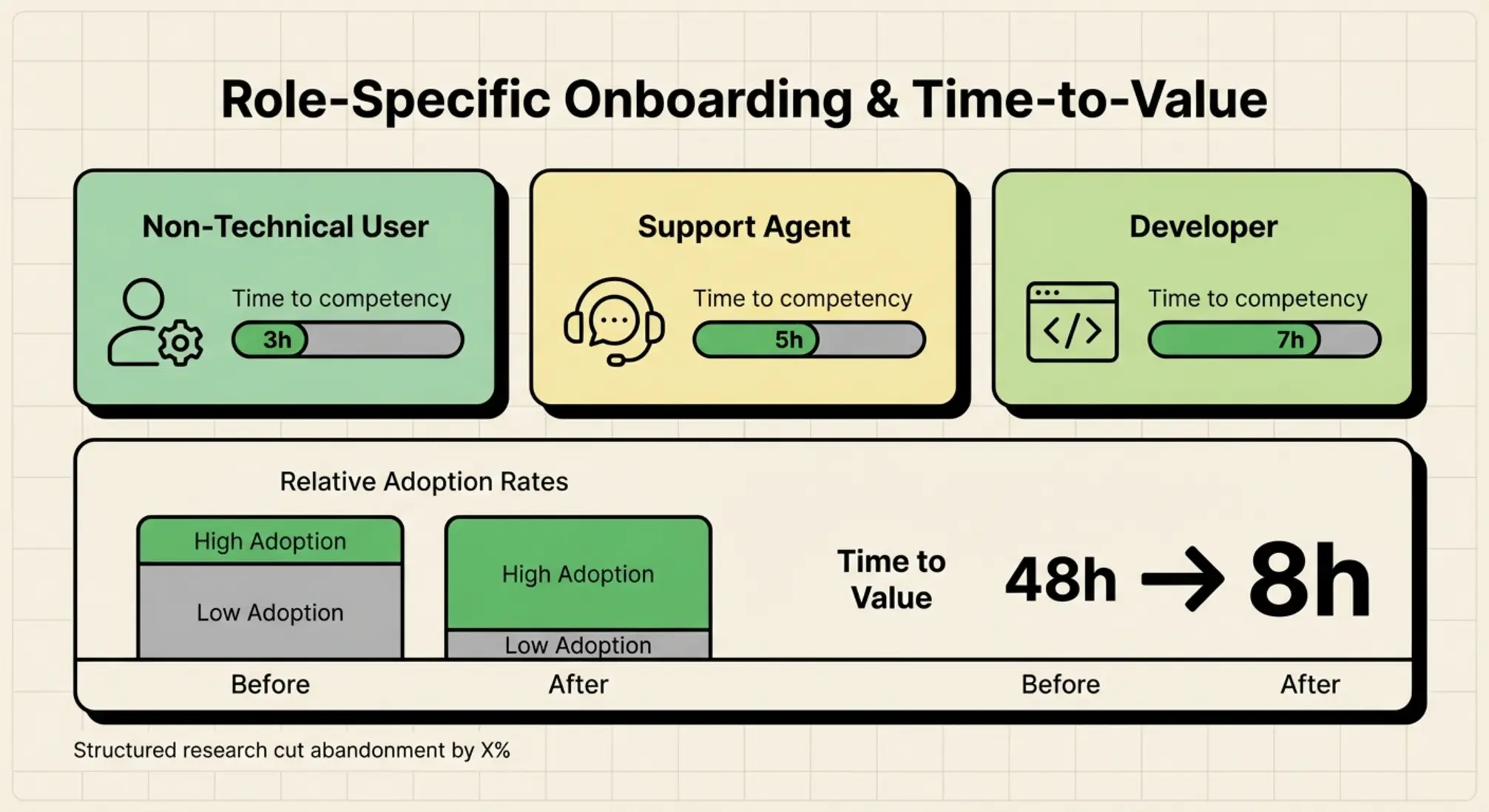

- Training & Adoption Roadmaps: How will we ensure our teams and customers effectively use this new technology? This is where comprehensive AI training from BenAI becomes indispensable.

Your decision path needs to be clear, validating concerns and providing actionable steps, just like our proven approach to strategic AI integration.

The UX of Trust: Principles of Responsible AI Experience Design (XAID)

Building trust isn't a checkbox; it's an ongoing commitment embedded in your AI's design. This is where Responsible AI Experience Design (XAID) becomes paramount.

Human-in-Control: Empowering Users, Not Replacing Them

The "30% rule" in AI suggests that AI can take on roughly 30% of the work, mainly repetitive tasks, freeing humans for the 70% that requires creativity, ethical judgment, and strategic thinking. Good XAID ensures that AI augments human capabilities rather than replacing them without oversight. Practical design patterns include:

- Clear Handoffs: When AI reaches its limits, it should intelligently hand off to a human.

- User Veto Power: Users must have the ability to override or correct AI decisions.

- Adjustable Autonomy: Allow users to set the level of AI intervention.
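
The three patterns above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the `Autonomy` levels, the `route` and `apply_veto` functions, and the 0.6 handoff threshold are all hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Autonomy(Enum):
    """User-selected level of AI intervention (hypothetical levels)."""
    SUGGEST_ONLY = 1  # AI drafts, a human always decides
    CONFIRM = 2       # AI acts only after the user approves
    AUTO = 3          # AI acts alone when it is confident enough


@dataclass
class Suggestion:
    text: str
    confidence: float  # 0.0 to 1.0, as reported by the model


def route(suggestion: Suggestion, autonomy: Autonomy,
          handoff_below: float = 0.6) -> str:
    """Decide who acts on a suggestion."""
    # Clear handoff: low confidence always goes to a person,
    # regardless of the autonomy setting.
    if suggestion.confidence < handoff_below:
        return "handoff_to_human"
    # Adjustable autonomy: the user's setting decides the rest.
    if autonomy is Autonomy.AUTO:
        return "ai_acts"
    if autonomy is Autonomy.CONFIRM:
        return "ai_acts_after_approval"
    return "human_decides"


def apply_veto(suggestion: Suggestion, user_override: Optional[str]) -> str:
    """User veto power: an explicit override always wins over the AI."""
    return user_override if user_override is not None else suggestion.text
```

The key design choice is ordering: the confidence check runs before the autonomy setting, so even a user who has chosen full automation still gets a human handoff when the AI is unsure.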

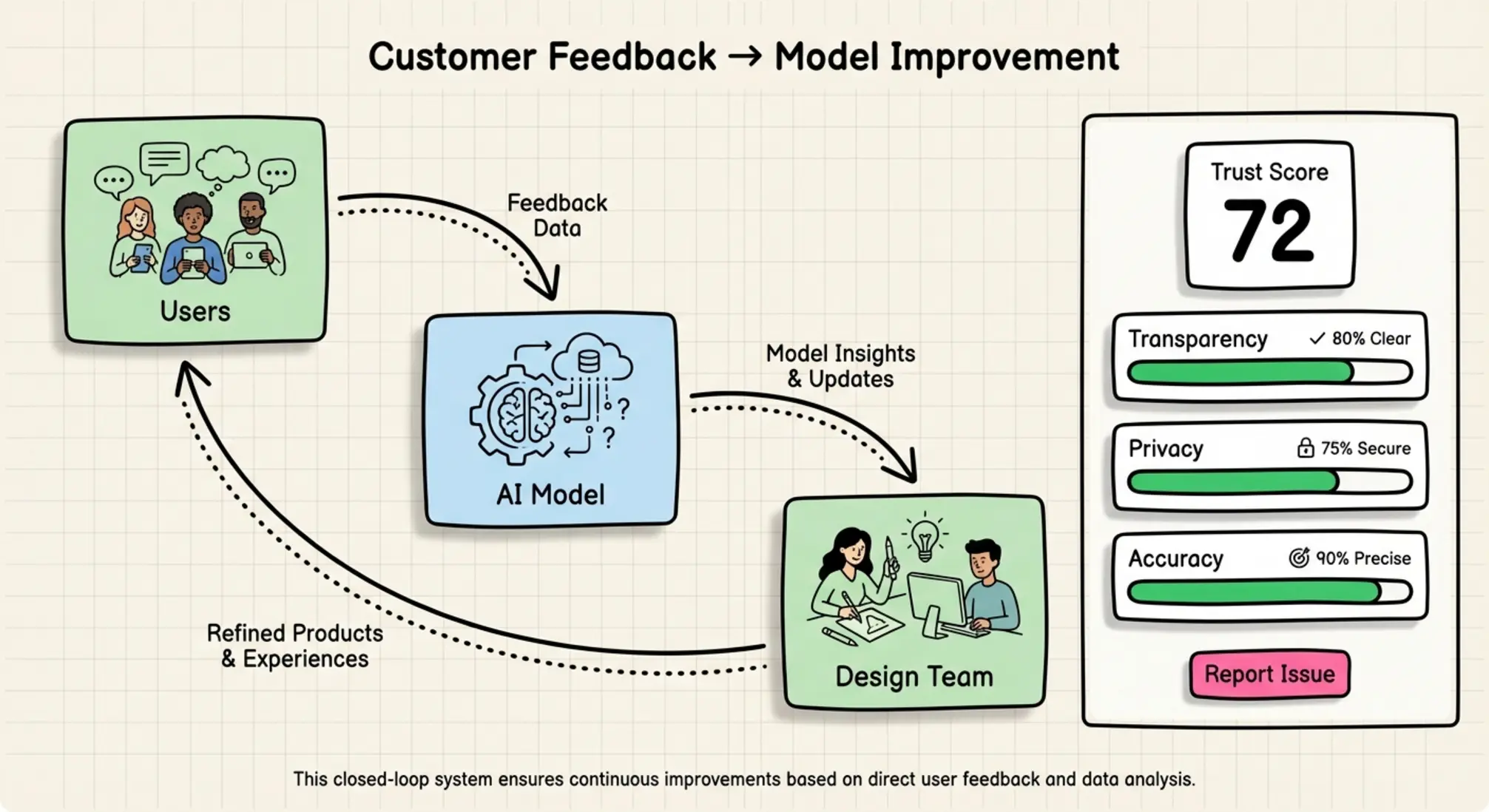

Explainable AI (XAI): Demystifying the Black Box

Customers need to understand why an AI made a particular suggestion or decision. This isn't just about technical interpretability; it's about clear, human-understandable explanations. Design for clarity by:

- Providing Context: Show the data points or rules that led to an AI's output.

- Visualizing Decisions: Use intuitive visual cues to explain complex AI logic.

- Transparency in Limitations: Clearly communicate what the AI cannot do or areas where its confidence is lower.

Bias Mitigation in UX: Actionable Design for Fairness

AI bias isn't just a data problem; it's a design problem. For example, if an AI is trained on biased recruitment data, its output will perpetuate that bias unless mitigated through thoughtful design.

- Fairness by Design: Actively design interfaces that prompt for diverse input or flag potentially biased outputs.

- User Feedback for Bias Detection: Implement clear mechanisms for users to report perceived bias, creating a feedback loop for model improvement.

- Auditable Traceability: Ensure every AI decision can be traced back to its inputs and logic, allowing for scrutiny and correction.

Privacy by Design: Protecting Customer Data from the Ground Up

With tools like Pieces for Developers offering local-first LLM execution for privacy, the expectation for data security is high. Privacy by Design means embedding data protection into every stage of your AI solution's development and deployment.

- Minimal Data Collection: Only collect data absolutely necessary for the AI's function.

- Explicit Consent: Always obtain clear, informed consent for data usage.

- Robust Anonymization: Anonymize or pseudonymize sensitive data where possible.

- Secure Infrastructure: Deploy AI on secure infrastructure, adhering to standards like SOC 2 compliance (as offered by Qodo). Our guide to AI infrastructure can help you build this foundation.
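
To make minimal data collection and pseudonymization concrete, here is a small sketch using only Python's standard library. The field names in `ALLOWED_FIELDS` and the `prepare_record` helper are assumptions made for illustration; your own allow-list will depend on what the AI feature actually needs. Note that a keyed hash is pseudonymization, not anonymization: anyone holding the key can re-link records, so the key needs the same protection as the data.

```python
import hashlib
import hmac

# Hypothetical allow-list: the only fields this AI feature needs.
ALLOWED_FIELDS = {"customer_id", "message", "product"}


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256)."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_record(raw: dict, secret_key: bytes) -> dict:
    """Drop fields that are not on the allow-list, then pseudonymize the ID."""
    record = {k: v for k, v in raw.items() if k in ALLOWED_FIELDS}
    if "customer_id" in record:
        record["customer_id"] = pseudonymize(str(record["customer_id"]), secret_key)
    return record
```

Because the same key and input always produce the same hash, records from one customer can still be grouped for analysis without exposing the original identifier.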

Case Studies: XAID in Action

Consider a customer service AI. If it can explain why it's suggesting a particular solution based on past interactions, customer trust increases. Conversely, if it provides generic, unhelpful responses without context, it erodes trust. Investing in world-class AI implementations means adopting AI the "right way," with XAID at its core.

From Research to Reality: User Research Methods for AI Product Development

Effective AI adoption begins long before deployment, with thorough user research.

Adapting Traditional UX Research for AI

Traditional UX methods are still invaluable:

- Interviews: Understand user pain points, expectations, and mental models surrounding AI. What are their fears? What do they hope AI can do?

- Usability Testing: Observe users interacting with AI prototypes. Does the explainability feature actually help them understand? Is the handoff mechanism clear?

- Surveys: Gather quantitative data on preferences, satisfaction, and perceived utility.

AI-Specific Research: Prototyping and Managing Expectations

Developing AI requires specialized research techniques:

- Wizard-of-Oz Prototyping: Simulate AI capabilities with human operators behind the scenes to test interactions before developing the full model. This helps gather feedback on perceived intelligence and responsiveness.

- Expectation Priming: Test how different ways of introducing AI (e.g., "AI assistant" vs. "intelligent tool") affect user expectations and initial trust. Over-promising AI capabilities can lead to user disappointment and abandonment.

- Testing Explainability: Design specific tests to ensure users can comprehend the rationale behind the AI's actions.

Feedback Loops for AI Improvement: Designing for Perpetual Refinement

The journey doesn't end at launch. Continuous feedback is vital for refining AI models and UX. This isn't just about basic rating systems; it's about structured frameworks that integrate customer feedback directly into AI model improvement cycles.

- In-App Feedback: Embed simple, contextual feedback mechanisms directly within the AI interaction points (e.g., "Was this helpful?").

- Sentiment Analysis: Use AI itself to analyze customer interactions for sentiment, identifying areas of frustration or delight.

- Human Review & Annotation: A critical component for supervised learning. Human experts review AI outputs and user interactions, correcting errors and labeling data to retrain models. This ensures improvements are grounded in real-world scenarios.

- A/B Testing AI Features: Continuously test different AI responses, interface elements, or explainability methods to optimize for user adoption and satisfaction.
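
As a rough sketch of how in-app feedback and A/B testing fit together, the snippet below compares "Was this helpful?" rates between two variants with a standard two-proportion z-test. The function names are hypothetical, and a real experiment would also need a sample size and stopping rule decided up front.

```python
import math


def helpful_rate(helpful: int, total: int) -> float:
    """Share of responses marked helpful (0.0 when there is no data)."""
    return helpful / total if total else 0.0


def two_proportion_z(helpful_a: int, total_a: int,
                     helpful_b: int, total_b: int) -> float:
    """z-statistic for the difference in helpfulness rate (B minus A)."""
    p_a = helpful_rate(helpful_a, total_a)
    p_b = helpful_rate(helpful_b, total_b)
    # Pooled rate under the assumption that both variants perform the same.
    pooled = (helpful_a + helpful_b) / (total_a + total_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if std_err == 0:
        return 0.0  # no variation at all, so no measurable difference
    return (p_b - p_a) / std_err
```

An absolute z-value above roughly 1.96 corresponds to the conventional 5% significance level for a two-sided test; smaller values suggest the difference could easily be noise.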

Addressing the Elephant in the Room: AI Liability & Accountability

As you evaluate AI solutions, a critical question looms: who is accountable when AI "fails"? This isn't a hypothetical. Emerging regulations, like the EU AI Act, are beginning to define legal frameworks around AI.

Shared Responsibility: Developers, Deployers, Data Providers, Users

Accountability in AI is often a shared responsibility:

- Developers: Responsible for the design, training data, and ethical considerations embedded in the model.

- Deployers (Your Company): Responsible for how the AI is used, the context of its deployment, and ensuring human oversight.

- Data Providers: Responsible for the quality, fairness, and consent of the training data.

- Users: Responsible for how they interact with and interpret the AI's outputs, especially if they have agency to override.

Designing for Accountability: Ensuring Traceability and Oversight

Proactive design can significantly mitigate liability:

- Robust Logging: Every AI decision and human interaction with the AI should be thoroughly logged.

- Audit Trails: Maintain clear audit trails of model versions, training data, and performance metrics.

- Human Oversight Mechanisms: Design clear human review and intervention points, especially for high-stakes decisions. This aligns with a "human-first, AI-second" philosophy.
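
A logging and audit-trail record might look something like the sketch below. The field names and the `build_decision_record` and `append_to_log` helpers are illustrative assumptions, not a standard schema. The example stores a hash of the inputs rather than the inputs themselves, which lets an auditor verify what the model saw without duplicating customer data in the log; if you need to replay decisions, you would store the inputs in a separate, access-controlled location.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Optional


def build_decision_record(model_version: str, inputs: dict, output: str,
                          confidence: float,
                          human_action: Optional[str] = None) -> dict:
    """Build one audit-trail entry for a single AI decision."""
    # Sort keys so the same inputs always produce the same fingerprint.
    inputs_json = json.dumps(inputs, sort_keys=True)
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "inputs_sha256": hashlib.sha256(inputs_json.encode("utf-8")).hexdigest(),
        "output": output,
        "confidence": confidence,
        # e.g. "approved", "overridden", or None if no human was involved
        "human_action": human_action,
    }


def append_to_log(record: dict, path: str) -> None:
    """Append the record as one JSON line to an append-only log file."""
    with open(path, "a", encoding="utf-8") as log_file:
        log_file.write(json.dumps(record) + "\n")
```

Recording the model version and the human action alongside each output is what ties a single decision back to a specific model release and a specific reviewer.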

Developing an "AI Agent Ecosystem" with these principles baked in is crucial, as we discuss in our AI agent ecosystem guide.

The Future is Empathetic: Emerging Trends in Customer-Centric AI

The horizon of AI is constantly expanding, offering even more powerful ways to serve your customers.

- Advanced NLP & Generative AI: Beyond simple chatbots, we're seeing AI capable of multi-step conversations, understanding subtle nuances, and generating thoughtful, human-like responses. This enables true conversational commerce and support.

- Hyper-Personalization & Predictive AI: AI can now anticipate customer needs, tailoring experiences before they even articulate them. This moves beyond basic recommendations to truly proactive engagement, driving significant customer satisfaction.

- Ethical Transparency as a Feature: Building trust through clear communication about AI's capabilities and limitations will become a primary differentiator. Tools that allow users to inspect the reasoning of an AI will lead the way.

- Agentic AI for Customer Support: Autonomous AI agents are evolving to handle complex tasks with minimal human intervention, from managing customer inquiries across channels to processing returns and troubleshooting, all while maintaining human oversight for critical exceptions. This is key for intelligent automation and RPA at scale.

FAQ: Your Customer-Centric AI Questions, Answered

Q1: How can we ensure our AI solutions don't alienate customers?

The key is to prioritize Responsible AI Experience Design (XAID), focusing on transparency, explainability, user control, and bias mitigation. Actively involve customers in the design process through adapted UX research methods, and always maintain clear human oversight and fallback options. Remember the 14% of consumers who explicitly distrust AI; proactive trust-building is crucial.

Q2: What's the best way to measure the success of customer-centric AI?

Beyond traditional ROI (like the 55% coding speed increase for developers using GitHub Copilot), focus on metrics directly related to customer experience:

- Adoption Rates: How many customers are actively using the AI feature?

- Task Success Rate: Are customers achieving their goals with the AI's help?

- Customer Satisfaction (CSAT) & Net Promoter Score (NPS): Do customers feel the AI improves their experience?

- Trust Metrics: Surveys and qualitative feedback can gauge customer trust.

- Efficiency Gains: Did the AI reduce customer effort or waiting times?
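
Several of these metrics reduce to simple arithmetic. The sketch below uses the conventional definitions: NPS is the percentage of promoters (scores of 9 or 10 on a 0 to 10 scale) minus the percentage of detractors (scores of 0 to 6), and CSAT is taken here as the share of ratings of 4 or 5 on a 5-point scale. The function names are hypothetical, and your survey scales may differ.

```python
def rate(part: int, whole: int) -> float:
    """Percentage of `part` in `whole`, e.g. adoption or task success rate."""
    return 100.0 * part / whole if whole else 0.0


def nps(scores: list) -> float:
    """Net Promoter Score from 0-10 survey answers."""
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return rate(promoters, len(scores)) - rate(detractors, len(scores))


def csat(ratings: list) -> float:
    """CSAT as the share of 4s and 5s on a 5-point scale."""
    satisfied = sum(1 for r in ratings if r >= 4)
    return rate(satisfied, len(ratings))
```

For example, `rate(active_users, eligible_users)` gives an adoption rate and `rate(completed_tasks, attempted_tasks)` gives a task success rate, provided you define "active" and "completed" consistently over time.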

We can help you set up robust ways to measure AI performance that align with your customer goals.

Q3: Our internal teams are hesitant to adopt new AI tools. How can we overcome this?

Internal adoption is just as critical as customer adoption. Address your team's unspoken concerns directly:

- Clarify Roles: Emphasize AI as an "assistant" that handles repetitive tasks (the 30% rule), freeing up humans for high-value strategic work.

- Comprehensive Training: Provide hands-on training that demystifies AI and demonstrates its benefits. This is a core offering from BenAI.

- Involve Them in Design: Empower employees to contribute to how AI integrates into their workflows, fostering a sense of ownership.

- Show, Don't Just Tell: Demonstrate tangible benefits like reduced manual work and higher job satisfaction.

Q4: How do we handle AI bias in customer-facing applications?

Address bias at every stage:

- Data Curation: Ensure diverse and representative training data.

- Model Design: Use bias detection tools during development.

- UX Design (XAID): Build interfaces that flag potential biases, allow user corrections, and provide transparency around AI decisions.

- Continuous Monitoring: Implement feedback loops and human-in-the-loop systems to constantly monitor for and correct emergent biases. This is essential, as 66% of Americans fear AI bias in hiring.

Q5: Is developing custom AI solutions worth the investment compared to off-the-shelf tools?

For unique business challenges and specific customer needs, custom AI solutions often yield far greater value. Off-the-shelf tools might offer a base, but they rarely integrate seamlessly or address niche requirements. Custom solutions, like those provided by BenAI, ensure the AI is precisely tailored to your customers' journey, your internal workflows, and your brand's ethical standards, leading to higher adoption and a significant competitive advantage. For enterprise clients, this customized approach starts at around $10,000 per month and covers AI implementations, training, and consulting.

Building a Better AI Future, Together

The shift to an AI-first business isn't just about technology; it's about people. By meticulously designing AI solutions with your customers' needs, trust, and adoption squarely in mind, you move beyond the hype and unlock the profound potential of artificial intelligence.

At BenAI, we're not just offering tools; we're providing the strategic guidance, custom implementations, and comprehensive training needed to transform your business. From automating your marketing content generation to building an intelligent content system, we ensure your AI investments create capacity and drive growth without increasing headcount.

Ready to build an AI-first business that truly resonates with your customers? Explore our tailored AI Marketing Solutions, AI Recruiting Solutions, or Enterprise Solutions. Your AI-first business starts here.

