The promise of AI for business transformation is clear, yet the path to becoming truly "AI-first" can often feel obscured by a dizzying array of technological choices. You're evaluating not just tools, but the very infrastructure that will define your organization's future competitiveness. This isn't about simply adopting new software; it's about building a robust, scalable, and intelligent foundation. Every decision, from data architecture to cloud strategy, has profound implications for your AI ambitions.

Our goal here at BenAI is to cut through that complexity, providing the authoritative guidance you need to make confident, strategic decisions about your AI technology infrastructure. We understand that amidst the hype, you seek practical, proven strategies that drive tangible outcomes for your business.

Laying the Foundation: The Technology Infrastructure for AI-First Businesses

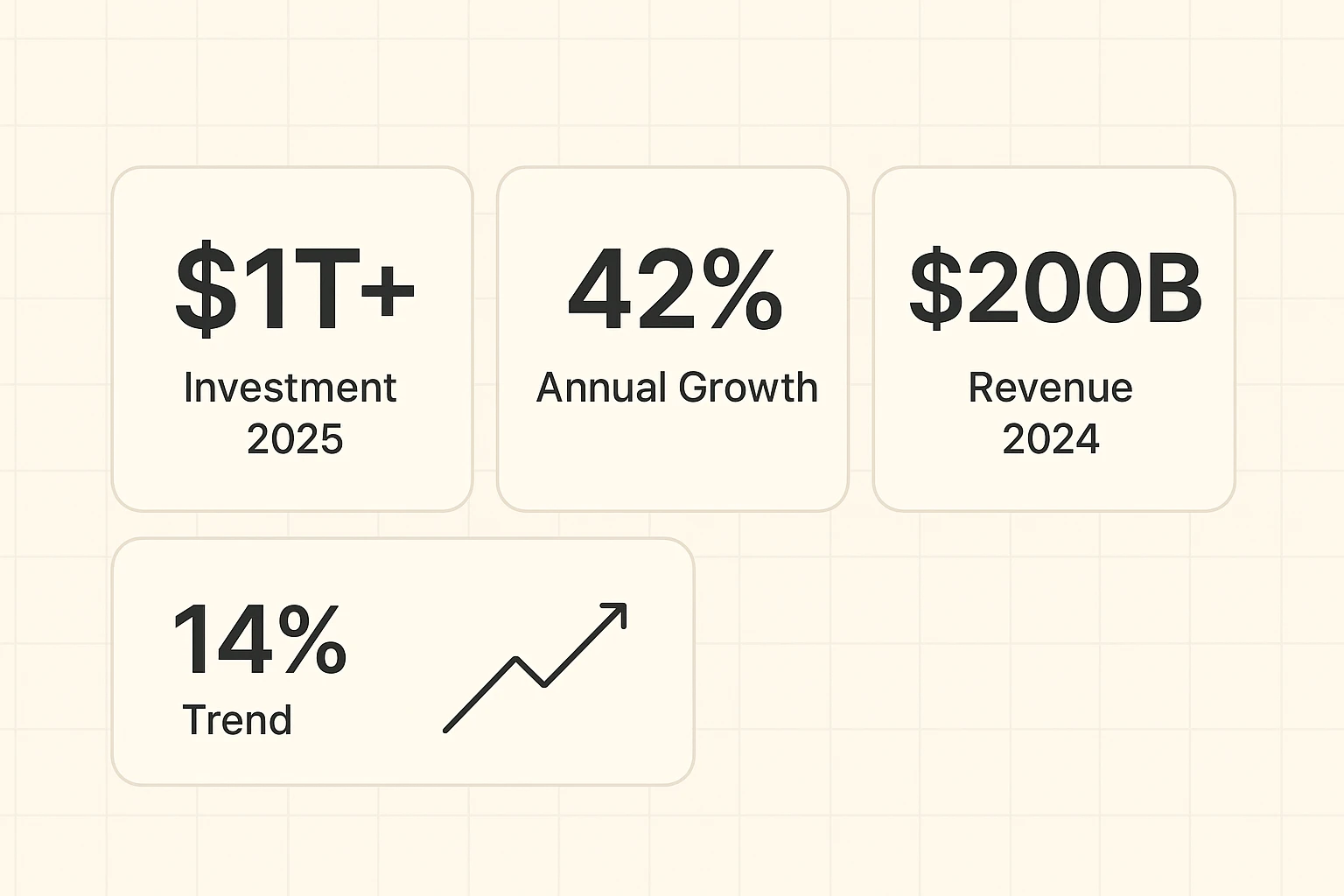

The AI infrastructure market is experiencing unprecedented growth, with investments projected to exceed $1 trillion in 2025 alone and to reach $3 trillion to $4 trillion by the end of the decade. Major tech players such as Microsoft and Meta are pouring tens of billions of dollars into this space (Perplexity Source 1, Source 4). This staggering investment underscores one point: a robust, scalable, and efficient technological foundation is no longer optional for businesses aiming to use AI for growth.

But what does this 'foundation' actually entail? It's far more than powerful computers. It's a layered ecosystem designed to support every stage of the AI lifecycle, from data ingestion to model deployment and continuous optimization. For an "AI-first business," this infrastructure is the strategic backbone that makes it possible to automate tasks, reduce manual work, and create capacity without necessarily increasing headcount. It's how businesses can scale dramatically faster and unlock new levels of efficiency.

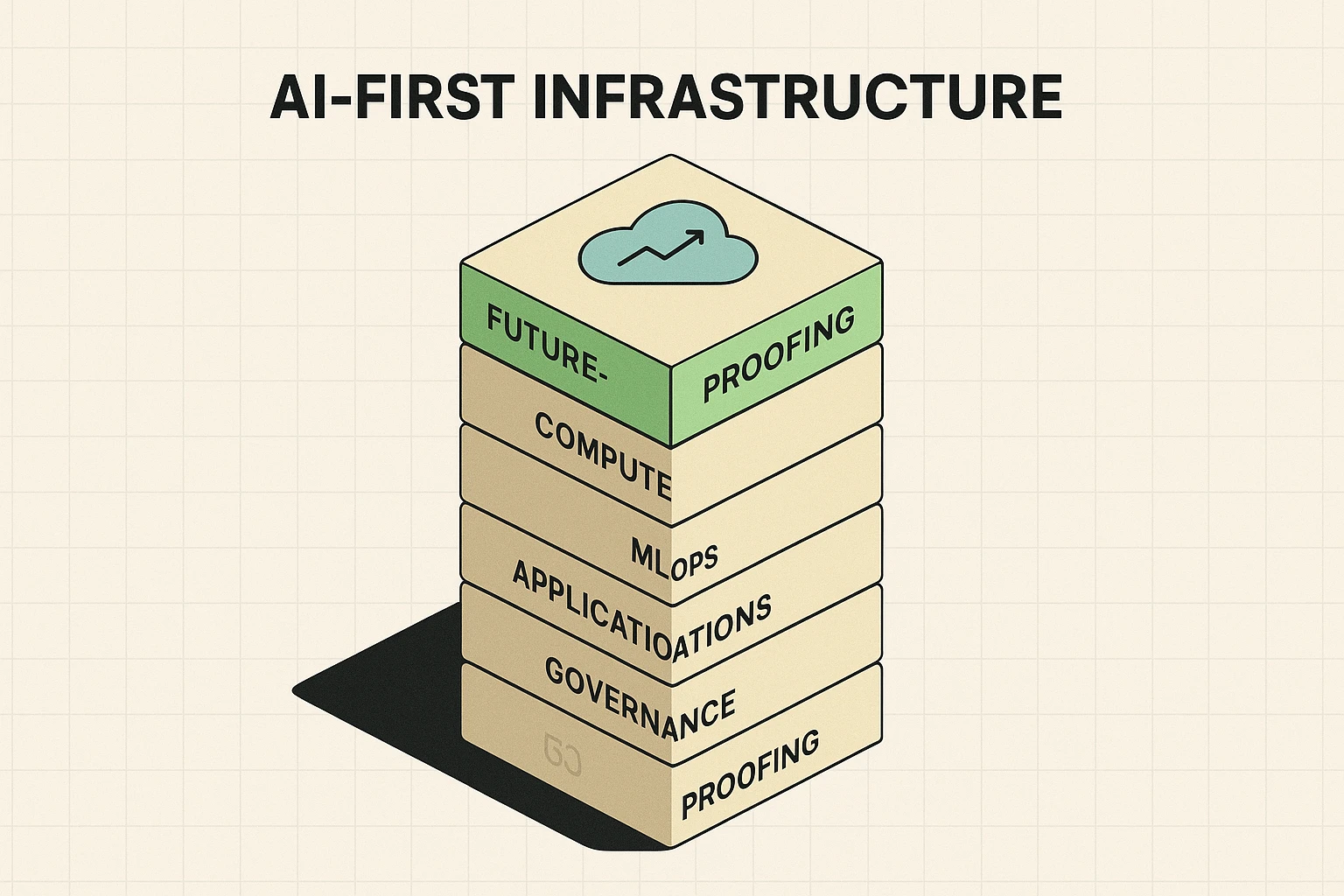

The AI Infrastructure Stack: A Layered Blueprint for Success

Think of your AI infrastructure as a multi-layered modern skyscraper, where each level supports and interacts with the others to create a powerful, cohesive whole.

Layer 1: Compute – The Engine Room

On the ground floor of this skyscraper is compute: the raw processing power that fuels all AI operations. This includes Graphics Processing Units (GPUs), Tensor Processing Units (TPUs), and other specialized AI accelerators. While raw power is essential, the focus is increasingly shifting toward efficiency and specialization.

Organizations face a critical choice: on-premise, cloud, or edge computing?

- On-premise offers maximum control and data residency, but demands significant upfront capital expenditure and specialized IT teams.

- Cloud computing (such as NVIDIA's cloud tools for generative AI, or Google Cloud) provides flexibility, scalability, and access to cutting-edge hardware without the capital outlay. However, businesses must carefully weigh cost optimization, regulatory compliance, and potential vendor lock-in. For AI data storage, 60% of organizations use private cloud, 48% use hybrid cloud, and 47% use public cloud (Perplexity Source 1). Because many organizations use more than one model, these shares overlap, which highlights a diversified approach.

- Edge computing brings AI processing closer to the data source, reducing latency and bandwidth requirements, which is crucial for real-time applications.

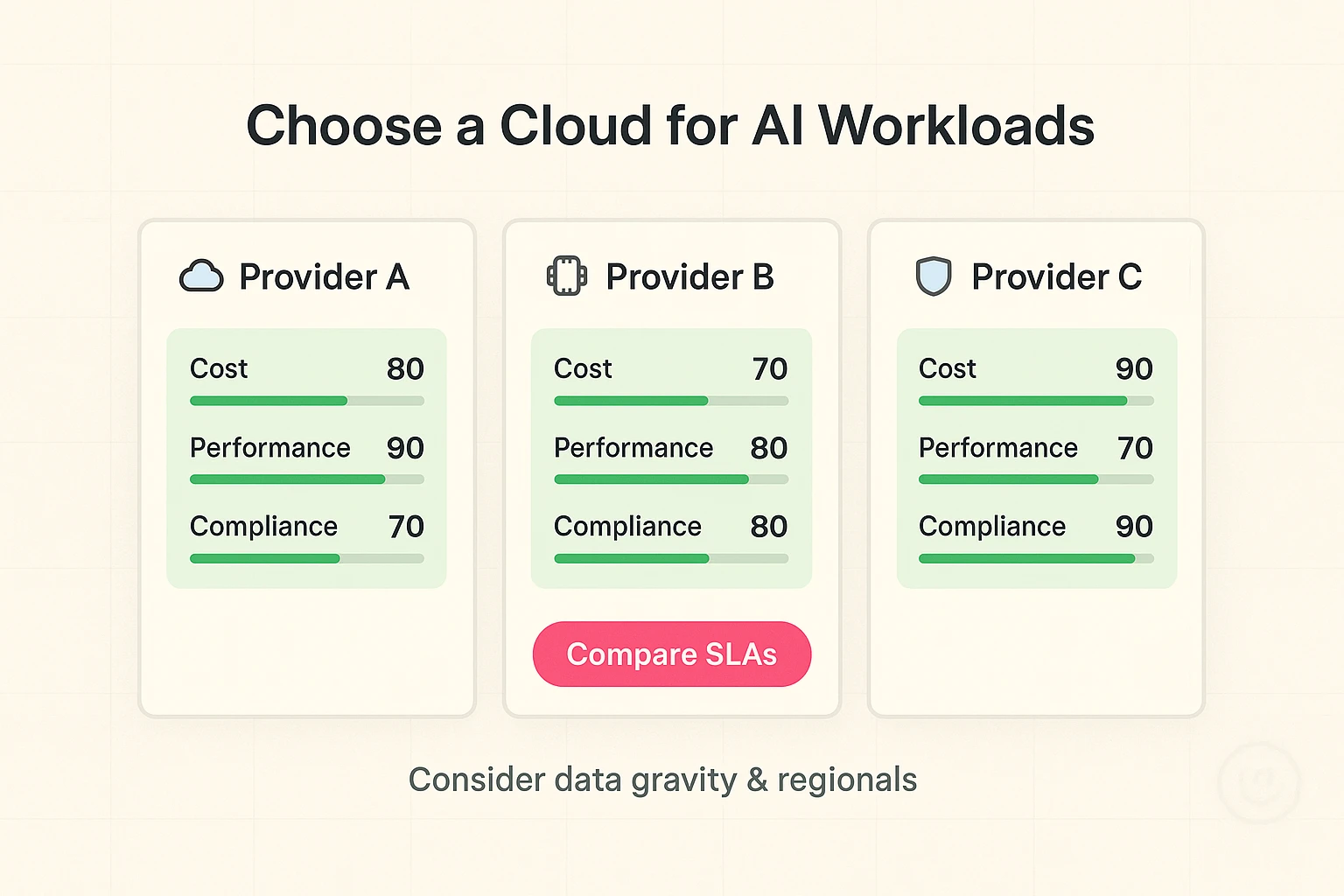

Choosing the right blend requires a strategic understanding of your unique AI workloads, data gravity, and sensitivity.

Layer 2: Data Architecture – The Lifeblood of AI

No AI model is better than the data it's trained on. This layer covers how you collect, store, process, and manage your data to make it "AI-ready." The principle of "garbage in, garbage out" (GIGO) remains paramount for AI accuracy (SerpScraper "data for ai" overview). Quality, governance, and ethical sourcing of your data are non-negotiable.
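To make the GIGO principle concrete, here is a minimal sketch of a data quality gate that runs before training. It assumes records arrive as Python dicts; the schema, the field names (`customer_id`, `age`, `label`), and the age bounds are hypothetical choices for illustration, not a prescribed standard.

```python
from dataclasses import dataclass, field

# Hypothetical schema for illustration: field name -> (expected type, required?)
SCHEMA = {
    "customer_id": (str, True),
    "age": (int, True),
    "label": (str, True),
}
AGE_RANGE = (0, 120)  # hypothetical plausibility bounds


@dataclass
class QualityReport:
    total: int = 0
    passed: int = 0
    issues: list[str] = field(default_factory=list)

    @property
    def pass_rate(self) -> float:
        return self.passed / self.total if self.total else 0.0


def validate(records: list[dict]) -> tuple[list[dict], QualityReport]:
    """Keep records that pass schema, range, and duplicate checks; report the rest."""
    report = QualityReport(total=len(records))
    clean: list[dict] = []
    seen_ids: set[str] = set()
    for i, rec in enumerate(records):
        problems = []
        # Schema check: required fields present and of the expected type.
        for name, (expected_type, required) in SCHEMA.items():
            value = rec.get(name)
            if value is None:
                if required:
                    problems.append(f"missing {name}")
            elif not isinstance(value, expected_type):
                problems.append(f"{name} is {type(value).__name__}")
        # Range check: catch implausible values such as typos in numeric fields.
        age = rec.get("age")
        if isinstance(age, int) and not AGE_RANGE[0] <= age <= AGE_RANGE[1]:
            problems.append(f"age {age} out of range")
        # Duplicate check: the same entity should not be counted twice.
        cid = rec.get("customer_id")
        if isinstance(cid, str) and cid in seen_ids:
            problems.append(f"duplicate customer_id {cid}")
        if problems:
            report.issues.append(f"row {i}: " + ", ".join(problems))
        else:
            seen_ids.add(cid)
            clean.append(rec)
            report.passed += 1
    return clean, report


rows = [
    {"customer_id": "a1", "age": 34, "label": "churn"},
    {"customer_id": "a1", "age": 40, "label": "stay"},   # duplicate id
    {"customer_id": "b2", "age": 430, "label": "stay"},  # implausible age
    {"customer_id": "c3", "label": "stay"},              # missing age
]
clean, report = validate(rows)
print(f"kept {report.passed}/{report.total} rows")
for issue in report.issues:
    print(issue)
```

Rejected rows are reported rather than silently dropped, so data owners can fix problems at the source instead of having them resurface in the next training run.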

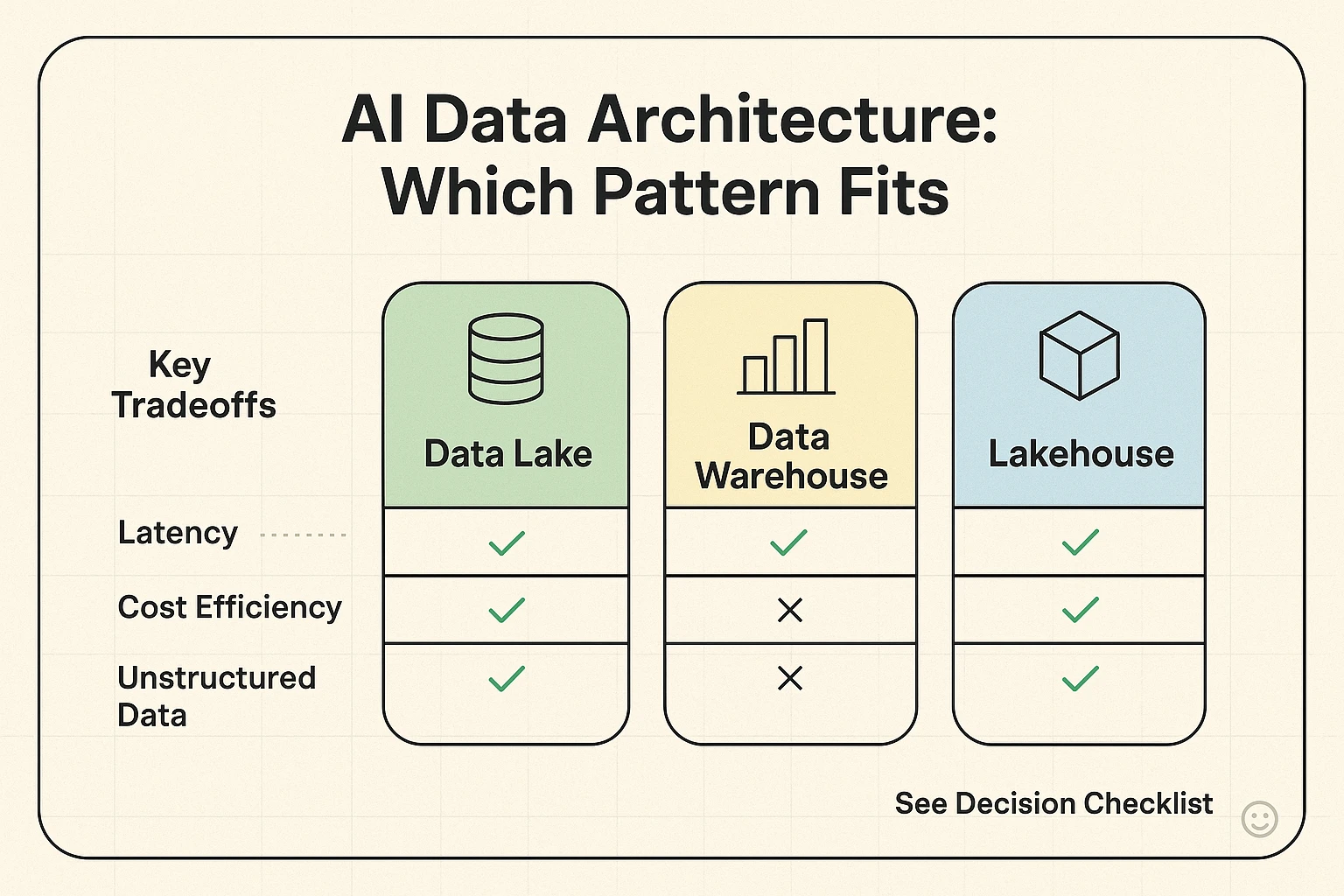

A common challenge is deciding between Data Lakes, Data Warehouses, and Data Lakehouses for AI workloads:

- Data Lakes store vast amounts of raw data in its native format, including unstructured and multimodal data, which makes them well suited to exploratory analytics and to newer AI models such as large language models.

- Data Warehouses house structured, cleaned data for traditional business intelligence, ensuring high-quality input for specific, well-defined AI tasks.

- Data Lakehouses combine the flexibility of data lakes with the structure of data warehouses, offering a powerful, unified platform for diverse AI needs.

For modern generative AI, Vector Databases are becoming increasingly critical. They efficiently store and query high-dimensional embeddings, enabling semantic search, recommendation engines, and contextual understanding crucial for sophisticated AI applications. Our expertise in tailoring AI solutions means we guide you through these choices to optimize your data pipeline.
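At its core, a vector database answers "which stored embeddings are most similar to this query?" The sketch below shows exact (brute-force) cosine-similarity search, which production vector databases approximate at scale with indexes such as HNSW. The class name `TinyVectorIndex`, the document keys, and the three-dimensional vectors are invented for illustration; real embeddings come from an embedding model and have hundreds or thousands of dimensions.

```python
import heapq
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: dot product divided by the product of the vector norms."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class TinyVectorIndex:
    """Hypothetical in-memory index that scores every stored vector (exact search)."""

    def __init__(self) -> None:
        self._items: dict[str, list[float]] = {}

    def upsert(self, key: str, embedding: list[float]) -> None:
        self._items[key] = embedding

    def search(self, query: list[float], k: int = 3) -> list[tuple[str, float]]:
        """Return the k keys most similar to the query, best match first."""
        scored = ((key, cosine(query, emb)) for key, emb in self._items.items())
        return heapq.nlargest(k, scored, key=lambda pair: pair[1])


# Toy "embeddings" for three help-center articles.
index = TinyVectorIndex()
index.upsert("refund policy", [0.9, 0.1, 0.0])
index.upsert("shipping times", [0.1, 0.9, 0.1])
index.upsert("return an item", [0.8, 0.2, 0.1])

results = index.search([1.0, 0.0, 0.0], k=2)
for key, score in results:
    print(f"{key}: {score:.3f}")
```

Brute force scales linearly with the number of stored vectors, which is exactly why dedicated vector databases invest in approximate nearest-neighbor indexes.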

Layer 3: AI Development & MLOps Platforms – The Factory Floor

This layer represents where AI models are built, trained, and refined. It encompasses the tools and processes that streamline the entire AI lifecycle.

- Frameworks: Leading frameworks like PyTorch and TensorFlow provide the foundational libraries for building models. The choice often depends on the specific project requirements, community support, and existing team expertise.

- MLOps (Machine Learning Operations): This discipline is crucial for enterprise AI. It focuses on automating and standardizing the development, deployment, and monitoring of ML models. MLOps platforms help bridge the gap between data scientists and operations teams, ensuring models are reliable, scalable, and perform as expected in production. Coherent Solutions' practical guide to building an AI tech stack places similar emphasis on MLOps.

- Explainable AI (XAI): Addressing the "black box" problem—where AI models make decisions without clear, human-understandable reasoning—is paramount. Especially in regulated industries, XAI tools and techniques provide transparency, helping to audit, interpret, and communicate AI decisions to non-technical stakeholders. Duke University's Deep Tech program highlights the governance layer as "a framework that wraps around the whole AI technology stack," emphasizing responsible deployment and security.
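One widely used, model-agnostic XAI technique is permutation feature importance: shuffle one input feature across rows and measure how much accuracy drops. The sketch below applies it to a hypothetical hand-written approval rule that stands in for a trained model; the feature names, synthetic data, and rule are all invented for illustration. In practice you would likely reach for a library implementation such as scikit-learn's `sklearn.inspection.permutation_importance`.

```python
import random


# Hypothetical "model": approves when income is high and debt ratio is low.
# It never looks at "zip_digit", so that feature should score zero importance.
def model(row: dict) -> int:
    return int(row["income"] > 50 and row["debt_ratio"] < 0.4)


def accuracy(rows: list[dict], labels: list[int]) -> float:
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(rows, labels, feature, repeats=20, seed=0):
    """Mean drop in accuracy when `feature` is shuffled across rows."""
    rng = random.Random(seed)
    baseline = accuracy(rows, labels)
    drops = []
    for _ in range(repeats):
        values = [r[feature] for r in rows]
        rng.shuffle(values)
        shuffled = [{**r, feature: v} for r, v in zip(rows, values)]
        drops.append(baseline - accuracy(shuffled, labels))
    return sum(drops) / repeats


# Synthetic data labeled by the same rule, so baseline accuracy is 1.0.
data_rng = random.Random(42)
rows = [
    {
        "income": data_rng.uniform(20, 100),
        "debt_ratio": data_rng.uniform(0.0, 0.8),
        "zip_digit": data_rng.randint(0, 9),
    }
    for _ in range(500)
]
labels = [model(r) for r in rows]

importances = {
    f: permutation_importance(rows, labels, f)
    for f in ["income", "debt_ratio", "zip_digit"]
}
for name, score in sorted(importances.items(), key=lambda kv: -kv[1]):
    print(f"{name:>10}: {score:.3f}")
```

The output is the kind of artifact a non-technical reviewer can read: the decision depends on income and debt ratio, and the irrelevant feature contributes nothing.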

Layer 4: Application & Integration – The User Interface & Beyond

This layer is where AI capabilities are brought to life and integrated into your business processes. It's about how users interact with AI and how AI augments existing systems.

- APIs and Microservices: These are the conduits for seamless AI integration. By exposing AI models as APIs, businesses can embed intelligence into existing applications, websites, and workflows without rebuilding entire systems from scratch. For instance, integrating specialized AI tools for marketing automation, such as those for content generation, requires robust API strategies. Learn more about how this works in our insights on AI SEO marketing stack integration.

- Beyond Chatbots: While chatbots represent a common application, the frontier extends to innovative user experiences. Concepts like "proactive AI" (which anticipates needs) and "overnight AI" (which autonomously completes complex tasks) are emerging as impactful applications, as highlighted by Towards Data Science. This shift moves beyond conventional interfaces to truly embed AI as an intrinsic part of operations.

- "Thick Wrappers": This term refers to building sophisticated applications around foundation models, using proprietary data and domain expertise to create unique, defensible solutions. For example, if you're building an AI agent that scrapes specific datasets, you're creating a specialized application layer. To learn more, see our guide on how to build an AI agent that scrapes anything.
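To illustrate what "exposing a model as an API" means in practice, here is a minimal sketch using only Python's standard library `http.server`. The `/predict` route, the JSON field names, and the `score_lead` function are hypothetical placeholders for a real model; a production service would add authentication, rate limiting, and typically a dedicated web framework or model server.

```python
import json
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


def score_lead(features: dict) -> float:
    """Hypothetical stand-in for a trained model: returns a score in [0, 1]."""
    visits = float(features.get("site_visits", 0))
    opened = 1.0 if features.get("opened_email") else 0.0
    return min(1.0, 0.05 * visits + 0.3 * opened)


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        if self.path != "/predict":
            self.send_error(404, "unknown route")
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length) or b"{}")
            if not isinstance(payload, dict):
                raise ValueError("expected a JSON object")
            score = score_lead(payload)
        except (ValueError, TypeError):  # includes json.JSONDecodeError
            self.send_error(400, "body must be a JSON object of features")
            return
        body = json.dumps({"score": score}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args) -> None:
        pass  # silence per-request logging for the demo


def make_server(port: int = 8000) -> ThreadingHTTPServer:
    """Bind the service to localhost; port 0 lets the OS pick a free port."""
    return ThreadingHTTPServer(("127.0.0.1", port), PredictHandler)
```

To try it, call `make_server(8000).serve_forever()` and POST a body such as `{"site_visits": 4, "opened_email": true}` to `http://127.0.0.1:8000/predict`. The calling application only depends on the JSON contract, so the model behind `score_lead` can be retrained or replaced without touching the systems that consume it.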

Layer 5: Governance, Security & Compliance – The Oversight & Trust Layer

The uppermost layer ensures that your AI infrastructure operates responsibly, securely, and in adherence to legal and ethical standards.

- Ethical AI: This involves continuously monitoring for biases, ensuring fairness, and maintaining transparency in AI decision-making. Tools for bias detection and mitigation are crucial.

- Data Privacy & Regulatory Adherence: With regulations like GDPR, CCPA, and emerging frameworks like the EU AI Act, robust data privacy measures and compliance infrastructure are vital to protect sensitive information.

- Security Protocols: Securing AI models, data pipelines, and inference endpoints from cyber threats is paramount to prevent intellectual property theft, data breaches, and system manipulation.
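Bias monitoring often starts with simple group-level metrics. The sketch below computes the demographic parity difference, the gap between the highest and lowest positive-outcome rates across groups, for a batch of model decisions. The group labels, decisions, and the 0.1 alert threshold are hypothetical, and which fairness metric is appropriate depends on the use case and the applicable regulation.

```python
from collections import defaultdict


def selection_rates(decisions: list[int], groups: list[str]) -> dict[str, float]:
    """Share of positive decisions (1s) within each group."""
    positives: dict[str, int] = defaultdict(int)
    totals: dict[str, int] = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(decisions: list[int], groups: list[str]) -> float:
    """Largest minus smallest selection rate across groups (0 means parity)."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical batch of loan decisions (1 = approved) tagged by applicant group.
decisions = [1, 1, 1, 0, 1, 0, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

ALERT_THRESHOLD = 0.1  # hypothetical tolerance set by a governance policy
gap = demographic_parity_difference(decisions, groups)
print(selection_rates(decisions, groups), f"gap={gap:.2f}")
if gap > ALERT_THRESHOLD:
    print("Flag for review: approval rates differ across groups")
```

A large gap is a signal to investigate, not proof of unfair treatment; the follow-up review is where domain context and legal requirements come in.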

Key Challenges & Overlooked Technical Details in AI Infrastructure

While the promise of AI is vast, building and maintaining an AI infrastructure comes with significant, often underestimated, challenges. Ignoring these can lead to costly inefficiencies and stalled AI initiatives.

- Memory Limitations: The Unseen Bottleneck. Large AI models, especially generative ones, demand enormous amounts of memory. This isn't just about storage; it's about the speed at which data can be accessed and processed. Memory bandwidth is frequently the true performance bottleneck, even more so than raw computational FLOPs. Optimizing data transfer, leveraging high-bandwidth memory (HBM), and adopting efficient memory management strategies are critical.

- Advanced Networking: North-South vs. East-West Traffic. As AI workloads become more distributed (e.g., across multiple GPUs in a cluster), internal (East-West) traffic between compute nodes becomes as critical as, or even more critical than, external (North-South) traffic to and from the data center. High-speed, low-latency interconnects are essential for scalable AI training and inference.

- Power & Sustainability: Managing Extreme Energy Demands. AI workloads are extremely power-hungry. Data centers supporting AI infrastructure face unprecedented power demands, with AI data center power demand in the US alone projected to grow more than thirtyfold by 2035 (Perplexity Source 2, Source 4). This raises concerns about sustainability, environmental impact, and the sheer availability of power. Strategic siting of data centers and investment in energy-efficient hardware are becoming paramount for long-term viability.

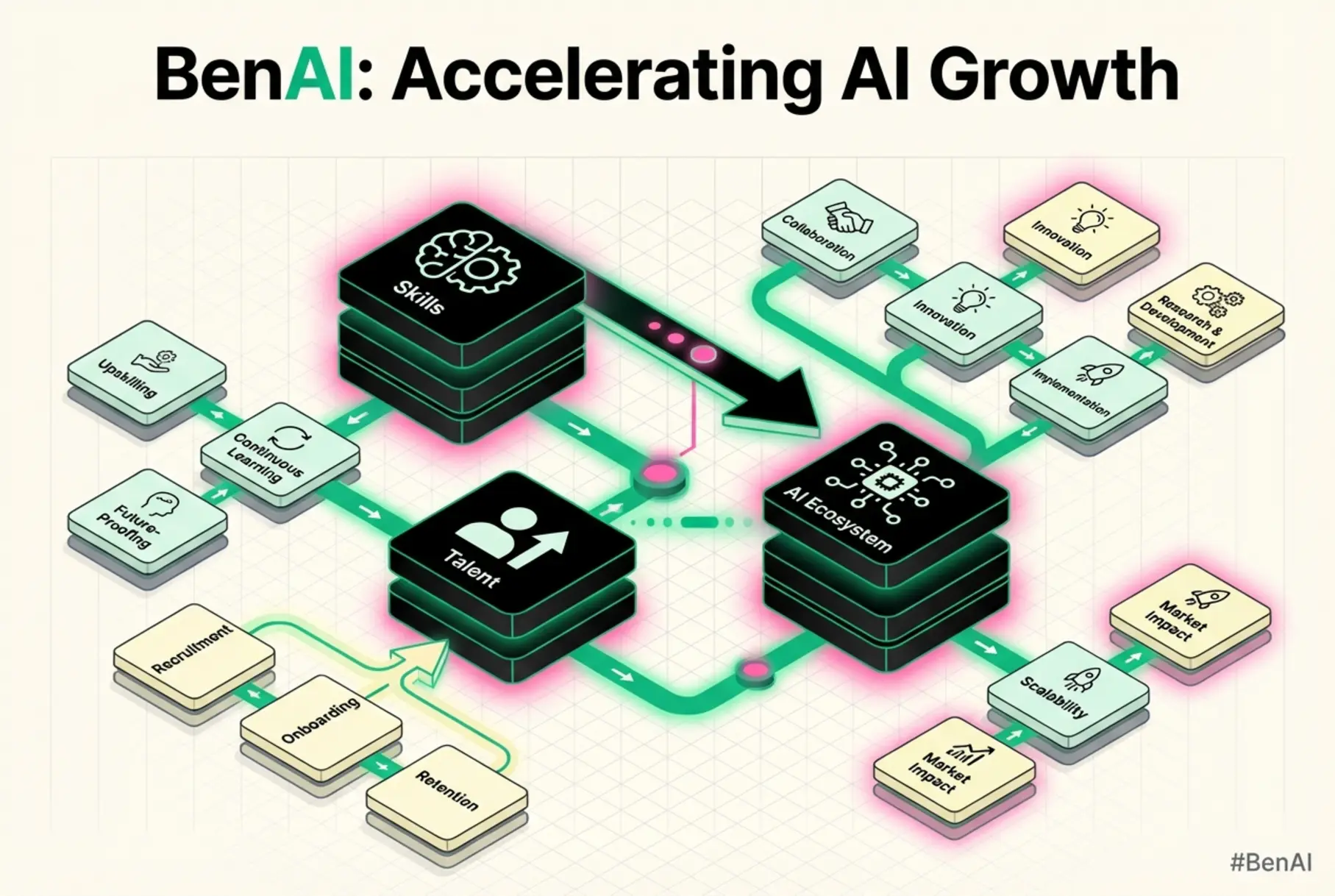

- Talent & Skills Gap: This is a silent crisis for many organizations. While the technology advances rapidly, the human expertise required to build, manage, and optimize sophisticated AI infrastructure is scarce. An alarming 86% of organizations worry about recruiting AI talent, and 61% report shortages of staff skilled in managing specialized AI infrastructure (Perplexity Source 2). Building an AI-ready workforce through strategic training and recruitment is as crucial as investing in hardware.
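The memory and networking bottlenecks above lend themselves to back-of-envelope arithmetic. The sketch estimates (a) per-token lower bounds when a dense model generates text at batch size 1, where each new token must stream every weight from memory and costs roughly 2 FLOPs per parameter, and (b) the bandwidth cost of synchronizing gradients across GPUs with a ring all-reduce, in which each GPU sends and receives about 2(N-1)/N times the gradient size over the East-West network. The model size, memory bandwidth, compute throughput, and link speeds are hypothetical round numbers, not the specifications of any real product, and the estimates ignore batching, caching, KV-cache traffic, and overlap of communication with computation.

```python
from dataclasses import dataclass


@dataclass
class Accelerator:
    # Hypothetical round numbers, not the spec sheet of any real device.
    mem_bandwidth_bytes_s: float = 2e12  # 2 TB/s high-bandwidth memory
    peak_flops: float = 300e12           # 300 TFLOP/s at 16-bit precision


def decode_latency_s(params: float, bytes_per_param: float, acc: Accelerator) -> dict:
    """Per-token lower bounds for batch-size-1 generation with a dense model.

    Memory time: every weight is read once per token.
    Compute time: roughly 2 FLOPs per parameter per token.
    Whichever bound is larger limits throughput.
    """
    memory_s = params * bytes_per_param / acc.mem_bandwidth_bytes_s
    compute_s = 2 * params / acc.peak_flops
    return {
        "memory_s": memory_s,
        "compute_s": compute_s,
        "bound": "memory" if memory_s > compute_s else "compute",
    }


def ring_allreduce_s(grad_bytes: float, n_gpus: int, link_bytes_s: float) -> float:
    """Bandwidth term of a ring all-reduce: each GPU moves 2*(N-1)/N * S bytes."""
    per_gpu_bytes = 2 * (n_gpus - 1) / n_gpus * grad_bytes
    return per_gpu_bytes / link_bytes_s


params = 7e9  # hypothetical 7B-parameter model with 16-bit (2-byte) weights
est = decode_latency_s(params, bytes_per_param=2, acc=Accelerator())
print(
    f"memory {est['memory_s'] * 1e3:.2f} ms vs compute "
    f"{est['compute_s'] * 1e3:.3f} ms per token -> {est['bound']}-bound"
)

grad_bytes = params * 2  # 16-bit gradients
for label, link in [("fast GPU interconnect (50 GB/s)", 50e9), ("100 Gb/s Ethernet (12.5 GB/s)", 12.5e9)]:
    seconds = ring_allreduce_s(grad_bytes, n_gpus=8, link_bytes_s=link)
    print(f"{label}: {seconds:.2f} s per gradient sync")
```

Under these assumptions the memory time dwarfs the compute time, which is why memory bandwidth rather than peak FLOPs often sets generation speed, and a four-times-slower link makes every training step's synchronization four times slower.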

Strategic Choices for AI-First Businesses

Successfully navigating the AI infrastructure landscape requires deliberate strategic choices that align with your business objectives.

Build vs. Buy Decisions

When developing your AI capabilities, a fundamental decision is whether to build custom infrastructure and solutions in-house or leverage existing cloud services and platforms.

- Build: Opting to build means significant investment in expertise, hardware, and ongoing maintenance. However, it offers maximum customization, control over data, and potentially lower costs at extreme scale. This is often the path for organizations with highly unique requirements or those operating in sensitive, regulated environments.

- Buy: Leveraging cloud providers (AWS, Azure, Google Cloud) or specialized AI platforms (like NVIDIA AI Enterprise) allows faster time-to-market, access to cutting-edge hardware, and reduced operational overhead. It shifts capital expenditure to operational expenditure. When selecting a cloud partner, consider factors beyond features alone.

This cloud comparison is a crucial tool in assessing the cost, performance, and compliance tradeoffs inherent in each provider, enabling decision-makers to select the optimal environment for their AI workloads.
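Cost is usually the first of these trade-offs to quantify. The sketch below compares owning a GPU server (purchase price amortized over its useful life, plus annual power, cooling, and maintenance) with renting comparable capacity by the hour, and finds the utilization at which owning breaks even. Every price, lifetime, and utilization figure is a hypothetical placeholder to replace with your own quotes, and the model deliberately ignores financing, staffing differences, and discounted cloud pricing such as reserved or committed-use plans.

```python
HOURS_PER_YEAR = 24 * 365


def owned_cost_per_used_hour(capex: float, life_years: float,
                             annual_opex: float, utilization: float) -> float:
    """Cost of each hour of useful work on owned hardware.

    Idle hardware still costs money, so the annual cost is spread only
    over the hours the hardware is actually busy.
    """
    annual_cost = capex / life_years + annual_opex
    return annual_cost / (HOURS_PER_YEAR * utilization)


def break_even_utilization(capex: float, life_years: float,
                           annual_opex: float, cloud_rate: float) -> float:
    """Utilization above which owning is cheaper than renting on demand."""
    annual_cost = capex / life_years + annual_opex
    return annual_cost / (cloud_rate * HOURS_PER_YEAR)


# Hypothetical figures for one multi-GPU server and a comparable cloud instance.
CAPEX = 250_000       # purchase price, USD
LIFE_YEARS = 4        # depreciation period
ANNUAL_OPEX = 40_000  # power, cooling, hosting, maintenance, USD per year
CLOUD_RATE = 30.0     # on-demand price, USD per hour

threshold = break_even_utilization(CAPEX, LIFE_YEARS, ANNUAL_OPEX, CLOUD_RATE)
print(f"Owning wins above ~{threshold:.0%} sustained utilization")
for u in (0.2, 0.5, 0.8):
    owned = owned_cost_per_used_hour(CAPEX, LIFE_YEARS, ANNUAL_OPEX, u)
    print(f"utilization {u:.0%}: owned ${owned:.2f}/h vs cloud ${CLOUD_RATE:.2f}/h")
```

The shape of the result is what matters: at low or bursty utilization renting tends to win, while sustained high utilization is where building starts to pay off, consistent with the "lower costs at extreme scale" point above.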

Open-Source vs. Closed-Source Models

The landscape of AI models is a mix of open and closed-source offerings, each with its own advantages.

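- Open-Source: Openly available models such as Llama 2 or Falcon offer transparency, flexibility, community support, and often lower initial costs. They allow deep customization and auditability, which is critical for explainability and compliance. Licenses vary, however: Llama 2, for example, ships under Meta's own community license rather than a standard open-source license.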

- Closed-Source: Proprietary models (e.g., from OpenAI) often provide state-of-the-art performance, robust APIs, and dedicated support. However, they come with higher costs, less transparency, and potential vendor lock-in.

A balanced approach often involves using open-source models for custom applications and fine-tuning with proprietary data, while leveraging closed-source models for tasks where raw performance is the absolute priority.
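One way to put this balanced approach into practice is a thin routing layer that picks a model per request. The sketch below encodes a hypothetical policy, not a reference design: the model identifiers, task categories, and the rule that requests containing personal data stay on a self-hosted model are illustrative assumptions, and a real router would also weigh measured cost, latency, and quality.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    task: str           # e.g. "summarize", "classify", "complex_reasoning"
    contains_pii: bool  # does the prompt include personal data?


# Hypothetical model identifiers; substitute the models you actually deploy.
SELF_HOSTED_FINETUNED = "self-hosted-open-model-finetuned"
CLOSED_API_MODEL = "closed-source-api-model"

# Tasks where, under this hypothetical policy, raw performance matters most.
PERFORMANCE_CRITICAL = {"complex_reasoning", "code_generation"}


def route(req: Request) -> str:
    """Choose a model for a request under the hypothetical policy above."""
    if req.contains_pii:
        # Sensitive data stays on infrastructure the organization controls.
        return SELF_HOSTED_FINETUNED
    if req.task in PERFORMANCE_CRITICAL:
        return CLOSED_API_MODEL
    # Routine work defaults to the cheaper, fine-tuned open model.
    return SELF_HOSTED_FINETUNED


print(route(Request("summarize", contains_pii=False)))
print(route(Request("complex_reasoning", contains_pii=False)))
print(route(Request("complex_reasoning", contains_pii=True)))
```

Keeping the policy in one small function makes it easy to audit and to change as model prices and capabilities shift.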

Hybrid Cloud Strategies

Given the specialized needs of AI workloads, many enterprises adopt a hybrid cloud strategy. This involves using a mix of on-premise infrastructure, private cloud environments, and public cloud services. A notable 48% of organizations already use hybrid cloud for AI data storage (Perplexity Source 1).

This approach allows businesses to:

- Keep sensitive data on-premise for security and compliance.

- Leverage public cloud for scalable, burstable AI training workloads.

- Optimize costs by running predictable workloads on owned infrastructure and dynamic ones in the cloud. We also understand the need to tune supporting tools, such as image optimization tools, in a hybrid setup for peak performance.
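The three placement rules above can be expressed as a simple policy function. This is a minimal sketch under assumed inputs: the `Workload` fields, the 70% "steady" threshold, the private-cloud fallback for sensitive data, and the example job names are all hypothetical. Real placement decisions would also account for data gravity, egress fees, and contractual constraints.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    ON_PREM = "on-premise"
    PRIVATE_CLOUD = "private cloud"
    PUBLIC_CLOUD = "public cloud"


@dataclass
class Workload:
    name: str
    sensitive_data: bool    # regulated or confidential data involved?
    avg_utilization: float  # expected average share of capacity used (0-1)
    bursty: bool            # short, spiky demand such as a training run?


STEADY_THRESHOLD = 0.7  # hypothetical: above this, owned capacity is assumed cheaper


def place(w: Workload, on_prem_capacity_free: bool) -> Target:
    # Rule 1: sensitive data stays on infrastructure the organization controls.
    if w.sensitive_data:
        return Target.ON_PREM if on_prem_capacity_free else Target.PRIVATE_CLOUD
    # Rule 2: bursty jobs use elastic public cloud capacity.
    if w.bursty:
        return Target.PUBLIC_CLOUD
    # Rule 3: predictable, high-utilization work runs on owned hardware if it fits.
    if w.avg_utilization >= STEADY_THRESHOLD and on_prem_capacity_free:
        return Target.ON_PREM
    return Target.PUBLIC_CLOUD


jobs = [
    Workload("patient-records-finetune", sensitive_data=True, avg_utilization=0.4, bursty=True),
    Workload("quarterly-model-retrain", sensitive_data=False, avg_utilization=0.2, bursty=True),
    Workload("24x7-recommendation-inference", sensitive_data=False, avg_utilization=0.85, bursty=False),
]
for job in jobs:
    print(f"{job.name} -> {place(job, on_prem_capacity_free=True).value}")
```

Ordering the rules so that data sensitivity is checked first ensures cost considerations can never override a compliance requirement.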

Future-Proofing Your Stack

The AI landscape evolves at a breathtaking pace. What's state-of-the-art today might be obsolete tomorrow. Future-proofing your infrastructure means building for adaptability. This includes:

- Embracing Agentic AI: The global enterprise agentic AI market is projected to reach $24.5 billion by 2030 (46.2% CAGR), requiring infrastructure for continuous learning and autonomous decision-making (Perplexity Source 4). Your stack must support the development and deployment of these sophisticated AI agents.

- Focusing on Efficiency: Workloads are shifting from intensive training toward far more widespread inference and operational use. This means optimizing infrastructure for low-latency, high-throughput inference rather than just raw training power. The inference cost of a system performing at the level of GPT-3.5 dropped more than 280-fold between late 2022 and late 2024, highlighting how rapidly efficiency is improving (Perplexity Source 3, Source 6).

- Modular Architecture: Designing your infrastructure with modular components allows for easier upgrades, integration of new technologies, and swapping out inefficient parts without disrupting the entire system.
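A common technique for high-throughput inference is dynamic batching: briefly holding incoming requests so the accelerator can process several at once, trading a small, bounded delay for better hardware utilization. The simulation below is a minimal sketch of that idea. `max_batch_size` and `max_wait_ms` are hypothetical parameter names (many model servers expose comparable settings under their own names), and the arrival times are made up.

```python
def form_batches(arrivals_ms: list[float], max_batch_size: int,
                 max_wait_ms: float) -> list[tuple[float, list[float]]]:
    """Group sorted request arrival times into batches.

    A batch opens with its first request and is dispatched either when it
    is full or when that first request has waited max_wait_ms, whichever
    comes first. Returns (dispatch_time, arrivals_in_batch) pairs.
    """
    batches = []
    i = 0
    while i < len(arrivals_ms):
        opened = arrivals_ms[i]
        deadline = opened + max_wait_ms
        batch = [opened]
        i += 1
        # Absorb requests that arrive before the deadline, up to the size cap.
        while (i < len(arrivals_ms) and len(batch) < max_batch_size
               and arrivals_ms[i] <= deadline):
            batch.append(arrivals_ms[i])
            i += 1
        # A full batch leaves immediately; a partial one waits out the deadline.
        dispatch = batch[-1] if len(batch) == max_batch_size else deadline
        batches.append((dispatch, batch))
    return batches


arrivals = [0, 1, 2, 3, 10, 30, 31]  # made-up request arrival times in ms
batches = form_batches(arrivals, max_batch_size=4, max_wait_ms=5)
for dispatch, batch in batches:
    print(f"dispatch at {dispatch} ms: {len(batch)} requests, "
          f"oldest waited {dispatch - batch[0]} ms")
```

Seven requests become three accelerator calls, and no request waits longer than `max_wait_ms`. Raising the wait limit fills batches more often at the cost of tail latency, which is the central tuning decision for inference serving.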

Implementation Roadmap: From Concept to Domination

Navigating these complex decisions requires a structured approach. Here's how to move from conceptual understanding to practical implementation:

- Assess Your Current State: Conduct a thorough audit of your existing infrastructure, data capabilities, and AI readiness. Identify bottlenecks, legacy systems, and skill gaps.

- Define Business Objectives & AI Use Cases: Clearly articulate what you want AI to achieve within your business. Are you automating AI SEO content generation and optimization processes, streamlining recruitment, or developing new AI-powered products? Your infrastructure must align with these goals.

- Design Your Target Architecture: Based on your objectives, design an optimal AI infrastructure stack. This involves choosing compute resources, data architecture patterns, MLOps platforms, and integration strategies.

- Prioritize & Pilot: Identify high-impact, achievable pilot projects. Start small, validate your assumptions, and demonstrate early ROI before scaling.

- Build & Integrate: Implement your new infrastructure components, integrating them with existing systems. This often involves significant data migration and API development efforts.

- Train Your Team: Invest in upskilling your workforce. Provide training on new tools, frameworks, and MLOps practices to ensure successful adoption and management.

- Monitor, Optimize & Iterate: AI infrastructure is not a set-it-and-forget-it deployment. Continuously monitor performance, costs, and model efficacy. Optimize resource utilization, fine-tune models, and iterate based on real-world feedback.
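For the monitoring step, one common way to detect that production data has drifted away from the training data is the Population Stability Index (PSI), which compares how a feature's values are distributed across bins in two samples. PSI is one example technique among several, not something this roadmap prescribes. The bin edges and sample values below are hypothetical, and the often-quoted rule of thumb that a PSI above about 0.2 signals significant drift is an assumption to calibrate against your own data.

```python
import bisect
import math


def bin_shares(values: list[float], edges: list[float]) -> list[float]:
    """Fraction of values falling in each bin defined by sorted interior edges."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect.bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def psi(expected: list[float], actual: list[float], edges: list[float],
        eps: float = 1e-4) -> float:
    """Population Stability Index: sum of (a - e) * ln(a / e) over bins."""
    total = 0.0
    for e, a in zip(bin_shares(expected, edges), bin_shares(actual, edges)):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        total += (a - e) * math.log(a / e)
    return total


EDGES = [20.0, 40.0, 60.0, 80.0]  # hypothetical bins for a 0-100 feature
training = [float(x) for x in range(100)]         # roughly uniform baseline
stable = [float(x) + 0.5 for x in range(100)]     # same distribution, tiny offset
shifted = [0.5 * x + 50 for x in range(100)]      # mass moved to the upper range

for name, sample in [("stable", stable), ("shifted", shifted)]:
    score = psi(training, sample, EDGES)
    status = "investigate" if score > 0.2 else "ok"
    print(f"{name}: PSI={score:.3f} ({status})")
```

Running a check like this on a schedule for key input features turns "continuously monitor" into a concrete alert that can trigger investigation or retraining.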

Making these strategic choices effectively will differentiate your business. Organizations that fail to address the skills gap (Perplexity Source 2) or overlook crucial details like memory limitations (Perplexity Source 1) will struggle to realize the full potential of AI.

The BenAI Difference: Your Partner in Building an AI-First Future

At BenAI, we understand that building a resilient, scalable, and ethically sound foundation for your AI-first ambitions is mission-critical. Our expertise in custom AI implementations, training, and consulting allows us to translate complex research into accessible, actionable strategies. We don't just recommend solutions; we help you integrate them, transforming your business into an AI-first entity.

Our approach emphasizes practical adoption, ensuring your AI infrastructure not only supports current needs but also future-proofs your organization against an ever-evolving technological landscape. We address the nuances that others overlook, from efficient data management to strategic cloud selection, ensuring your investment delivers maximum ROI.

Frequently Asked Questions About AI Infrastructure

Q1: What are the biggest cost drivers for AI infrastructure?

The biggest cost drivers typically include specialized hardware (GPUs, TPUs), cloud computing resources (especially for large-scale training), data storage and processing, and the recruitment and retention of skilled AI talent. Overlooked costs often include cooling, power consumption, and advanced networking infrastructure. Strategies that help manage costs include optimizing infrastructure for inference rather than training, an area where costs for foundation models have dropped significantly (Perplexity Source 3, Source 6), and using hybrid cloud models.

Q2: How can we ensure data privacy and compliance within our AI infrastructure?

Ensuring data privacy and compliance requires a multi-faceted approach. This includes strong data governance frameworks, encryption for data at rest and in transit, access controls, anonymization/pseudonymization techniques, and auditing mechanisms across your entire data pipeline and AI models. It's crucial to align your data architecture choices with regulatory requirements like GDPR, CCPA, and upcoming AI-specific legislation such as the EU AI Act.
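As an example of the pseudonymization techniques mentioned above, a keyed hash (HMAC) can replace direct identifiers with stable tokens, so records can still be joined across datasets without exposing the raw values. This is a minimal sketch: the identifier field names are hypothetical, the key would in practice be loaded from a secrets manager rather than written in source code, and pseudonymized data is generally still treated as personal data under GDPR, because whoever holds the key can re-link it.

```python
import hashlib
import hmac

# Hypothetical key for illustration only; load a real key from a secrets manager.
SECRET_KEY = b"replace-with-a-key-from-your-secrets-manager"

# Hypothetical fields treated as direct identifiers in this example.
IDENTIFIER_FIELDS = {"email", "phone"}


def pseudonymize_value(value: str, key: bytes = SECRET_KEY) -> str:
    """Deterministic keyed token: the same input and key always give the same token."""
    normalized = value.strip().lower()  # so trivial formatting differences still match
    return hmac.new(key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


def pseudonymize_record(record: dict) -> dict:
    """Return a copy of the record with identifier fields replaced by tokens."""
    return {
        k: pseudonymize_value(v) if k in IDENTIFIER_FIELDS and isinstance(v, str) else v
        for k, v in record.items()
    }


crm_row = {"email": "Jane.Doe@example.com", "plan": "pro", "monthly_spend": 120}
support_row = {"email": "jane.doe@example.com ", "tickets_open": 2}

crm_safe = pseudonymize_record(crm_row)
support_safe = pseudonymize_record(support_row)
# Both spellings map to the same token, so the rows can still be joined.
print(crm_safe["email"] == support_safe["email"])
```

A keyed HMAC is used instead of a plain hash because identifiers such as email addresses are easy to guess, and an unkeyed hash of a guessable value can be reversed by brute force.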

Q3: What is MLOps and why is it important for AI infrastructure?

MLOps (Machine Learning Operations) is a set of practices that aims to deploy and maintain machine learning models in production reliably and efficiently. It's crucial for AI infrastructure because it automates and standardizes the lifecycle of ML models, from experimentation and development to deployment, monitoring, and retraining. Without robust MLOps, scaling AI initiatives in an enterprise environment becomes incredibly challenging, leading to inefficient resource use, unreliable models, and compliance risks.
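A recurring MLOps pattern is an automated promotion gate: a retrained candidate replaces the production model only if it meets agreed thresholds. The sketch below is hypothetical throughout: the metric names, the minimum-improvement margin, the latency budget, and the model IDs are illustrative, and real pipelines usually implement this step inside a CI/CD system or model registry rather than as a standalone function.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EvalResult:
    model_id: str
    accuracy: float        # on a fixed, held-out evaluation set
    p95_latency_ms: float  # measured in a staging environment


# Hypothetical promotion policy.
MIN_ACCURACY_GAIN = 0.005  # candidate must beat production by at least 0.5 points
LATENCY_BUDGET_MS = 200.0  # service-level objective for the endpoint


def should_promote(candidate: EvalResult, production: EvalResult) -> tuple[bool, str]:
    """Return (decision, reason) so every decision leaves an audit trail."""
    if candidate.p95_latency_ms > LATENCY_BUDGET_MS:
        return False, (f"{candidate.model_id}: p95 latency "
                       f"{candidate.p95_latency_ms} ms exceeds budget")
    gain = candidate.accuracy - production.accuracy
    if gain < MIN_ACCURACY_GAIN:
        return False, (f"{candidate.model_id}: accuracy gain {gain:+.3f} "
                       f"below required {MIN_ACCURACY_GAIN}")
    return True, f"{candidate.model_id}: promoted (accuracy {gain:+.3f})"


prod = EvalResult("churn-v7", accuracy=0.912, p95_latency_ms=120.0)
candidates = [
    EvalResult("churn-v8", accuracy=0.914, p95_latency_ms=110.0),   # too small a gain
    EvalResult("churn-v9", accuracy=0.931, p95_latency_ms=260.0),   # too slow
    EvalResult("churn-v10", accuracy=0.925, p95_latency_ms=130.0),  # passes
]
for cand in candidates:
    print(should_promote(cand, prod))
```

Returning a reason alongside the decision supports the compliance point above: every promotion or rejection can be logged and explained later.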

Q4: We're a mid-sized company. Do we really need enterprise-grade AI infrastructure?

The need for "enterprise-grade" infrastructure depends on your AI ambitions and current scale. Even mid-sized companies aiming to be "AI-first"—automating core processes, scaling operations, and integrating AI deeply—will benefit from robust infrastructure design. This doesn't necessarily mean owning all hardware, but rather strategically leveraging cloud services, adopting MLOps practices, and carefully designing your data architecture to ensure scalability, reliability, and security as your AI adoption grows.

Q5: How can a specialized AI solutions provider like BenAI help us with our infrastructure challenges?

BenAI acts as your trusted advisor and implementation partner. We assess your current needs and future goals, design tailored AI infrastructure blueprints (from data architecture to cloud strategy), implement custom AI solutions, and provide comprehensive training for your teams. Our focus is on practical adoption, ensuring your infrastructure choice directly supports your business objectives, mitigates risks (like the talent gap), and future-proofs your investment against the rapidly evolving AI landscape.

Ready to build a robust, future-proof AI infrastructure that drives your business forward? Let's discuss your unique challenges and how BenAI can help.

