You’re beyond the initial hype of AI. You’ve seen its potential, maybe even experimented with a few tools. Now, as you evaluate how to integrate AI deep into your business operations, a critical question emerges: how do you build an AI infrastructure that can truly scale, protect your interests, and deliver on its promise?

This isn't about choosing a single AI tool; it’s about crafting a robust, resilient technology stack that supports your AI-first ambitions, transforming your business with automation and efficiency. The challenge isn't just adopting AI but building the foundational elements that allow it to thrive, adapt, and grow with your organization.

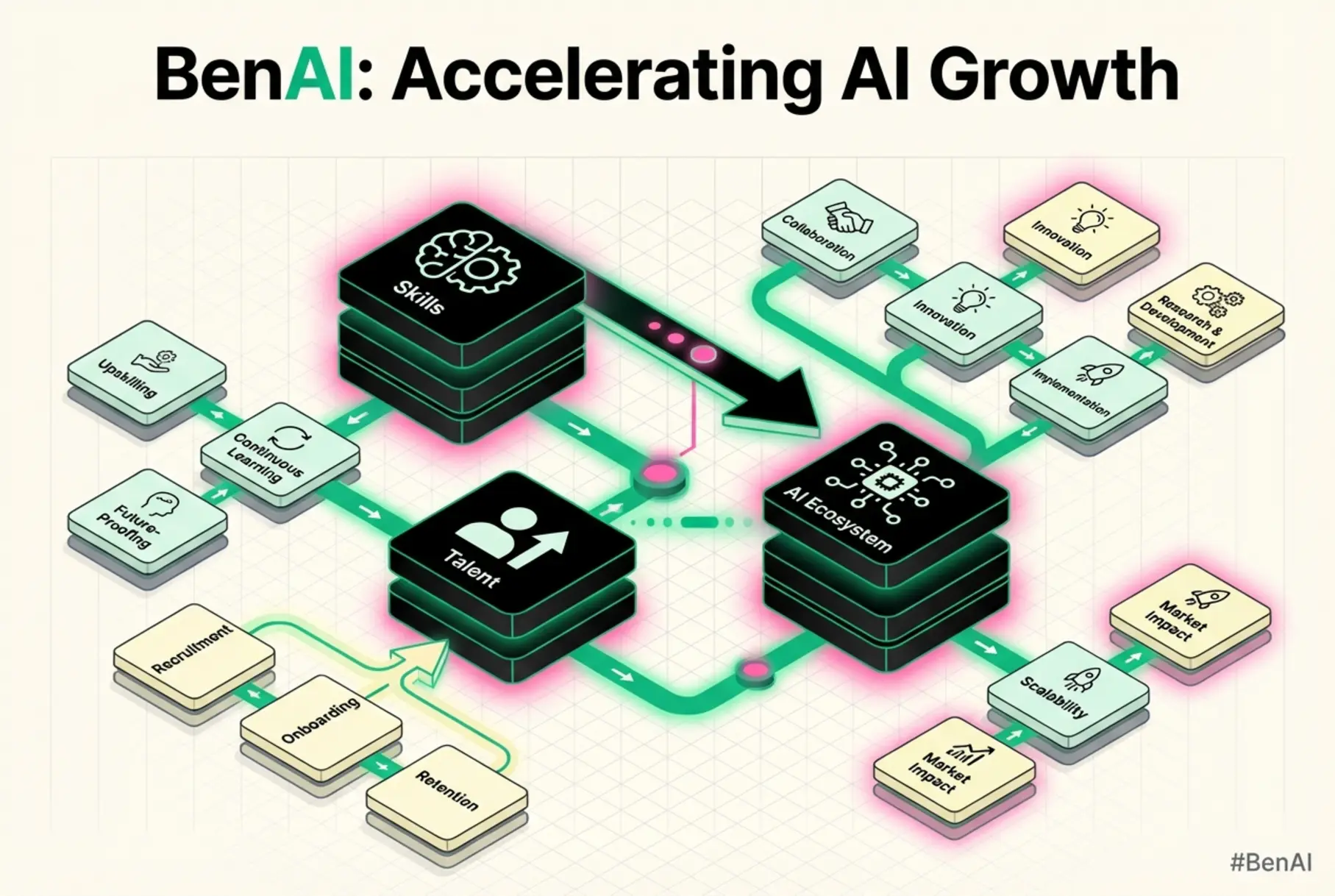

At BenAI, we understand that decision-makers like you need more than just theoretical concepts. You need authoritative guidance, backed by research and practical frameworks, to navigate the complexities of data strategies, cloud deployments, tool selection, and security. We're here to provide that trusted resource, empowering you to make confident decisions about your AI future.

The Scaling Imperative: Why a Robust AI Infrastructure Matters

The AI landscape is evolving at a breakneck pace. Training compute for AI models now doubles every five months, significantly outstripping traditional technological growth rates like Moore's Law. This exponential growth isn't just about processing power; it’s about the sheer volume of data, the complexity of models, and the demand for real-time inference.
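To make that doubling rate concrete, here is a quick back-of-the-envelope sketch that converts a doubling period into a yearly growth multiple. The five-month figure comes from the paragraph above; reading Moore's Law as a doubling every 24 months is an assumption made for comparison only.

```python
def annual_growth_factor(doubling_months: float) -> float:
    """Growth multiple over 12 months for a quantity that doubles every `doubling_months`."""
    return 2 ** (12 / doubling_months)


compute = annual_growth_factor(5)   # training compute, per the figure cited above
moore = annual_growth_factor(24)    # assumption: Moore's Law read as a 24-month doubling

print(f"Training compute: ~{compute:.1f}x per year")
print(f"24-month doubling: ~{moore:.1f}x per year")
```

Under these assumptions, compute demand grows roughly fivefold each year while a 24-month doubling yields about 1.4x, which is why capacity planning on a traditional hardware refresh cycle tends to fall behind.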

For your business to truly embrace an "AI-first" mindset, you need more than just isolated AI applications. You require a unified, scalable AI backbone—a foundational technology stack that can handle increasing workloads, adapt to new AI advancements, and secure your most valuable assets. The focus has shifted from mere AI training to scaling intelligence across every business function, demanding robust infrastructure that can support this transition.

While 78% of enterprises reported AI use in 2024, roughly one-third still face significant challenges in scaling AI across their organizations. This gap highlights a critical need for strategic infrastructure planning rather than ad-hoc adoption. Your ability to scale AI effectively will determine your competitive edge, drive efficiency, and unlock new growth opportunities.

Understanding the AI Infrastructure Landscape: Trends & Tiers

To build a scalable AI backbone, you first need to understand its layered components and the overarching trends shaping the infrastructure market. Think of it not as a monolithic entity, but as a carefully constructed stack in which each layer depends on the others.

The market itself is transforming rapidly: investment is projected to reach multiple trillions of dollars by 2030, with a substantial portion dedicated to data center infrastructure alone. This signals a clear shift toward significant capital allocation for the systems that underpin AI.

Here's a breakdown of the core layers within a comprehensive AI stack:

- Hardware Layer: This is the bedrock, comprising GPUs, TPUs, specialized AI chips, CPUs, and networking infrastructure. The performance of your AI models is directly tied to the processing power and efficiency at this layer.

- Data Layer: Ingesting, storing, processing, and managing vast amounts of data—both structured and unstructured—is crucial. This includes data lakes, data warehouses, streaming platforms, and robust data governance tools.

- Model/Platform Layer: This layer houses the core AI and machine learning models, along with platforms for model development, training, validation, and deployment (e.g., TensorFlow, PyTorch, cloud ML platforms).

- Application Layer: Where AI models are integrated into business applications and workflows, creating solutions for marketing, recruiting, customer service, and more. This is where the tangible business value is realized.

- MLOps Layer: This critical layer orchestrates the entire AI lifecycle, encompassing continuous integration/continuous delivery (CI/CD) for models, monitoring, versioning, data pipeline management, and reproducibility. It bridges the gap between development and operations for AI.

Crafting a Robust Data Strategy for AI: Beyond the Basics

Ask any AI expert about the biggest hurdle to successful implementation, and data will inevitably be at the top of the list. A robust data strategy, explicitly designed for AI, is not merely a nice-to-have; it's the fundamental engine driving scalable and intelligent systems. Without it, even the most cutting-edge AI models are rendered ineffective. Research indicates that the volume of training datasets doubles approximately every eight months, emphasizing the constant need for sophisticated data management.

Your data strategy must address more than just collection. It needs to encompass quality, governance, accessibility, and security from ingestion to model deployment.

What often gets overlooked is the quantifiable impact of a poor data strategy on AI failure rates. Unclean, biased, or incomplete data leads directly to inaccurate predictions, operational inefficiencies, and ultimately, a lack of trust in your AI systems. For instance, imagine an AI recruiting solution making biased hiring recommendations due to skewed training data—the reputational and financial costs can be substantial.
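Two of the problems named above, incomplete data and skewed data, are easy to measure before any model is trained. The following is a minimal sketch, not a full data-quality suite; the applicant records and field names are hypothetical and exist only for illustration.

```python
from collections import Counter


def missing_rate(records, field):
    """Share of records where `field` is absent or None (expects a non-empty list)."""
    missing = sum(1 for r in records if r.get(field) is None)
    return missing / len(records)


def group_shares(records, field):
    """Share of each value of `field` among the records that have one."""
    values = [r[field] for r in records if r.get(field) is not None]
    counts = Counter(values)
    return {value: n / len(values) for value, n in counts.items()}


# Hypothetical applicant records, for illustration only.
applicants = [
    {"years_experience": 5, "region": "north", "hired": 1},
    {"years_experience": None, "region": "north", "hired": 0},
    {"years_experience": 3, "region": "north", "hired": 1},
    {"years_experience": 8, "region": "south", "hired": 0},
]

print(missing_rate(applicants, "years_experience"))  # 0.25
print(group_shares(applicants, "region"))            # {'north': 0.75, 'south': 0.25}
```

A high missing rate or a heavily lopsided group share does not prove a model will be biased, but it is a cheap early signal that the training set deserves a closer look.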

To effectively harness AI, you need a proactive approach to emerging data types. Generative AI, for example, thrives on multimodal data—combining text, images, audio, and video. Your strategy must anticipate and accommodate these, ensuring your infrastructure is ready for the future.

We recommend a unique framework for building an AI-Ready Data Strategy that emphasizes cross-functional collaboration and aligns data initiatives directly with AI use cases:

- Define AI Use Cases: Clearly articulate the business problems AI will solve and the data required for each.

- Assess Data Readiness: Evaluate existing data sources against AI needs for quality, volume, and accessibility.

- Establish Data Governance: Implement clear policies for data ownership, access, privacy, and compliance.

- Implement Data Pipelines: Build automated processes for data ingestion, cleaning, transformation, and storage.

- Ensure Data Security: Protect data throughout its lifecycle with robust encryption, access controls, and compliance measures.

- Cultivate Data Literacy: Train teams to understand, utilize, and contribute to the data ecosystem.

Rating your organization against each of these six steps gives you a consolidated readiness score, helping you prioritize your infrastructure efforts and track your progress. Data governance, in particular, is a trust builder, assuring stakeholders that your AI systems are built on a foundation of integrity and compliance. For more on optimizing your data for AI, explore our guide on AI Data Management Automation.
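One simple way to arrive at a consolidated readiness score is a weighted average of self-assessed ratings across the six steps. The sketch below is an illustration under stated assumptions: the 0 to 5 rating scale, the equal default weights, the gap threshold, and the dimension names are all hypothetical choices, not a prescribed scoring method.

```python
# One key per framework step; the names are illustrative.
DIMENSIONS = [
    "use_cases", "data_readiness", "governance",
    "pipelines", "security", "data_literacy",
]


def readiness_score(ratings, weights=None, max_rating=5):
    """Weighted average of ratings, expressed as a percentage of the maximum."""
    weights = weights or {d: 1.0 for d in DIMENSIONS}
    missing = [d for d in DIMENSIONS if d not in ratings]
    if missing:
        raise ValueError(f"missing ratings for: {missing}")
    total_weight = sum(weights[d] for d in DIMENSIONS)
    weighted = sum(weights[d] * ratings[d] for d in DIMENSIONS)
    return 100 * weighted / (total_weight * max_rating)


def weakest_dimensions(ratings, threshold=3):
    """Dimensions rated below the threshold, sorted by name."""
    return sorted(d for d in DIMENSIONS if ratings[d] < threshold)


# Hypothetical self-assessment on a 0-5 scale.
ratings = {
    "use_cases": 4, "data_readiness": 3, "governance": 2,
    "pipelines": 3, "security": 4, "data_literacy": 2,
}

print(readiness_score(ratings))      # 60.0
print(weakest_dimensions(ratings))   # ['data_literacy', 'governance']
```

The single number is useful for tracking progress over time, while the list of weakest dimensions tells you where to invest first.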

Cloud vs. On-Premise vs. Hybrid: The Strategic AI Deployment Decision

One of the most critical decisions in building your AI infrastructure is choosing where your AI workloads will reside. This isn't just a technical specification; it’s a strategic choice impacting cost, scalability, regulatory compliance, and your overall control.

- Cloud Deployment: Offers unparalleled scalability, flexibility, and access to cutting-edge AI services without significant upfront capital investment. Providers like Google Cloud offer expansive resources, including free AI tools to get started. The trade-offs can include higher long-term operational costs, potential vendor lock-in, and data sovereignty concerns, especially for global operations.

- On-Premise Deployment: Provides maximum control over data, security, and hardware customization. This is often preferred for highly sensitive data, strict regulatory requirements, or when leveraging existing infrastructure investments. However, it demands substantial upfront capital, ongoing maintenance, and internal expertise for management and scaling.

- Hybrid Deployment: A pragmatic approach combining the best of both worlds. It allows you to run critical, sensitive, or latency-dependent workloads on-premise while leveraging the cloud's agility and scalability for less sensitive or burst capacity needs. For instance, data preprocessing might happen on-prem, with model training dispatched to cloud GPUs, retaining control over sensitive raw data.
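The hybrid placement logic described above can be written down as an explicit rule, which makes it easier to review and audit. This is a minimal sketch: the `Workload` fields, the workload names, and the two-way `on_prem`/`cloud` outcome are assumptions for illustration, and it assumes training runs on preprocessed data that is cleared to leave your premises.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    name: str
    handles_sensitive_data: bool
    latency_critical: bool


def choose_placement(workload: Workload) -> str:
    """Keep sensitive or latency-critical work on-premise; send the rest to the cloud."""
    if workload.handles_sensitive_data or workload.latency_critical:
        return "on_prem"
    return "cloud"


# Hypothetical workloads, for illustration only.
workloads = [
    Workload("raw-data-preprocessing", handles_sensitive_data=True, latency_critical=False),
    Workload("model-training", handles_sensitive_data=False, latency_critical=False),
    Workload("realtime-scoring", handles_sensitive_data=False, latency_critical=True),
]

placements = {w.name: choose_placement(w) for w in workloads}
print(placements)
```

In practice the rule would also weigh cost and regulatory region, but even a two-condition policy like this one forces the placement criteria to be stated rather than implied.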

The trend of "cloud repatriation" underscores the complexities of this decision. S&P Global Market Intelligence reports that approximately 80% of enterprises anticipate bringing some AI workloads back from the public cloud to on-premises or colocation data centers. This isn't a rejection of the cloud but a refinement of strategy, optimizing for cost, latency, and compliance.

Consider this side-by-side comparison to aid your decision:

For enterprises managing diverse data requirements and computational loads, a hybrid approach often presents the most resilient and cost-effective path. It offers the flexibility to tailor deployment models to specific use cases and regulatory environments. Dive deeper into optimizing your data architecture with our insights on Hybrid Cloud Data Architecture.

Selecting the Right AI Tools & Platforms: A Competitive Edge

The explosion of AI tools and platforms can lead to significant "tool fatigue." As you evaluate solutions, it’s not enough to simply list features; you need to understand how each tool fits into your overall strategy, supports your specific AI use cases, and integrates seamlessly with your existing technology stack. This evaluation is where you separate hype from actual value.

For instance, companies like Lindy.ai offer platforms that review a wide range of AI tools, categorizing them by use case and features. While helpful, your decision-making needs to go beyond simple comparisons.

Key decision factors for selecting AI tools and platforms include:

- Integration Capabilities: Can the tool easily integrate with your data sources, existing CRMs, marketing automation platforms, or recruiting systems? A fragmented toolchain decreases efficiency.

- Customizability and Flexibility: Can it be tailored to your unique business logic and specific AI models? Generic tools often fall short for complex, nuanced challenges.

- Ecosystem Support and Community: A vibrant community and extensive documentation accelerate adoption and problem-solving.

- Scalability and Performance: Can it handle growing data volumes and an increasing number of AI agents or models in production without performance degradation?

- Vendor Lock-in Risk: Evaluate how easily you could migrate away from a platform should your needs change or a better alternative emerge.

- Cost-Benefit Analysis: Consider not just licensing fees but also infrastructure costs, training time, and ongoing maintenance.

Here's an evaluation matrix to help you navigate:

Choosing tools that offer open APIs, support custom algorithms, and provide robust model governance features will give you a competitive edge. This ensures your AI investment is future-proof and truly aligned with your specific business needs. For insights into building an integrated AI ecosystem, consider our guide on AI Agent Ecosystems.
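The decision factors listed earlier in this section can be combined into a weighted decision matrix. The sketch below shows one way to do that; the weights, the 1 to 5 scores, and the tool names are hypothetical, and vendor lock-in risk is expressed as a "portability" score so that higher is always better.

```python
# Hypothetical weights (they sum to 1.0); adjust to your own priorities.
WEIGHTS = {
    "integration": 0.25,
    "customizability": 0.15,
    "ecosystem": 0.10,
    "scalability": 0.20,
    "portability": 0.15,   # inverse of vendor lock-in risk
    "cost_benefit": 0.15,
}


def weighted_score(scores):
    """Weighted sum of 1-5 criterion scores for one tool."""
    return sum(WEIGHTS[criterion] * scores[criterion] for criterion in WEIGHTS)


# Hypothetical candidates and scores, for illustration only.
candidates = {
    "Tool A": {"integration": 5, "customizability": 3, "ecosystem": 4,
               "scalability": 4, "portability": 2, "cost_benefit": 3},
    "Tool B": {"integration": 3, "customizability": 4, "ecosystem": 3,
               "scalability": 4, "portability": 5, "cost_benefit": 4},
}

# Highest weighted score first.
ranking = sorted(
    ((name, weighted_score(scores)) for name, scores in candidates.items()),
    key=lambda item: item[1],
    reverse=True,
)

for name, score in ranking:
    print(f"{name}: {score:.2f}")
```

With these example numbers, the tool with stronger portability edges out the one with better integration, which shows how much the outcome depends on the weights you choose. Agree on the weights before scoring any vendor.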

Ensuring AI System Security & Resilience: A Proactive Playbook

The integration of AI into core business processes introduces a new frontier of security challenges. Your AI infrastructure is only as strong as its weakest link, and overlooking security during the evaluation phase can lead to significant financial and reputational risks. IBM, for instance, emphasizes "secure by design" principles and cyber resilience as foundational elements for AI adoption.

Traditional cybersecurity measures are often insufficient for AI systems, which face unique vulnerabilities such as:

- Adversarial Attacks: Malicious inputs designed to trick AI models into making incorrect predictions (e.g., in image recognition, fraud detection).

- Model Poisoning: Contaminating training data to compromise the integrity of the AI model.

- Data Exfiltration: Unauthorized access to sensitive data used for training or inference, especially problematic with large language models.

- Bias Exploitation: Manipulating inherent biases in models to achieve targeted (malicious) outcomes.

To build a truly resilient AI backbone, you need a proactive, multi-layered security strategy that covers the entire AI lifecycle. This strategic playbook extends beyond generic IT security to address AI-specific risks:

- Secure Data Ingestion & Storage: Implement robust encryption, access controls, and auditing for all data fed into and generated by AI models.

- Model Governance & Versioning: Maintain strict control over model development, ensuring integrity, lineage, and explainability.

- Threat Modeling for AI: Identify potential attack vectors specific to your AI models and deploy corresponding safeguards.

- Adversarial Robustness Training: Incorporate techniques to make your models more resistant to adversarial attacks.

- Continuous Observability & Monitoring: Implement real-time monitoring of AI model performance, data drifts, and unusual behaviors to detect anomalies quickly.

- Incident Response Playbook: Develop specific protocols for identifying, containing, and recovering from AI-specific security incidents.

- Ethical AI Deployment: Integrate ethical guidelines and fairness checks to prevent unintended biases and ensure responsible AI use.

This staged security playbook emphasizes the importance of moving beyond theoretical concepts to practical, measurable improvements in security posture. Implementing these measures not only protects your assets but also builds trust with customers, partners, and regulators, validating your commitment to responsible AI adoption.
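Monitoring for data drift, one of the playbook items above, can start with a single statistic. The sketch below uses the Population Stability Index (PSI), a common drift measure, as one possible choice; the bin count, the small floor used to avoid taking the log of zero, and the sample data are assumptions for illustration.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a baseline sample and a recent sample of one numeric feature.

    Bins are equal-width over the baseline's range; values outside that
    range fall into the first or last bin. Both samples must be non-empty.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor keeps empty bins from producing log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical feature values: training-time baseline vs. shifted production data.
baseline = list(range(100))
shifted = [v + 30 for v in baseline]

print(population_stability_index(baseline, baseline))  # 0.0
print(population_stability_index(baseline, shifted) > 0.2)  # True
```

The 0.2 cutoff here is a rule-of-thumb assumption, not a standard; tune the alert threshold per feature based on how your models actually respond to drift.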

Building a Resilient AI Backbone: Integration & Optimization Best Practices

A truly scalable AI infrastructure is more than just a collection of powerful components; it's a seamlessly integrated ecosystem. The real value comes from how these disparate parts—data pipelines, cloud services, specialized tools, and security measures—work together harmoniously.

Integration is often where enterprise AI projects falter. You might have world-class AI models, but if they can't access clean data efficiently or are siloed from your core business applications, their impact will be severely limited. Best practices for integration include:

- API-First Design: Prioritize tools and platforms that offer robust, well-documented APIs to facilitate smooth communication and rapid integration. This also helps with future compatibility.

- Modular Architecture: Design your AI stack with loosely coupled components. This allows you to upgrade, replace, or scale individual parts without destabilizing the entire system.

- Standardized Data Formats: Enforce consistent data formats and schemas across your data pipelines to reduce integration friction and ensure data quality.

- MLOps for Automation: Leverage MLOps practices to automate the end-to-end lifecycle of your AI models, from development and deployment to monitoring and retraining. This is crucial for managing the complexity of many models in production.
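As a small illustration of modular, loosely coupled design, the sketch below has application code depend on an interface rather than on a specific model. The `TextModel` interface, both model classes, and the ticket-triage scenario are hypothetical names invented for this example.

```python
from typing import Protocol


class TextModel(Protocol):
    """Anything with a matching predict() method satisfies this interface."""

    def predict(self, text: str) -> str: ...


class KeywordModel:
    """Hypothetical rule-based component."""

    def predict(self, text: str) -> str:
        return "urgent" if "outage" in text.lower() else "routine"


class LengthModel:
    """Hypothetical drop-in replacement with different internals."""

    def predict(self, text: str) -> str:
        return "urgent" if len(text) > 200 else "routine"


def triage(ticket: str, model: TextModel) -> str:
    """Application code knows only the interface, not the implementation."""
    return f"ticket routed as {model.predict(ticket)}"


print(triage("Database outage in region 2", KeywordModel()))
print(triage("Password reset request", LengthModel()))
```

Because `triage` only relies on `predict`, either model can be upgraded or replaced without touching the calling code, which is the property the modular architecture bullet describes.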

Beyond integration, continuous optimization is key to maintaining a cost-effective and high-performing AI backbone. Power consumption for training frontier AI models doubles annually, highlighting the urgent need for efficiency. Strategies include:

- Resource Monitoring and Cost Management: Implement tools to track compute usage, storage, and networking costs across all AI workloads. Identify and eliminate idle resources.

- Performance Tuning: Regularly analyze model inference times, data processing speeds, and application response times to pinpoint bottlenecks and optimize performance.

- Infrastructure as Code (IaC): Use IaC principles to manage and provision your AI infrastructure, ensuring consistency, reproducibility, and efficient resource allocation.
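Identifying idle resources, as suggested above, can begin with a simple filter over usage records. This is a sketch under stated assumptions: the record fields, the 5% utilization cutoff, the 24-hour observation window, and the resource names are all hypothetical, and in practice the records would come from your cloud provider's or cluster's monitoring export.

```python
def find_idle(resources, max_utilization=0.05, min_hours=24):
    """Resources that stayed at or below the utilization cutoff for long enough to judge."""
    return [
        r for r in resources
        if r["avg_utilization"] <= max_utilization and r["hours_observed"] >= min_hours
    ]


def idle_spend(resources):
    """Cost accrued by the given resources over their observation windows."""
    return sum(r["hours_observed"] * r["hourly_cost"] for r in resources)


# Hypothetical usage records, for illustration only.
usage = [
    {"name": "gpu-train-01", "avg_utilization": 0.82, "hours_observed": 72, "hourly_cost": 3.0},
    {"name": "gpu-dev-07", "avg_utilization": 0.01, "hours_observed": 96, "hourly_cost": 3.0},
    {"name": "gpu-new-02", "avg_utilization": 0.00, "hours_observed": 2, "hourly_cost": 3.0},
]

idle = find_idle(usage)
print([r["name"] for r in idle])  # ['gpu-dev-07']
print(idle_spend(idle))           # 288.0
```

The minimum observation window keeps freshly provisioned machines from being flagged before they have had a chance to do any work.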

By focusing on thoughtful integration and continuous optimization, you build an AI backbone that is not only powerful but also adaptable, efficient, and resilient enough to support your long-term AI-first vision.

The Future of Scalable AI: Emerging Technologies & Strategic Foresight

The AI journey is continuous. As you solidify your current AI infrastructure, it's vital to keep an eye on emerging trends that will shape its future:

- Edge AI: Deploying AI models closer to the data source (e.g., on IoT devices, local servers) to reduce latency, enhance privacy, and lower bandwidth costs. This will require new considerations for distributed management and security.

- Trusted and Responsible AI: Increasing emphasis on AI ethics, fairness, transparency, and accountability. Your infrastructure will need to incorporate tools for bias detection, explainability (XAI), and adherence to AI governance frameworks.

- AI-Driven Development: AI assisting in its own development, from code generation to automated testing and optimization, further accelerating the pace of innovation.

Building a scalable AI infrastructure is a strategic investment in your business's future. It demands a holistic approach, careful evaluation of technologies, and a deep understanding of your unique operational needs.

Frequently Asked Questions (FAQ)

Q1: How do I know if my current infrastructure is "AI-ready"?

An AI-ready infrastructure can handle large volumes of diverse data, provides scalable compute resources, supports continuous model deployment (MLOps), and has robust data governance and security measures in place. Our data-readiness framework can help you assess your current capabilities and identify gaps.

Q2: What is the most common mistake companies make when building AI infrastructure?

The most common mistake is underestimating the complexity of data strategy and governance. Many focus solely on powerful models or compute, neglecting the foundational work of ensuring clean, accessible, and secure data, which is critical for AI success. Another frequent error is overlooking the long-term operational costs and integration challenges.

Q3: Is a hybrid cloud model truly more cost-effective than pure cloud for AI?

Often, yes. While public cloud offers immediate scalability, the long-term operational costs for sustained, large-scale AI workloads can become very high. A hybrid model allows you to optimize by repatriating predictable or highly sensitive workloads to more cost-efficient on-premises solutions, while reserving cloud for burst capacity or specialized services. This strategy is also driven by data sovereignty and latency considerations.
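A rough break-even calculation shows when a sustained workload starts to favor on-premises hardware. Every number below is hypothetical and chosen only to make the arithmetic easy to follow; substitute your own quotes, and note that the sketch ignores staffing, depreciation, and hardware refresh costs.

```python
def break_even_hours(upfront_cost, cloud_hourly, onprem_hourly):
    """Usage hours after which on-prem becomes cheaper than cloud.

    Returns None when on-prem running costs are not lower than cloud,
    because the upfront investment is then never recovered.
    """
    hourly_saving = cloud_hourly - onprem_hourly
    if hourly_saving <= 0:
        return None
    return upfront_cost / hourly_saving


# Hypothetical figures: hardware purchase vs. per-GPU-hour running costs.
hours = break_even_hours(upfront_cost=30_000, cloud_hourly=3.00, onprem_hourly=0.50)
years_at_full_use = hours / (24 * 365)

print(hours)                        # 12000.0
print(round(years_at_full_use, 2))  # 1.37
```

The key variable is utilization: a GPU that runs around the clock reaches break-even far sooner than one used a few hours a day, which is why predictable, sustained workloads are the usual candidates for repatriation.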

Q4: How do I manage vendor lock-in when selecting AI tools and platforms?

To mitigate vendor lock-in, prioritize platforms that support open standards and offer robust APIs for data and model portability, and define a clear exit strategy for migrating your data and models before you commit. Favor modular architectures and avoid single-vendor solutions for critical components where possible. Explore our guide on AI SEO Marketing Stack Integration for specific insights into tool integration.

Q5: What are the most critical security considerations unique to AI systems?

Beyond traditional cybersecurity, AI systems face unique threats like adversarial attacks (manipulating inputs to confuse models), model poisoning (corrupting training data), and privacy breaches if sensitive data leaks from models. Securing AI requires specialized expertise in data integrity, model validation, and continuous monitoring for AI-specific anomalies, as outlined in our AI security playbook.

Ready to architect your AI future with confidence? At BenAI, we partner with businesses like yours to design, implement, and optimize scalable AI infrastructures. From developing a robust data strategy to integrating the right tools and fortifying your systems against emerging threats, we provide the world-class expertise and hands-on guidance you need to become an AI-first company.
