Imagine a world where AI-powered insights revolutionize your business, from predicting market trends to personalizing customer experiences. Now, imagine a different scenario: that same powerful AI is poised to analyze your most sensitive customer records, proprietary financial data, or protected health information. Suddenly, the promise of AI collides with the unyielding demands of data privacy and compliance.

This isn't a hypothetical. It's the daily reality for countless businesses across industries like healthcare, finance, and government. They crave the transformative power of artificial intelligence, but their critical, sensitive data cannot—under any circumstances—leave their secure, on-premise infrastructure. So, how do you harness cutting-edge AI without compromising ironclad data security?

The answer lies in a sophisticated dance between your private data centers and the scalable power of the cloud: Hybrid Cloud Data Architecture for AI with On-Premise Sensitive Data Requirements. This isn't just about moving some data to the cloud; it's about strategically orchestrating workloads, safeguarding privacy, and building an AI foundation that respects both innovation and regulation.

Why "Hybrid" is the AI Answer for Sensitive Data

For many organizations, the knee-jerk reaction to sensitive data is to keep everything on-premise. While this maximizes control, it often bottlenecks AI development. Training advanced machine learning models demands massive computational power, specialized hardware (like GPUs), and the ability to scale resources up and down rapidly—capabilities that cloud providers excel at offering on-demand.

Conversely, public cloud environments inherently introduce concerns about data sovereignty, access controls, and potential breaches, making them a non-starter for raw, sensitive data.

This is where hybrid cloud shines. It allows businesses to maintain core sensitive data assets within their controlled, on-premise environment while strategically leveraging public cloud resources for compute-intensive tasks, scaling, and accessing specialized AI services. It's about getting the best of both worlds: the security and control of private infrastructure, married with the agility and scale of the public cloud.

The Shared Responsibility Model: A Core Principle

Understanding the Shared Responsibility Model is fundamental. In the cloud, security is a partnership. Cloud providers are responsible for the security of the cloud (the underlying infrastructure, physical security, etc.), while you as the customer are responsible for security in the cloud (your data, applications, configurations, access controls). This model extends to a hybrid environment, where you bear the primary responsibility for your on-premise sensitive data and how it interacts with cloud services.

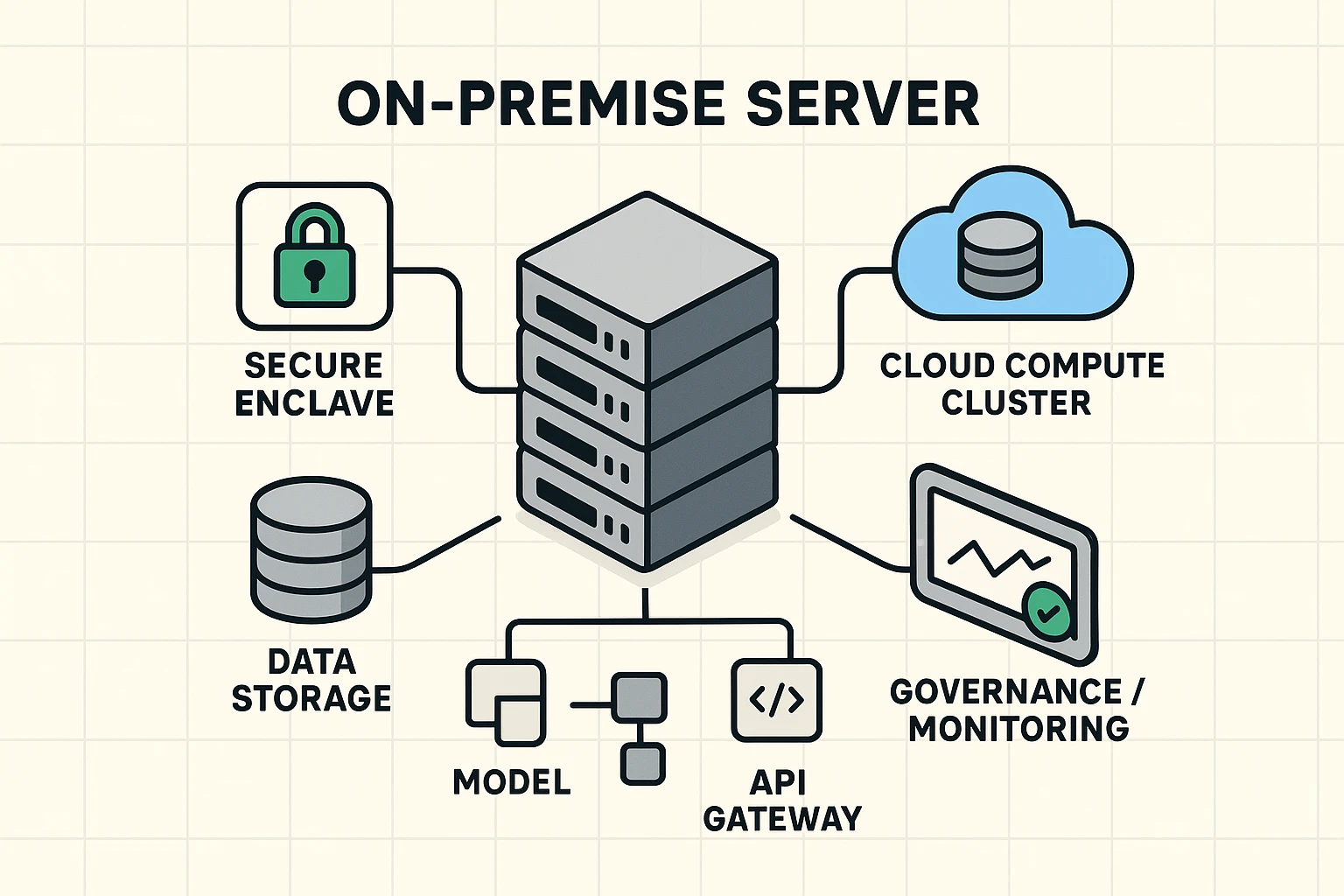

High-level hybrid AI architecture showing the on-premise data hub connected to cloud compute, confidential enclaves, and governance for secure AI workflows.

Building Your Hybrid AI Fortress: Practical Patterns

So, how do you actually make this work? It’s not a one-size-fits-all solution. Here are several architectural patterns that allow you to keep sensitive data on-premise while leveraging cloud AI capabilities:

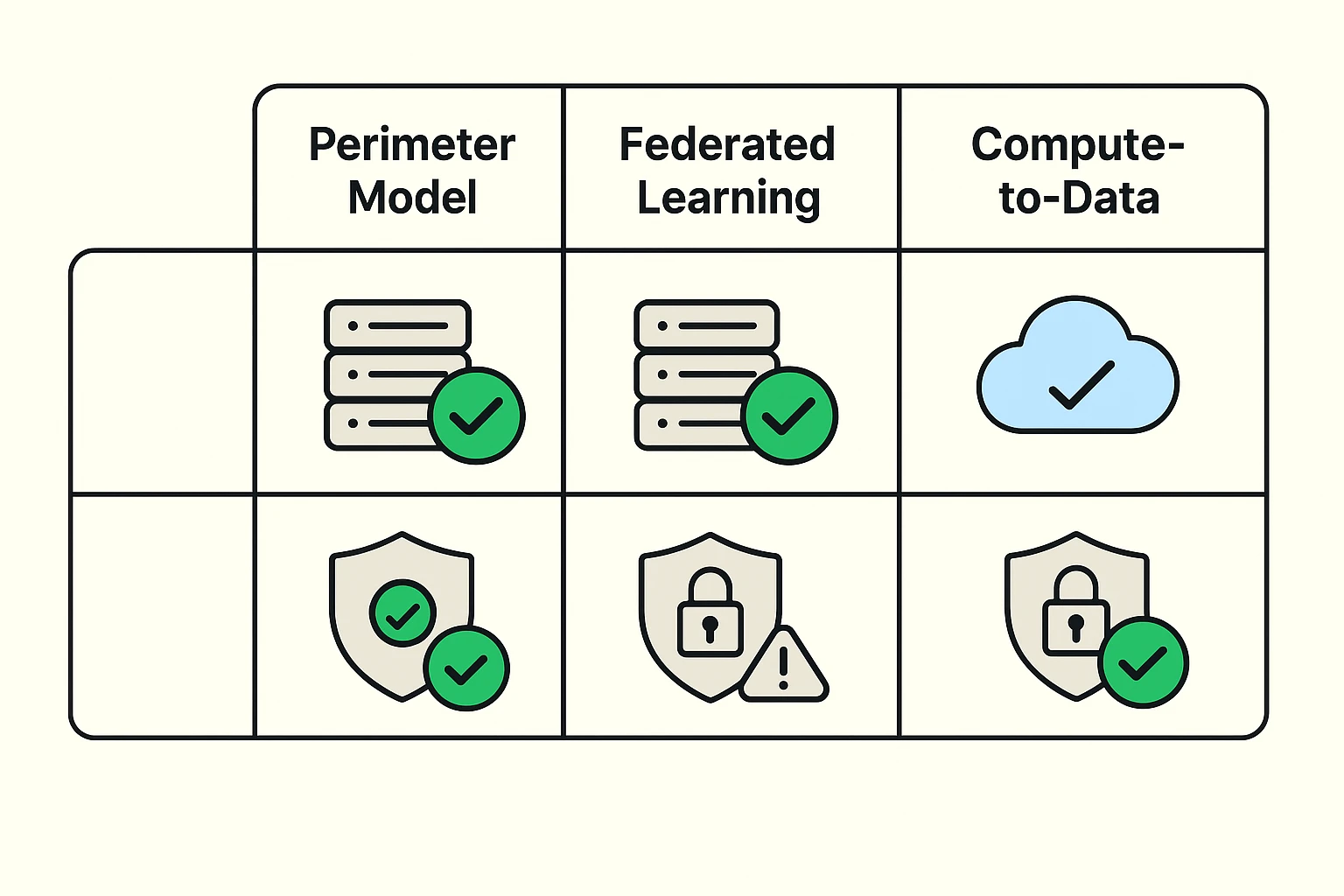

1. Data Perimeter Model (Keep Data Home, Send Compute)

In this pattern, your raw, sensitive data never leaves your on-premise data center. Instead, you follow a "compute-to-data" approach: the compute and training code come to the data, not the other way around. Cloud compute resources (e.g., virtual machines with GPUs) are extended into your on-premise network, or AI models are developed with the specific intent of being trained on your data without the data itself moving.

How it works:

- On-Premise: Raw sensitive data, data processing, and potentially initial model fitting. All data remains within your trusted boundary.

- Cloud: Provides access to specialized AI algorithms, libraries, powerful GPUs, and scalable compute. The trained model (or its parameters) might eventually be deployed in the cloud for inference on non-sensitive, anonymized data, or brought back on-premise.

Pros: Maximum data control, minimal data egress risk.

Cons: Requires strong on-premise compute capabilities for significant data volumes, can complicate cloud-native AI service integration.
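To make the compute-to-data idea concrete, here is a minimal Python sketch. It assumes a hypothetical on-premise job runner that executes `train_on_prem` next to the data. The function fits a one-feature linear model and returns only the fitted parameters and a row count, so the raw records never cross the boundary. The function name and record layout are illustrative assumptions, not a specific product's API.

```python
from statistics import mean
from typing import Dict, List, Tuple

def train_on_prem(records: List[Tuple[float, float]]) -> Dict[str, float]:
    """Fit y = slope * x + intercept by ordinary least squares.

    Hypothetical job that runs inside the on-premise boundary. It returns
    only aggregate model parameters, never the raw (x, y) records.
    """
    xs = [x for x, _ in records]
    ys = [y for _, y in records]
    x_bar, y_bar = mean(xs), mean(ys)
    # Closed-form OLS: slope = covariance(x, y) / variance(x)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in records)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    # Only these derived values are returned to the cloud side.
    return {"slope": slope, "intercept": intercept, "n_rows": float(len(records))}

if __name__ == "__main__":
    local_records = [(1.0, 3.0), (2.0, 5.0), (3.0, 7.0), (4.0, 9.0)]
    print(train_on_prem(local_records))
```

The design choice worth noting is the return value: whatever the job returns is exactly what leaves your perimeter, so it deserves the same review as any other data export.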

2. Hybrid AI with Masked or Synthetic Data

This approach involves creating non-sensitive versions of your data that can be safely transferred to the cloud for AI model training or research.

- Masked Data: Techniques like tokenization, redaction, or anonymization replace sensitive identifiers with placeholder values or aggregate data. For example, instead of a patient's name, you use a unique token that only makes sense within the on-prem database.

- Synthetic Data: AI models generate completely artificial datasets that statistically mimic the real sensitive data but contain no actual personal or confidential records. Once validated to confirm that it does not reproduce or leak real records, this synthetic data can be used in the cloud with far lower risk.
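The simplest possible synthetic-data generator makes the idea tangible, although it is far from production quality. The hypothetical sketch below, with made-up column names, fits a normal distribution to each numeric column on-premise and samples new rows from those fits. It preserves only per-column means and spreads, not correlations between columns, and it offers no formal privacy guarantee on its own; real generators need much richer models and careful validation.

```python
import random
from statistics import mean, stdev
from typing import Dict, List, Tuple

Row = Dict[str, float]

def fit_marginals(rows: List[Row]) -> Dict[str, Tuple[float, float]]:
    """Estimate (mean, standard deviation) for each numeric column of the real data."""
    columns = rows[0].keys()
    return {c: (mean(r[c] for r in rows), stdev(r[c] for r in rows)) for c in columns}

def sample_synthetic(marginals: Dict[str, Tuple[float, float]], n: int, seed: int = 0) -> List[Row]:
    """Draw n artificial rows, sampling each column independently from a normal distribution."""
    rng = random.Random(seed)
    return [{c: rng.gauss(mu, sigma) for c, (mu, sigma) in marginals.items()} for _ in range(n)]

if __name__ == "__main__":
    # Runs on-premise: only the fitted summary statistics feed the generator.
    real_rows = [
        {"age": 34.0, "balance": 1200.0},
        {"age": 51.0, "balance": 5400.0},
        {"age": 29.0, "balance": 800.0},
        {"age": 46.0, "balance": 3100.0},
    ]
    synthetic = sample_synthetic(fit_marginals(real_rows), n=3, seed=7)
    print(synthetic)
```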

How it works:

- On-Premise: Raw sensitive data is stored, and masking/synthetic data generation processes occur under strict control.

- Cloud: AI models are trained and developed using the masked or synthetic data. This allows for rapid iteration and leveraging cloud scale for development.

Pros: Lowers risk of data exposure in the cloud, enables agile AI development.

Cons: Masking can reduce data utility for complex AI tasks; generating high-quality synthetic data is a challenge in itself and requires careful validation.

3. Federated Learning

Federated learning represents a paradigm shift where the models travel to the data, rather than the data traveling to the models. This is particularly powerful when data is distributed across multiple secure locations (like different hospitals or financial institutions) or, in this context, between your on-premise environment and a cloud orchestrator.

How it works:

- On-Premise: Multiple local AI models are trained on distinct, sensitive datasets located on-premise. The raw data never leaves its source.

- Cloud (or Central Server): A central server aggregates the learned parameters (not the data!) from these local models, creating a global model. This global model is then sent back to the local instances for further refinement.

Pros: Maximizes data privacy and sovereignty, ideal for collaborative AI without data sharing.

Cons: Training can be slower, requires robust security around model updates, complex infrastructure management.
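The aggregation step is commonly implemented with Federated Averaging (FedAvg): each site trains briefly on its own data, and the server computes a sample-weighted average of the resulting parameters. The sketch below simulates that loop in plain Python for a one-feature linear model, assuming two hypothetical sites with noiseless toy data. Real deployments add secure transport, validation of incoming updates, and often secure aggregation on top.

```python
from typing import List, Tuple

Params = Tuple[float, float]          # (w, b) for a linear model y = w * x + b
Dataset = List[Tuple[float, float]]   # (x, y) pairs that never leave their site

def local_update(params: Params, data: Dataset, lr: float = 0.5, epochs: int = 5) -> Params:
    """Run a few gradient-descent steps on mean squared error, on one site's data."""
    w, b = params
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b

def fed_avg(updates: List[Tuple[Params, int]]) -> Params:
    """Average site parameters, weighted by each site's number of examples."""
    total = sum(n for _, n in updates)
    w = sum(p[0] * n for p, n in updates) / total
    b = sum(p[1] * n for p, n in updates) / total
    return w, b

def train_federated(sites: List[Dataset], rounds: int = 100) -> Params:
    global_params: Params = (0.0, 0.0)
    for _ in range(rounds):
        # Each site trains locally; only (parameters, example count) is shared.
        updates = [(local_update(global_params, data), len(data)) for data in sites]
        global_params = fed_avg(updates)
    return global_params

if __name__ == "__main__":
    site_a = [(x, 2 * x + 1) for x in (0.0, 0.2, 0.4, 0.6)]
    site_b = [(x, 2 * x + 1) for x in (0.3, 0.5, 0.7, 0.9, 1.0)]
    w, b = train_federated([site_a, site_b])
    print(f"w={w:.3f}, b={b:.3f}")  # should approach w=2, b=1 for this noiseless data
```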

4. Compute-to-Data Model (with Secure Enclaves)

This advanced pattern combines the "keep data home" principle with cutting-edge security to process sensitive data even more securely. Secure enclaves, the hardware foundation of confidential computing, are isolated, hardware-protected environments that keep data encrypted in memory while it is being processed, preventing unauthorized access even from the cloud provider or other processes on the same machine.

How it works:

- On-Premise: Raw sensitive data.

- Cloud (Secure Enclave): Only encrypted portions of the sensitive data, or the sensitive AI inference task itself, are sent to a secure enclave in the public cloud. The AI model and data are processed in a hardware-isolated, verifiable environment whose contents even the cloud operator cannot view.

Pros: Offers the highest level of data confidentiality during computation in the cloud, even for sensitive operations.

Cons: Still an emerging technology with performance overhead, requires specialized hardware and software support, can be complex to implement.
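In practice, keys or data are released to an enclave only after it proves which code it is running (remote attestation). The sketch below shows just the policy decision a hypothetical on-premise key broker might make, assuming the attestation report's hardware signature has already been checked by the vendor's attestation service. The `AttestationReport` fields and the allowlisted measurement value are illustrative placeholders, not a real vendor format.

```python
import hmac
from dataclasses import dataclass

# Hypothetical allowlist: hex-encoded measurements (code hashes) of approved enclave builds.
APPROVED_MEASUREMENTS = {"9f2c" + "0" * 60}

@dataclass
class AttestationReport:
    measurement: str          # hash of the code loaded into the enclave
    debug_mode: bool          # debug enclaves can be inspected, so they are never trusted
    signature_verified: bool  # assumed to be set by a prior vendor verification step

def release_data_key(report: AttestationReport, data_key: bytes) -> bytes:
    """Return the data-encryption key only to an approved, non-debug enclave."""
    if not report.signature_verified:
        raise PermissionError("attestation signature not verified")
    if report.debug_mode:
        raise PermissionError("debug enclaves are not trusted with sensitive data")
    # Constant-time comparison against each approved measurement.
    if not any(hmac.compare_digest(report.measurement, m) for m in APPROVED_MEASUREMENTS):
        raise PermissionError("unknown enclave measurement")
    return data_key
```

Keeping this decision on-premise means the cloud side never holds the decryption key unless an enclave you have explicitly approved asks for it.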

Side-by-side comparison of three hybrid AI patterns focused on data residency and security, highlighting trade-offs for keeping sensitive data on-premise.

Secure Data Movement & Integration: The Data Flow Choreography

Even if raw data stays put, its journey (or the journey of its derivatives) is crucial. How you manage data flow between on-premise and cloud is critical to security and compliance.

1. Robust Network Connectivity

Dedicated, private connectivity is essential. Site-to-site VPNs (Virtual Private Networks) create encrypted tunnels between your on-premise network and your cloud provider, while direct cloud interconnects (e.g., AWS Direct Connect, Azure ExpressRoute) provide private, high-bandwidth links that bypass the public internet entirely.
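A private link only helps if data jobs actually use it. One lightweight safeguard, sketched below under the assumption that your interconnect and VPN routes use the hypothetical private ranges shown, is to refuse any transfer whose destination address falls outside those ranges. Route tables and firewall rules remain the primary enforcement; this is a defense-in-depth check in application code.

```python
import ipaddress

# Hypothetical address ranges that are reachable only via the interconnect or VPN.
PRIVATE_LINK_RANGES = [
    ipaddress.ip_network("10.20.0.0/16"),
    ipaddress.ip_network("172.16.8.0/22"),
]

def destination_allowed(ip: str) -> bool:
    """Return True only if the destination sits inside an approved private range."""
    addr = ipaddress.ip_address(ip)
    return any(addr in net for net in PRIVATE_LINK_RANGES)

if __name__ == "__main__":
    for target in ("10.20.3.4", "8.8.8.8"):
        print(target, "allowed" if destination_allowed(target) else "blocked")
```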

2. Data Anonymization and Tokenization

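Before any data, however benign, crosses the boundary, consider anonymizing or tokenizing it. Tokenization replaces sensitive data elements with non-sensitive substitutes (tokens). For example, a credit card number might be replaced with a randomly generated token that only your on-prem system can map back to the original. This is a powerful technique for reducing the footprint of sensitive data in the cloud. Note that tokenization is pseudonymization rather than true anonymization: because the mapping can be reversed on-premise, regulations such as GDPR still treat tokenized personal data as personal data.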
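A token vault can be sketched in a few lines. The example below is a minimal, hypothetical in-memory version: tokens come from Python's `secrets` module, so they are random rather than derived from the original value, and only the holder of the vault (which in this architecture stays on-premise) can map a token back. A real vault would use a hardened, access-controlled, persistent store.

```python
import secrets
from typing import Dict

class TokenVault:
    """Minimal in-memory token vault; a production vault would live on-prem in a hardened store."""

    def __init__(self) -> None:
        self._token_to_value: Dict[str, str] = {}
        self._value_to_token: Dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # Reuse an existing token so joins on the tokenized column still work in the cloud.
        if value in self._value_to_token:
            return self._value_to_token[value]
        token = "tok_" + secrets.token_hex(16)  # random, not computed from the value
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        # Only callable where the vault lives, i.e. inside the on-premise boundary.
        return self._token_to_value[token]

if __name__ == "__main__":
    vault = TokenVault()
    card_token = vault.tokenize("4111111111111111")
    print(card_token)                    # safe to send to the cloud
    print(vault.detokenize(card_token))  # only possible on-premise
```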

3. Encryption Everywhere

Any data that does cross between on-premise and cloud must be encrypted:

- In Transit: Use TLS (version 1.2 or later) for all communication channels; the older SSL protocols are deprecated and insecure.

- At Rest: Encrypt data stored in cloud object storage, databases, and any temporary storage. Use customer-managed encryption keys (CMEK) where possible to maintain control over the encryption process.
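For data in transit, most of the work is refusing weak configurations. The sketch below uses only Python's standard `ssl` module to build a client context that verifies server certificates and hostnames and rejects anything older than TLS 1.2. The optional `cafile` argument assumes you may run a private certificate authority for internal endpoints. Encryption at rest with customer-managed keys is configured in your cloud provider's key management service and is not shown here.

```python
import ssl
from typing import Optional

def make_client_tls_context(cafile: Optional[str] = None) -> ssl.SSLContext:
    """TLS settings for connections that cross the on-premise/cloud boundary."""
    # create_default_context() enables certificate verification and hostname checking.
    ctx = ssl.create_default_context(cafile=cafile)
    # Refuse SSLv3, TLS 1.0, and TLS 1.1.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx
```

The resulting context can be passed to, for example, `urllib.request.urlopen(url, context=ctx)` or `ctx.wrap_socket(sock, server_hostname=host)`.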

4. Data Governance and Lifecycle Management

Implement strict policies for data retention, access, and destruction across your hybrid environment. Who has access to what data, when, and for how long? This is doubly important in a hybrid setup where different teams might control different parts of the infrastructure.
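Retention rules are easiest to audit when they are written down as data rather than buried in scripts. The sketch below assumes hypothetical classifications, locations, and retention periods (the numbers are placeholders, not regulatory guidance) and reports which assets are past their retention window and which have no policy at all and need review.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Dict, List

# Hypothetical policy: (location, classification) -> maximum retention in days.
RETENTION_DAYS: Dict[tuple, int] = {
    ("on_prem", "raw_sensitive"): 2555,
    ("cloud", "anonymized_features"): 180,
    ("cloud", "training_snapshot"): 30,
}

@dataclass
class DataAsset:
    name: str
    location: str          # "on_prem" or "cloud"
    classification: str
    created: date

def retention_actions(assets: List[DataAsset], today: date) -> Dict[str, List[str]]:
    """Split assets into those past retention ("delete") and those with no policy ("review")."""
    actions: Dict[str, List[str]] = {"delete": [], "review": []}
    for asset in assets:
        limit = RETENTION_DAYS.get((asset.location, asset.classification))
        if limit is None:
            actions["review"].append(asset.name)  # no policy: flag it instead of keeping it silently
        elif today - asset.created > timedelta(days=limit):
            actions["delete"].append(asset.name)
    return actions
```

Because the policy table covers both locations, the same check can run against on-premise and cloud inventories, which helps when different teams own each side.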

Stepwise secure data flow for hybrid AI: keep raw data on-premise, anonymize before transfer, train on cloud with anonymized features, and run sensitive inference in enclaves.

AI Model Lifecycle Management in Hybrid Environments

Beyond the data itself, managing the AI models requires a similar hybrid approach.

- Training & Development: You might develop initial model architectures on anonymized data in the cloud, but conduct final training on highly sensitive (but transformed) data on-premise, or leverage federated learning.

- Deployment & Inference:

  - On-Premise Inference: For real-time predictions on live, sensitive data, the fully trained model (or an optimized version) can be deployed back on-premise.

  - Cloud Inference (with guardrails): If inference is needed in the cloud, it should operate only on anonymized input or within secure enclaves for highly sensitive predictions.

- Monitoring & Governance: Continuous monitoring of model performance, bias, and data drift is essential. This also includes logging all access and usage of both data and models for auditability, a critical component for compliance.
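The deployment and audit rules above can be expressed as a small routing policy. The sketch below assumes a hypothetical set of sensitive field names and an `enclave_available` flag supplied by your platform. Requests with no sensitive fields go to ordinary cloud inference, sensitive requests go to an enclave when one is available, and everything else stays on-premise. Each decision is appended to an audit log that records field names but never field values.

```python
import json
from datetime import datetime, timezone
from typing import Any, Dict, List

# Hypothetical field names treated as sensitive under this organization's policy.
SENSITIVE_FIELDS = {"name", "ssn", "diagnosis", "account_number"}

AUDIT_LOG: List[str] = []

def route_inference(request: Dict[str, Any], enclave_available: bool) -> str:
    """Decide where a prediction request may run, and record the decision."""
    has_sensitive = not SENSITIVE_FIELDS.isdisjoint(request)
    if not has_sensitive:
        target = "cloud"
    elif enclave_available:
        target = "cloud_enclave"
    else:
        target = "on_prem"
    AUDIT_LOG.append(json.dumps({
        "time": datetime.now(timezone.utc).isoformat(),
        "target": target,
        "fields": sorted(request.keys()),  # field names only, never values
    }))
    return target
```

In a real system the audit entries would go to your SIEM or an append-only store rather than an in-memory list.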

The Compliance Imperative: GDPR, HIPAA, and Beyond

Regulatory compliance isn't an afterthought; it's a foundational pillar of hybrid AI architecture for sensitive data. Here's how crucial regulations influence design decisions:

- GDPR (General Data Protection Regulation): Emphasizes data minimization, purpose limitation, and strong security. Hybrid architectures can help achieve data minimization by only sending necessary (and often anonymized) data to the cloud, and purpose limitation by clearly defining what data is used for and where.

- HIPAA (Health Insurance Portability and Accountability Act): For protected health information (PHI), HIPAA mandates strict security, privacy, and administrative safeguards. HIPAA does not prohibit cloud use (a cloud provider that stores or processes PHI must sign a Business Associate Agreement), but many organizations respond by keeping raw PHI on-premise, leveraging data masking, or employing federated learning.

- CCPA (California Consumer Privacy Act): Focuses on consumer rights regarding their personal information. Architectures must support consumers' right to know what personal information is held about them and their right to deletion, across both on-premise and cloud components.

Each regulation has specific requirements for data residency, security controls, and accountability. Your hybrid AI architecture must be explicitly designed with these in mind, allowing you to demonstrate compliance through audit trails, robust access controls, and transparent data processing.
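Supporting access and deletion requests across both environments largely comes down to knowing every store that may hold a person's data. The sketch below is hypothetical: `Store` stands in for an on-premise database or a cloud dataset, and it assumes every store is keyed by the same subject identifier (or by a token your on-premise vault can map back). The deletion handler returns one audit record per store, the kind of evidence the audit trails mentioned above are meant to capture.

```python
from dataclasses import dataclass, field
from typing import Dict, List

@dataclass
class Store:
    """Stand-in for an on-premise database or a cloud dataset, keyed by subject ID."""
    name: str
    location: str                                   # "on_prem" or "cloud"
    records: Dict[str, dict] = field(default_factory=dict)

def handle_access_request(subject_id: str, stores: List[Store]) -> Dict[str, dict]:
    """Collect everything held about one subject, grouped by store name."""
    return {s.name: s.records[subject_id] for s in stores if subject_id in s.records}

def handle_deletion_request(subject_id: str, stores: List[Store]) -> List[dict]:
    """Delete one subject's records from every store and return an audit trail."""
    trail = []
    for s in stores:
        deleted = s.records.pop(subject_id, None) is not None
        trail.append({"store": s.name, "location": s.location,
                      "subject": subject_id, "deleted": deleted})
    return trail
```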

Advanced Considerations: Pushing the Boundaries of Trust

As you mature your hybrid AI strategy, several advanced techniques offer even greater protection:

- Homomorphic Encryption: An advanced cryptographic technique that allows computations to be performed on encrypted data without decrypting it first. This is still computationally intensive but holds immense promise for AI models that need to process highly sensitive data in untrusted environments (like the public cloud) without ever exposing the plaintext.

- Differential Privacy: A mathematical approach to adding a controlled amount of "noise" to datasets and query results, designed to prevent individual records from being re-identified, even when sharing insights derived from sensitive data. This can be layered on top of anonymized data shared with the cloud.

- Quantum Security: While the immediate threat is still nascent, understanding post-quantum cryptography and building (or planning for) quantum-resistant mechanisms will become increasingly important for long-term data protection.
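Differential privacy is easiest to see on a single counting query. The sketch below applies the standard Laplace mechanism using only Python's standard library: because adding or removing one record changes a count by at most 1, adding Laplace noise with scale 1/epsilon makes the released count epsilon-differentially private. It is illustrative only; production systems should rely on a vetted differential privacy library and track the total privacy budget spent across queries.

```python
import random
from typing import Callable, Iterable

def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws with mean `scale`."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def dp_count(values: Iterable, predicate: Callable[[object], bool],
             epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy via the Laplace mechanism."""
    true_count = sum(1 for v in values if predicate(v))
    sensitivity = 1.0  # one record changes a count by at most 1
    return true_count + laplace_noise(sensitivity / epsilon, rng)

if __name__ == "__main__":
    rng = random.Random(42)
    ages = [23, 35, 41, 58, 62, 67, 71, 80]
    print(dp_count(ages, lambda a: a >= 65, epsilon=0.5, rng=rng))
```

A smaller epsilon means more noise and stronger privacy; a larger epsilon means more accurate answers and weaker protection.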

FAQ: Your First Steps into Hybrid AI for Sensitive Data

Q1: What kind of data is considered "sensitive" and requires on-premise residency?

Sensitive data often includes Personally Identifiable Information (PII) like names, addresses, Social Security numbers, Protected Health Information (PHI), financial records, intellectual property, trade secrets, and classified government data. The exact definition depends on industry regulations (e.g., HIPAA for healthcare, GDPR for personal data) and your organization's specific policies.

Q2: Can I really train powerful AI models if my sensitive data never leaves on-premise?

Yes, absolutely! While it presents unique challenges, methods like federated learning, compute-to-data, and anonymization/synthetic data generation are specifically designed for this purpose. The key is to strategically leverage the cloud for compute and specialized AI services while maintaining strict control over your raw data.

Q3: What are the biggest risks of a hybrid cloud approach with sensitive data?

The primary risks include misconfigured access controls, inadequate encryption during data transfer or at rest, compliance gaps due to incomplete understanding of data residency rules, and potential data leakage through insecure API endpoints. It requires meticulous planning and continuous monitoring.

Q4: Do I need specialized security tools for hybrid AI?

Yes, generic security tools may not be sufficient. Look for solutions that offer:

- Unified Identity and Access Management (IAM): To manage user access across both on-premise and cloud environments.

- Data Loss Prevention (DLP): To detect and prevent sensitive data from leaving authorized boundaries.

- Security Information and Event Management (SIEM): For centralized logging and threat detection across your hybrid infrastructure.

- Cloud Security Posture Management (CSPM): To continuously monitor and enforce security policies in your cloud environment.
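To make the DLP idea concrete, here is a deliberately small, hypothetical pre-egress check in Python. It looks for US Social Security number formatting and for digit runs that pass the Luhn checksum used by payment card numbers. Commercial DLP tools use far richer detection (context, dictionaries, classifiers); this only illustrates where such a check sits, right before data leaves an authorized boundary.

```python
import re
from typing import List

SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){13,19}\b")  # 13-19 digits, optional separators

def luhn_valid(number: str) -> bool:
    """Luhn checksum: double every second digit from the right and sum."""
    digits = [int(d) for d in number if d.isdigit()]
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0

def find_sensitive(text: str) -> List[str]:
    findings = []
    if SSN_PATTERN.search(text):
        findings.append("ssn")
    for match in CARD_CANDIDATE.finditer(text):
        if luhn_valid(match.group()):
            findings.append("card")
            break
    return findings

def assert_safe_to_export(text: str) -> None:
    """Block the export if any sensitive pattern is detected."""
    findings = find_sensitive(text)
    if findings:
        raise PermissionError(f"export blocked, found: {', '.join(findings)}")
```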

Q5: How does this align with industry regulations like GDPR or HIPAA?

Hybrid architectures, when built correctly, can be a cornerstone of regulatory compliance. By retaining sensitive data on-premise, employing robust encryption, implementing strict access controls, and maintaining detailed audit trails, you can demonstrate adherence to data minimization, purpose limitation, and security requirements mandated by GDPR, HIPAA, and other regulations. It's about designing compliance into your architecture.

Q6: What if my on-premise infrastructure isn't very powerful?

This is a common challenge. In such cases, the focus shifts more heavily towards:

- Effective Data Anonymization/Tokenization: Preparing non-sensitive datasets on-premise for cloud training.

- Federated Learning: Leveraging the compute power available wherever the data resides.

- Compute-to-Data with Edge Devices: Bringing smaller, specialized AI models to the edge (closer to your data sources) for localized processing.

While raw, sensitive data might stay on-premise, you can still leverage cloud compute by only sending out non-sensitive derivatives or model parameters.

Q7: How can AI itself help secure my hybrid cloud?

AI can play a crucial role in enhancing security:

- Threat Detection: AI-powered anomaly detection can identify unusual data access patterns or network traffic that might indicate a breach.

- Automated Compliance Checks: AI can analyze configurations and logs to ensure continuous compliance with internal policies and external regulations.

- Predictive Security: Models trained on past incidents can help anticipate similar attacks and prioritize defenses before they recur.
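As a much simpler statistical stand-in for the AI-powered anomaly detection described above, the sketch below flags users whose record-access count today sits far above their own historical baseline (a z-score test). The data shape and the threshold of three standard deviations are assumptions for illustration. A learned model would replace the baseline in practice, but the surrounding workflow of compare, score, and alert stays the same.

```python
from statistics import mean, stdev
from typing import Dict, List

def flag_unusual_access(history: Dict[str, List[int]], today: Dict[str, int],
                        threshold: float = 3.0) -> List[str]:
    """Flag users whose record-access count today is far above their own baseline."""
    flagged = []
    for user, counts in history.items():
        if len(counts) < 2 or user not in today:
            continue  # skip users without a usable baseline or with no activity today
        mu = mean(counts)
        sigma = max(stdev(counts), 1.0)  # floor avoids huge scores for very regular users
        if (today[user] - mu) / sigma > threshold:
            flagged.append(user)
    return flagged
```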

Your Path to Becoming an AI-First, Secure Business

Embracing AI while rigorously protecting sensitive data is not just an IT challenge—it's a strategic imperative. The hybrid cloud data architecture isn't merely a technical solution; it's a testament to an organization's commitment to innovation and ethical data stewardship. By carefully orchestrating your on-premise resources with the power of the cloud, you can unlock AI's full potential without ever compromising trust or compliance.

Ready to explore how a tailored AI growth system can transform your operations while safeguarding your most valuable assets? Dig deeper into how BenAI helps businesses like yours navigate the complexities of AI implementation, from automating mundane tasks with AI Agents to optimizing your entire content strategy with AI. The future of AI is here, and it's secure.
