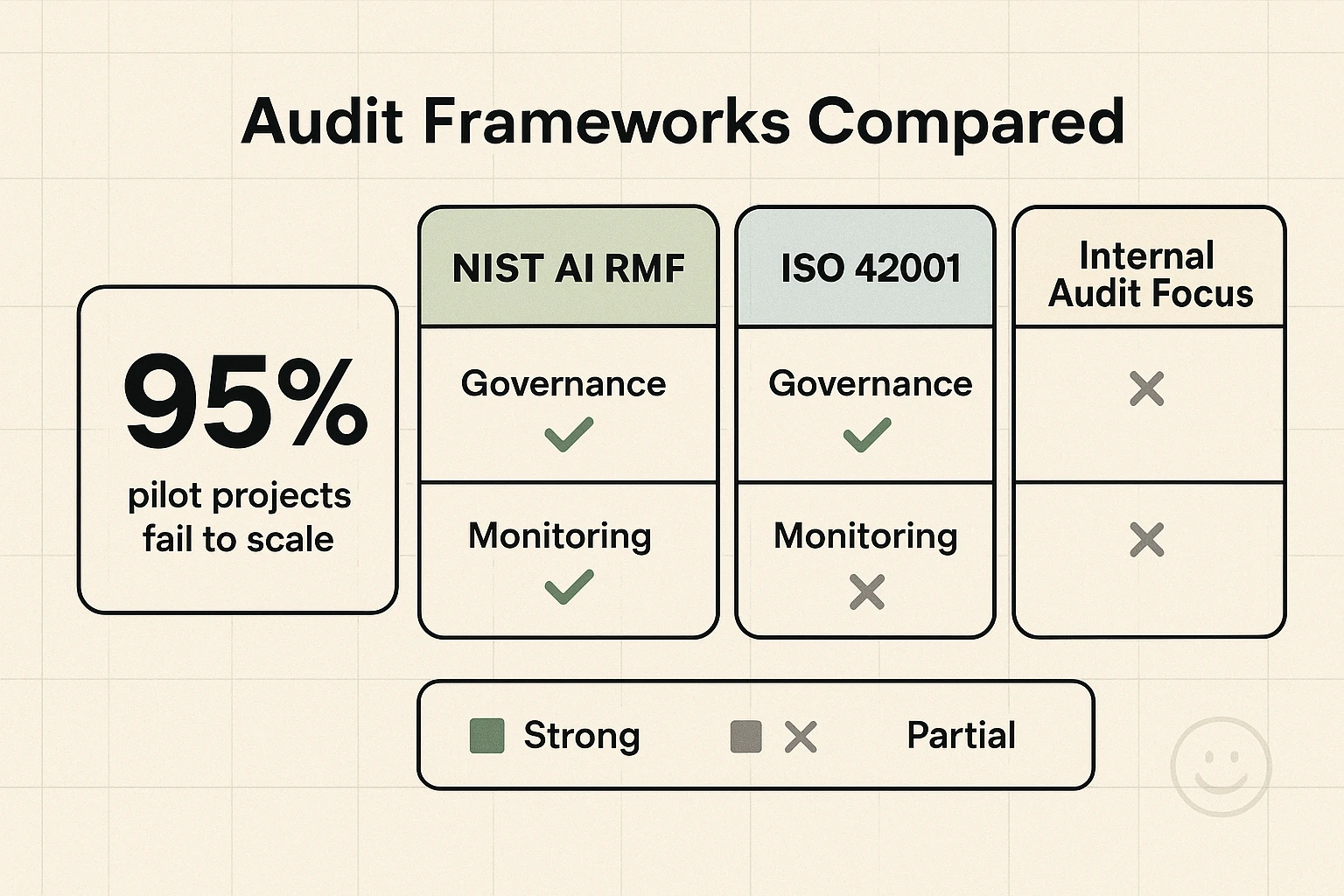

You've invested in AI, navigated the complexities of implementation, and your new systems are live. But here's the uncomfortable truth: simply "going live" with AI is often just the beginning of a much larger journey. Approximately 95% of generative AI pilot projects fail to achieve significant business impact, according to MIT research. This isn't just about initial deployment; it's about the hidden costs and missed opportunities that arise when AI systems aren't continuously monitored, audited, and improved.

If you're evaluating how to safeguard your AI investments, ensure long-term value, and mitigate escalating risks, you're looking beyond the initial hype toward sustainable, measurable impact. This guide will provide the frameworks and methodologies you need to confidently assess your AI's effectiveness and establish processes for continuous optimization.

Beyond Go-Live: Why Continuous AI Oversight Is Critical

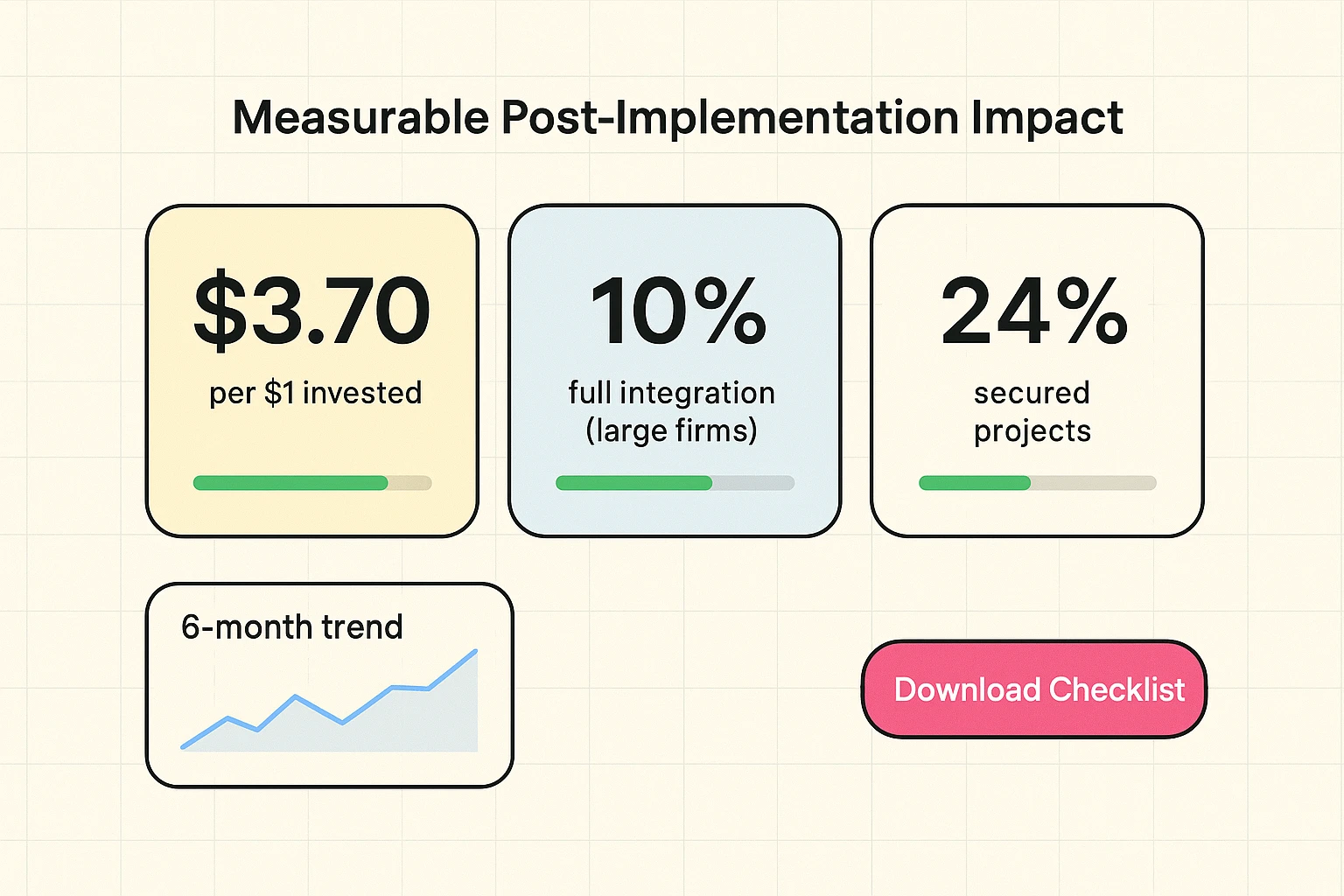

The promise of AI is transformative, enabling businesses to automate tasks, reduce manual work, and scale without increasing headcount. BenAI's core mission is to help companies become "AI-first" by implementing proven AI systems. But the reality of AI adoption involves converting pilots into production-ready, impactful solutions. The "95% failure rate" isn't a condemnation of AI itself, but a stark reminder that integration, ongoing learning, and resource allocation are paramount. By 2024, 65% of companies had adopted generative AI, but only 10% of companies with $1-5 billion in revenue had fully integrated it. The challenge isn't adoption; it's successful, sustainable integration.

The evolving regulatory landscape—with frameworks like the EU AI Act and NIST AI Risk Management Framework—also demands robust auditing. Regulations are moving AI governance beyond mere compliance towards proactive enablement. If you aren't actively monitoring and improving your AI, you risk not only losing competitive advantage but also facing compliance failures, as 65% of AI compliance failures are linked to unnoticed monthly changes.

Phase 1: Defining the "Why" – Strategic Imperatives for AI Audit & Improvement

Before diving into the "how," it's crucial to solidify the strategic necessity of continuous AI oversight. Your stakeholders need to understand the inherent value.

Business Impact Measurement: Quantifying AI's Contribution

Measuring AI's true business impact goes beyond basic metrics. It's about demonstrating how your AI investment drives tangible results—revenue growth, cost savings, or operational efficiency gains. Successful early AI adopters report significant returns, with one study showing $3.70 gained per $1 invested in generative AI.

Key Performance Indicators (KPIs) for AI success often include:

- Model Accuracy & Precision: How well the AI performs its intended task.

- Operational Efficiency: Time savings, reduced manual effort, and throughput improvements.

- User Satisfaction: How well the AI enhances experiences for employees and customers.

- Output Quality: The quality of content, decisions, or analyses generated by the AI.

Traditional metrics often fall short when evaluating AI value. A comprehensive review ensures you're capturing the full spectrum of benefits, as well as any unintended consequences. Tracking and reporting on these KPIs is not just good practice; it is essential for justifying ongoing investment and demonstrating ROI.
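To make the KPIs above concrete, here is a minimal sketch of how accuracy, precision, and return per dollar invested could be computed. The `ConfusionCounts` structure, the function names, and the sample numbers are all illustrative assumptions, not figures from a real deployment.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    """Outcome counts for a binary task (hypothetical structure)."""
    true_positive: int
    false_positive: int
    true_negative: int
    false_negative: int


def accuracy(c: ConfusionCounts) -> float:
    """Share of all predictions that were correct."""
    total = c.true_positive + c.false_positive + c.true_negative + c.false_negative
    return (c.true_positive + c.true_negative) / total if total else 0.0


def precision(c: ConfusionCounts) -> float:
    """Share of positive predictions that were actually positive."""
    predicted_positive = c.true_positive + c.false_positive
    return c.true_positive / predicted_positive if predicted_positive else 0.0


def return_per_dollar(gain: float, cost: float) -> float:
    """Value returned per unit of spend, e.g. 3.70 means $3.70 per $1 invested."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    return gain / cost


# Made-up sample numbers for illustration only.
counts = ConfusionCounts(true_positive=80, false_positive=20,
                         true_negative=890, false_negative=10)
print(f"accuracy={accuracy(counts):.3f} precision={precision(counts):.3f}")
print(f"return per $1: {return_per_dollar(gain=37_000, cost=10_000):.2f}")
```

Reporting accuracy and precision side by side is deliberate: on imbalanced data, a model can score high on accuracy while still producing many false positives.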

Risk & Governance Landscape: Navigating Evolving Threats

The complexity of AI introduces new risks that require continuous monitoring. This includes issues like data integrity, algorithmic fairness, security vulnerabilities, and model drift. With 75% of customers worried about data security and only 24% of generative AI projects currently secured, the oversight gap is substantial.

Key areas of concern:

- AI Bias & Fairness: Ensuring your AI systems don't perpetuate or amplify existing biases. Continuous auditing helps detect and mitigate disparate impact.

- Data Integrity & Lineage: Poor data quality can cripple even the most sophisticated AI. Audits ensure the data feeding your models remains clean and reliable, especially in dynamic environments where data sources change frequently. For more insights on this, you might explore building a robust AI data strategy.

- Security Vulnerabilities: AI models can be susceptible to adversarial attacks or data breaches, making continuous security audits crucial to protect against evolving threats.

- Regulatory Compliance: New regulations are frequently emerging. Your AI systems must remain compliant with standards like ISO 42001 and the NIST AI Risk Management Framework.

Proactive risk management transforms AI governance from a reactive gatekeeping function to an enabler of responsible innovation.

Phase 2: The "How" – Methodologies for Continuous AI Audit

Once the strategic imperative is clear, the next step is establishing robust methodologies for auditing your AI systems. This isn't a one-time check; it's an ongoing process.

Establishing AI Audit Methodologies

Integrating established frameworks provides a solid foundation for your AI audit strategy:

- NIST AI Risk Management Framework (RMF): A voluntary framework that helps organizations manage risks related to AI technologies.

- ISO 42001: An international standard for AI management systems, providing guidance on how to manage AI risks and opportunities.

- COBIT (Control Objectives for Information and Related Technologies): While broader, COBIT principles can be adapted for AI governance, focusing on delivering value and managing risks.

- IIA AI Auditing Framework: Specific guidance from the Institute of Internal Auditors on auditing AI.

A structured approach, like a 7-step framework, can guide your efforts:

- Assessment: Understand current AI deployments, objectives, and potential risks.

- High-Impact Opportunities: Identify critical areas where AI audit is most needed and can deliver the most value.

- Business Case: Develop a clear rationale for continuous auditing, linking it to business outcomes.

- Technology Selection: Choose appropriate tools and platforms for monitoring and auditing.

- Phased Rollout: Implement audit processes incrementally.

- Change Management: Ensure your teams are ready for new processes and responsibilities.

- Continuous Measurement: Ongoing tracking of audit effectiveness and AI performance.

Key Audit Focus Areas

Your continuous AI audit should cover three core components: Data, Model, and Deployment. The five focus areas below span these components.

Data Integrity & Lineage

Your AI is only as good as its data. Continuous monitoring of data integrity ensures the inputs to your AI remain high-quality. This involves confirming data sources, examining data transformations, and tracking changes over time. Overlooked technical details in data pipelines can lead to significant issues downstream.
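A data integrity check of the kind described above might look like the following sketch. The `SCHEMA` fields and the `validate_batch` helper are hypothetical; a real pipeline would use its own schema and would likely track these counts over time.

```python
from typing import Any

# Hypothetical schema: field name -> (expected type, whether None is allowed)
SCHEMA = {
    "customer_id": (str, False),
    "age": (int, True),
    "spend": (float, False),
}


def validate_batch(rows: list[dict[str, Any]], schema=SCHEMA) -> dict[str, int]:
    """Count integrity problems in a batch of records."""
    issues = {"missing_field": 0, "unexpected_null": 0, "wrong_type": 0}
    for row in rows:
        for field, (expected_type, nullable) in schema.items():
            if field not in row:
                issues["missing_field"] += 1
            elif row[field] is None:
                if not nullable:
                    issues["unexpected_null"] += 1
            elif not isinstance(row[field], expected_type):
                issues["wrong_type"] += 1
    return issues


# Illustrative batch: one clean row and two rows with problems.
batch = [
    {"customer_id": "c1", "age": 34, "spend": 120.5},
    {"customer_id": None, "age": None, "spend": 10.0},
    {"customer_id": "c3", "spend": "n/a"},
]
report = validate_batch(batch)
print(report)
```

Comparing these counts between pipeline runs is one simple way to notice that an upstream source has changed.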

Algorithmic Fairness & Bias

Bias isn't always intentional, but its impact can be severe. Auditing for algorithmic fairness involves continuously checking for disparate impact across different demographic groups. Tools and methodologies exist to detect and help mitigate these biases.
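One common way to check for disparate impact is to compare favorable-outcome rates across groups. The sketch below assumes records arrive as `(group, favorable_outcome)` pairs. The 0.8 alert threshold is the widely cited "four-fifths" rule of thumb, used here as an assumption rather than a legal standard for your context.

```python
from collections import defaultdict


def selection_rates(records):
    """records: iterable of (group, favorable_outcome) -> {group: favorable rate}."""
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        favorable[group] += int(outcome)
    return {g: favorable[g] / totals[g] for g in totals}


def disparate_impact_ratio(records):
    """Lowest group selection rate divided by the highest."""
    rates = selection_rates(records)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


# Synthetic example: group A is approved 50% of the time, group B 30%.
records = (
    [("A", True)] * 50 + [("A", False)] * 50
    + [("B", True)] * 30 + [("B", False)] * 70
)
ratio = disparate_impact_ratio(records)
needs_review = ratio < 0.8  # assumed threshold (four-fifths rule of thumb)
print(f"ratio={ratio:.2f} needs_review={needs_review}")
```

A single ratio is only a starting signal. A flagged result should prompt a closer look at the data and the decision context, not an automatic conclusion.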

Model Performance & Drift

AI models, particularly in dynamic environments, don't perform perfectly forever. Model drift occurs when the relationship between input data and output changes over time, degrading performance. Continuous monitoring detects this degradation and triggers alerts for retraining or recalibration. Without it, you might not notice that your AI's effectiveness is waning until it significantly impacts your business. This matters for any deployed system, from AI for quality control to AI site speed analysis tools.
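One widely used early-warning signal is the Population Stability Index (PSI), sketched below for a single numeric feature. Note that PSI measures shift in the input distribution, which is a proxy for drift and not a direct measure of the input-output relationship described above. The bin count and the 0.25 alert level are commonly cited rules of thumb, treated here as assumptions.

```python
import math


def population_stability_index(baseline, current, bins=10):
    """PSI between a baseline sample and a current sample of one numeric feature."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if baseline is constant

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    p_base, p_cur = proportions(baseline), proportions(current)
    return sum((c - b) * math.log(c / b) for b, c in zip(p_base, p_cur))


# Synthetic data: the current sample is concentrated in the upper half of the range.
baseline = [i / 100 for i in range(100)]
shifted = [0.5 + i / 200 for i in range(100)]

psi_same = population_stability_index(baseline, baseline)
psi_shifted = population_stability_index(baseline, shifted)
drift_alert = psi_shifted > 0.25  # assumed alert level
print(f"psi_same={psi_same:.3f} psi_shifted={psi_shifted:.3f} alert={drift_alert}")
```

Because PSI needs no ground-truth labels, it can run on live traffic before labeled outcomes arrive. Once labels are available, pairing it with a direct accuracy check gives a fuller picture.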

Security & Adversarial Robustness

Deployed AI models can be vulnerable to new types of attacks. Continuous security auditing involves testing against adversarial attacks and ensuring your AI is robust against manipulation, protecting both your data and your decision-making processes.

Human-in-the-loop and Explainability

For complex AI systems, maintaining human oversight and ensuring explainability are paramount. Audits should verify that human experts can understand and intervene in AI decisions, especially in critical areas, fostering trust and accountability. This also means making sure your AI systems do not make it too difficult to identify and correct errors manually.
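A simple way to keep humans in the loop is to route low-confidence decisions to a reviewer and log every routing choice for later audit. The sketch below assumes the model exposes a confidence score. The `Decision` structure and the 0.9 threshold are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    item_id: str
    label: str
    confidence: float  # model's own score in [0, 1]


def route(decision: Decision, threshold: float = 0.9) -> str:
    """Return 'auto' when confidence clears the threshold, else 'human_review'."""
    return "auto" if decision.confidence >= threshold else "human_review"


# Every decision is logged with its route so auditors can trace who decided what.
audit_log = []
for d in [Decision("a1", "approve", 0.97), Decision("a2", "reject", 0.62)]:
    audit_log.append({
        "item_id": d.item_id,
        "label": d.label,
        "confidence": d.confidence,
        "route": route(d),
    })

for entry in audit_log:
    print(entry)
```

The threshold is a policy choice. Lowering it reduces reviewer workload but lets more uncertain decisions through unreviewed.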

Phase 3: Building the "Engine" – Continuous Improvement Mechanisms

Beyond auditing, continuous improvement is about designing systems that proactively adapt and evolve. This involves setting up feedback loops and robust change management.

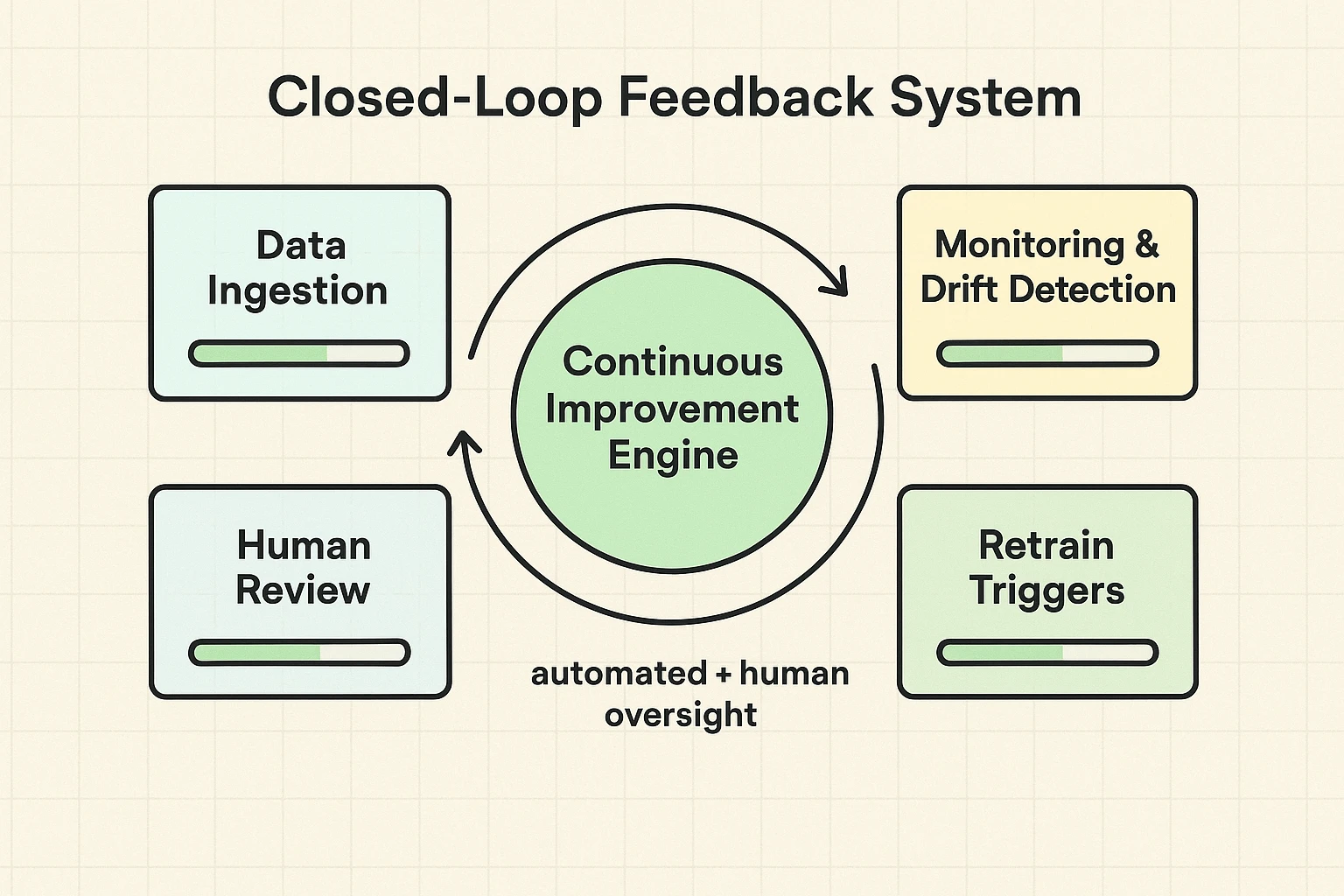

Designing Effective Feedback Loops

Feedback loops are essential for ensuring your AI models learn and improve over time. They transform insights from audits into actionable changes.

- User Interaction Analysis: Monitor how users interact with AI outputs, identifying areas for improvement or potential errors.

- Error Correction & Automated Retraining: Implement processes to correct identified errors and automatically retrain models when performance thresholds are breached or new patterns emerge. One example is a system that automatically refines its AI-generated content refresh recommendations based on user engagement.

- Closed-Loop Systems: Ideally, feedback should flow directly back into the AI system, allowing for self-correction. Care must be taken to avoid self-reinforcing biases during this process.
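The threshold-based retraining trigger mentioned in the list above can be sketched as a rolling accuracy monitor. The `RetrainTrigger` class, its window size, and its accuracy floor are illustrative assumptions. How retraining is actually launched is left out because it depends on your stack.

```python
from collections import deque


class RetrainTrigger:
    """Signals retraining when rolling accuracy drops below a floor."""

    def __init__(self, min_accuracy=0.9, window=100):
        self.min_accuracy = min_accuracy
        self.outcomes = deque(maxlen=window)  # keeps only the most recent results

    def record(self, prediction, actual) -> bool:
        """Record one labeled outcome; return True when retraining should be requested."""
        self.outcomes.append(prediction == actual)
        if len(self.outcomes) < self.outcomes.maxlen:
            return False  # not enough evidence yet
        return sum(self.outcomes) / len(self.outcomes) < self.min_accuracy


# Small window so the behavior is easy to follow by hand.
trigger = RetrainTrigger(min_accuracy=0.8, window=5)
labeled_pairs = [(1, 1), (1, 1), (0, 1), (1, 1), (0, 1), (0, 1)]
signals = [trigger.record(pred, actual) for pred, actual in labeled_pairs]
print(signals)
```

Waiting for a full window before signaling avoids retraining on a handful of early errors. To guard against the self-reinforcing bias noted above, a human approval step can sit between the signal and the retraining job.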

Change Management for AI

AI systems are not static. Ongoing change management ensures that upgrades, updates, and strategic shifts don't disrupt your AI's effectiveness or compliance.

- Version Control: Implement robust version control for models, data sets, and hyperparameters to track changes and roll back to previous versions if needed.

- Agile Response to Regulations: Be prepared to adapt quickly to new regulatory requirements and industry best practices.

- Infrastructure Management: Your AI will likely grow. Plan for scaling infrastructure and resources to support expanding AI capabilities.
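For the version control point in the list above, one lightweight approach is to store a fingerprint of the dataset and hyperparameters alongside each model version. The `ModelVersion` record and the in-memory `registry` list below are hypothetical stand-ins for whatever model registry you use.

```python
import hashlib
import json
from dataclasses import dataclass


def fingerprint(obj) -> str:
    """Stable SHA-256 of any JSON-serializable object (key order does not matter)."""
    payload = json.dumps(obj, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


@dataclass(frozen=True)
class ModelVersion:
    name: str
    version: str
    dataset_hash: str
    hyperparameters_hash: str


def register(registry: list, name, version, dataset_rows, hyperparameters) -> ModelVersion:
    """Append an immutable version record to the registry and return it."""
    record = ModelVersion(name, version,
                          fingerprint(dataset_rows), fingerprint(hyperparameters))
    registry.append(record)
    return record


registry = []
data = [[1, 2], [3, 4]]  # placeholder for a real training set
v1 = register(registry, "churn-model", "1.0.0", data, {"lr": 0.1, "depth": 3})
v2 = register(registry, "churn-model", "1.1.0", data, {"depth": 3, "lr": 0.05})

# Same data, different hyperparameters: the hashes show exactly what changed.
print(v1.dataset_hash == v2.dataset_hash)
print(v1.hyperparameters_hash == v2.hyperparameters_hash)
rollback_target = registry[-2]  # the previous version, if a rollback is needed
```

Hashing the inputs lets an auditor confirm which data and settings produced a given model without storing full copies in the registry itself.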

Scaling AI Capabilities & Operationalization

As your AI systems mature and prove their value, you'll naturally want to expand their application. Strategies for scaling AI include:

- Resource Allocation: Smartly allocate compute, storage, and human resources as AI initiatives grow.

- Infrastructure Evolution: Ensure your underlying technical infrastructure can support increasingly complex AI models and higher data volumes.

- Talent Development: Continuously upskill your teams to manage, audit, and develop more sophisticated AI applications. This might involve training them on advanced topics like AI SEO reporting dashboards or AI LinkedIn campaign optimization.

Overcoming Competitive Vulnerabilities: Addressing Overlooked Challenges

Many companies offer AI solutions, but few provide truly comprehensive, post-implementation audit and continuous improvement strategies. This is where BenAI distinguishes itself. While competitors largely focus on high-level governance or generic automation, we blend strategic insight with technical depth to address the real-world complexities you face.

- Technical Deep Dive: We acknowledge and address specific, often overlooked challenges. This includes developing advanced strategies for monitoring edge cases, anticipating cascading failures in interconnected AI systems, and mitigating API risks that can compromise data flows and model integrity. Our focus is on the intricacies that other providers gloss over.

- Proof Over Promises: We emphasize auditable records, transparent dashboards, and repeatable processes. Our solutions provide definitive proof of performance and compliance, moving beyond theoretical guarantees to deliver quantifiable and verifiable results.

- Training & Upskilling: The talent shortage in AI is real, with 45% of companies lacking the necessary AI talent. We provide practical advice and solutions for building internal capabilities for AI auditing and continuous improvement. This includes tailored training and expert consulting to empower your teams, augmenting human capabilities rather than replacing them.

Conclusion: Your Roadmap to Resilient and High-Impact AI

The journey to becoming an "AI-first" business extends far beyond initial implementation. It requires a dedicated, continuous approach to auditing, monitoring, and improvement. By embracing the methodologies outlined here – focusing on strategic imperatives, implementing robust audit processes, and building effective feedback loops – you can transform your AI investments into sustainable engines of growth and efficiency.

Don't let your AI pilots fall into the 95% failure trap. Ensure compliance, mitigate risks, and maximize your ROI with a proactive approach to post-implementation AI oversight.

BenAI offers custom AI implementations, training, and consulting to help you not only adopt AI the right way but sustain its impact for years to come. Lead the way in AI adoption and make your AI resilient and continuously impactful.

Frequently Asked Questions

Q: What is the primary difference between a pre-implementation AI audit and a post-implementation one?

A pre-implementation audit focuses on assessing the readiness of your data, infrastructure, and strategy before deployment, identifying potential risks and ensuring alignment with objectives. A post-implementation audit, on the other hand, evaluates the actual performance of deployed AI systems: measuring real-world impact, detecting model drift and bias, and ensuring ongoing compliance and security.

Q: How often should we conduct a post-implementation AI audit?

The frequency depends on the criticality and dynamism of your AI system. For high-impact, rapidly evolving AI, continuous monitoring with automated alerts is recommended. Formal audits should occur at least annually, or quarterly for rapidly changing models or regulated environments. Remember, 65% of compliance failures are traced to unnoticed monthly changes, emphasizing the need for ongoing vigilance.

Q: Our internal audit team lacks AI expertise. How can we bridge this gap?

This is a common challenge, with 45% of companies lacking AI talent. BenAI helps bridge this gap through targeted AI training programs and consulting. We can upskill your existing teams, provide specialized external support, or help establish hybrid models where internal audit collaborates with AI experts to manage and oversee your AI systems effectively.

Q: What are the key metrics for measuring the ROI of continuous AI improvement?

Key metrics include reduced operational costs (via automation), increased efficiency (faster task completion, fewer errors), improved revenue (from optimized marketing or sales processes), enhanced customer satisfaction, and mitigated risks (fewer compliance issues, reduced bias incidents). We help you define custom KPIs that directly tie back to your business objectives.

Q: Can BenAI help integrate continuous improvement mechanisms into our existing AI infrastructure?

Absolutely. BenAI specializes in custom AI implementations and tailoring solutions. We work with your existing tech stack to design and implement robust feedback loops, monitoring tools, and automated retraining pipelines, ensuring seamless integration and minimal disruption. We don't just provide tools; we provide the strategic and technical guidance to make them work effectively within your unique environment.
