You've ventured into AI. Perhaps you’ve seen the promising results of an initial pilot project. But as you stand on the precipice of enterprise-wide adoption, questions loom large: How do you take that successful experiment and scale it across your entire organization? How do you move AI from a proof-of-concept to a reliable, revenue-driving machine?

If you’re evaluating strategies for operationalizing AI at scale, you're not alone. By one widely cited estimate, as many as 85% of machine learning projects fail to reach production. This is rarely due to a lack of ambition or talent. More often it reflects a gap in the Machine Learning Operations (MLOps) practices that bridge experimental success and real-world impact. The MLOps market itself is growing fast, with analyst projections for the early 2030s ranging from roughly $16 billion to $124 billion. That growth underscores the urgent need for effective solutions.

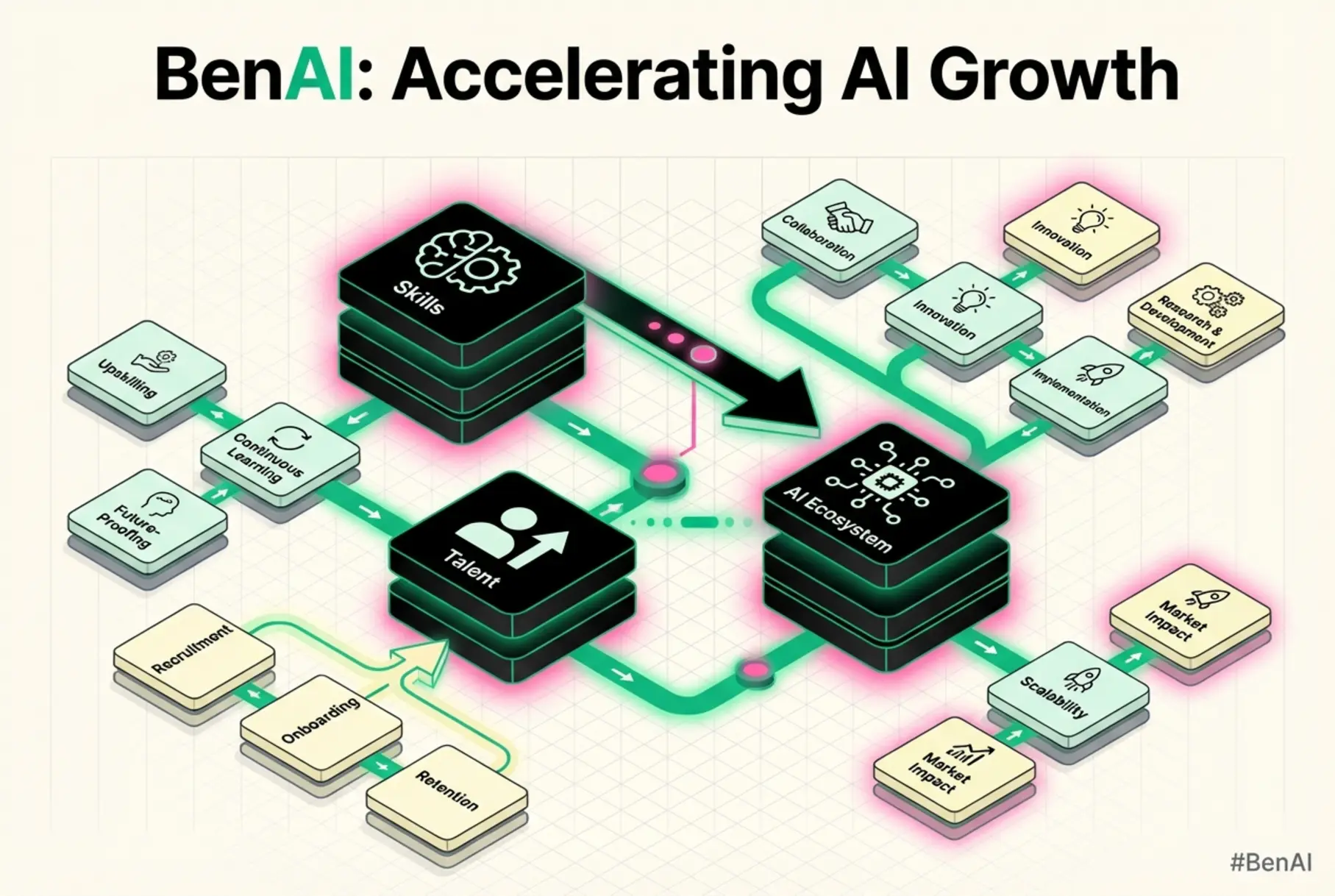

At BenAI, we understand this challenge intimately. We’ve seen firsthand how a lack of scalable infrastructure, proper model monitoring, or clear governance can derail even the most promising AI initiatives. This guide isn't about theoretical concepts; it's a practical blueprint designed to help you confidently navigate your MLOps journey, transforming your ambition into sustained, operational success.

The MLOps Imperative: Why Scaling AI Demands a New Approach

Think of MLOps as the factory floor for your AI. Just as modern manufacturing transformed craft production into scalable, repeatable processes, MLOps transforms individual AI experiments into reliable, continuously performing systems. Without it, your AI initiatives risk becoming isolated projects that never deliver their full potential.

The journey from a single model to an enterprise-wide AI ecosystem demands a systematic approach. While 88% of organizations today are experimenting with AI, only about one-third have successfully scaled it across their operations. This "scaling gap" is precisely where MLOps becomes non-negotiable. It's about establishing repeatable processes for:

- Data Management: Ensuring high-quality, versioned data feeds for continuous training.

- Model Development: Reproducible experimentation and robust version control.

- Deployment Automation: Rapid, reliable, and safe model deployment to production.

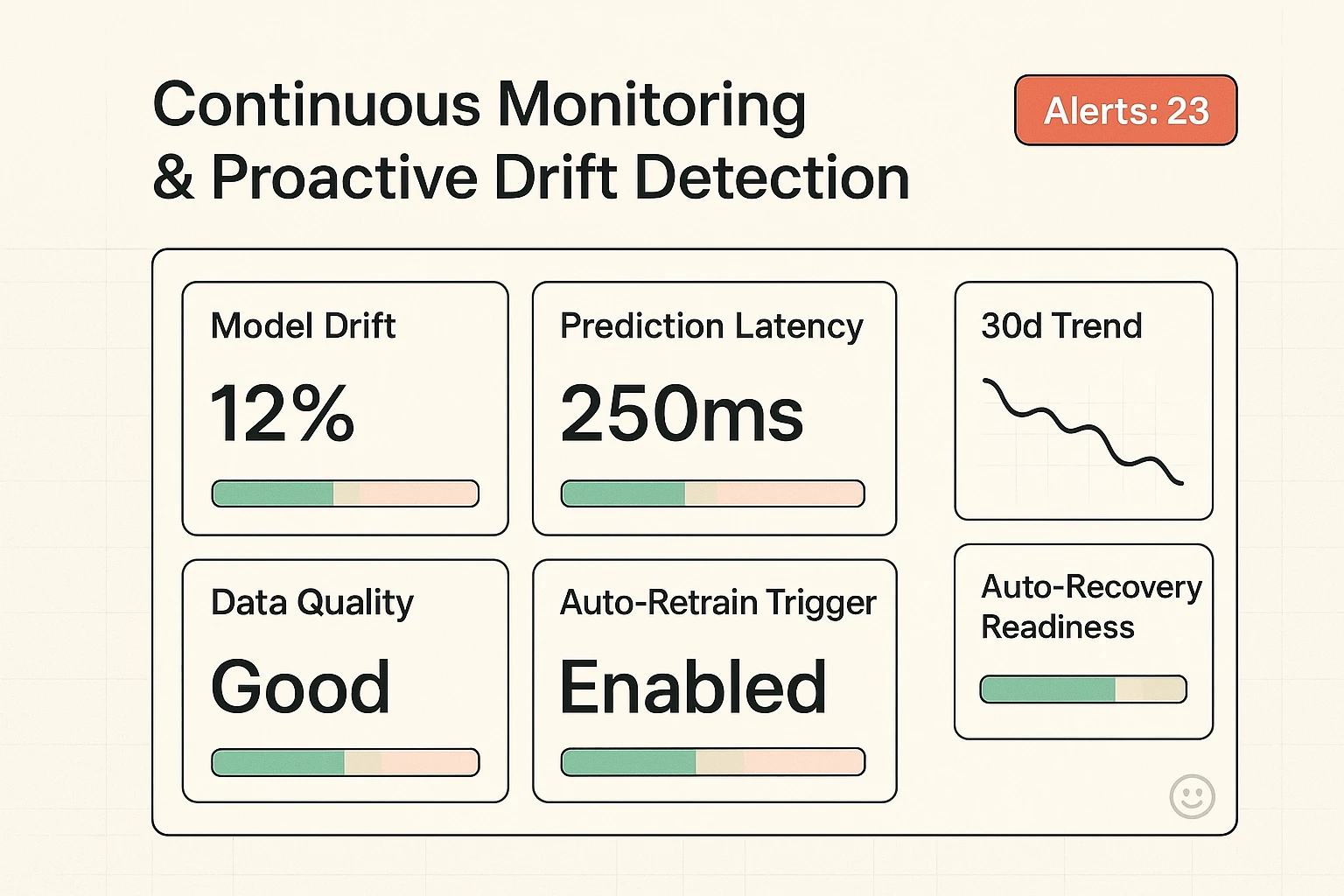

- Continuous Monitoring: Proactive detection and resolution of performance degradation.

- Governance & Compliance: Maintaining ethical, secure, and compliant AI systems.

When implemented effectively, MLOps reduces operational risks, accelerates iteration cycles, and most importantly, ensures your AI investments deliver tangible business value.

Understanding Your MLOps Maturity: Where Do You Stand?

Before you can build, you need to assess your current foundation. MLOps maturity isn't a binary state; it's a spectrum. Understanding where your organization falls helps you identify the most impactful next steps and strategically plan your resource allocation.

Consider these stages common to many organizations navigating the AI scaling journey:

- Level 0: Manual & Ad-hoc. AI projects are typically R&D efforts, often isolated to data scientists’ local environments. Deployment is manual, infrequent, and prone to errors.

- Level 1: Automated Pipeline (Basic CI/CD). Some automation is introduced for model training and deployment, often using traditional DevOps tools. Monitoring is basic and focused on uptime.

- Level 2: Automated MLOps. Dedicated MLOps tools are used, with automated model retraining, versioning, and more sophisticated monitoring. Governance frameworks begin to take shape.

- Level 3: Enterprise-Wide MLOps. A fully integrated MLOps platform provides AI-driven monitoring, automated remediation, and robust governance, with a strong focus on security and cost optimization across thousands of models.

The projects behind that 85% failure rate are most often found in organizations operating at Level 0 or 1. Our goal at BenAI is to help you confidently progress through these stages and turn that failure rate into a success story for your business.

Building Your AI Factory Floor: Key Components of Scalable MLOps

Moving beyond isolated projects requires a holistic view of your AI ecosystem. It's not just about one shiny tool; it's about integrating multiple components into a seamless, automated workflow.

1. Robust Data Foundation: The Lifeblood of AI

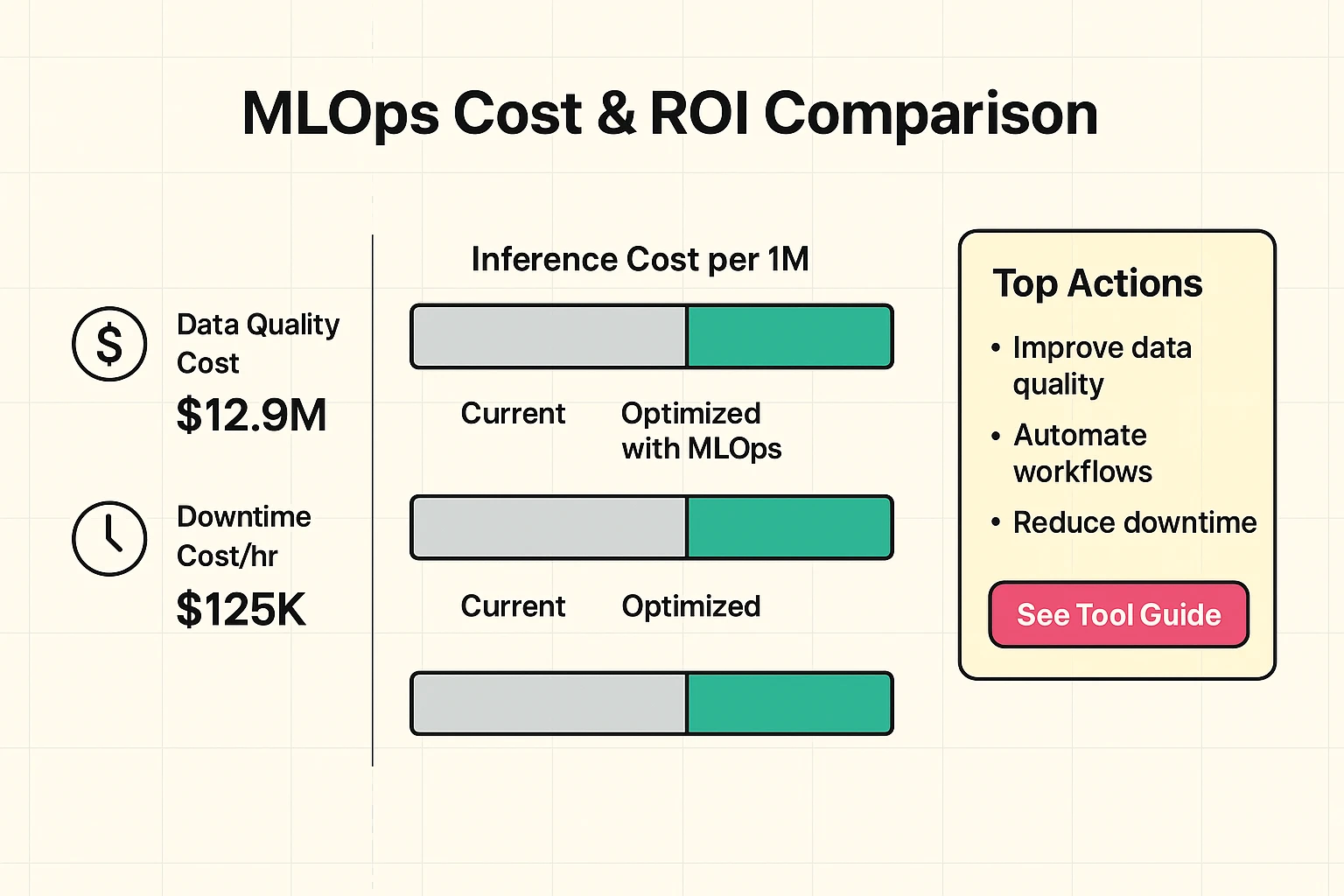

High-quality, continuously flowing data is the bedrock of any successful AI operation. Data scientists and engineers often spend up to 80% of their time cleaning and preparing data. Poor data quality also costs organizations an average of $12.9 million annually. Both problems are magnified at scale.

Your Decision Factors for Data Management:

- Data Versioning: Can you track changes to the data used for training and testing? Tools like DVC or lakeFS offer robust versioning for datasets, ensuring reproducibility.

- Feature Stores: How consistently can features be engineered for both training and inference? A feature store acts as a central repository, promoting consistency and reducing data pipeline duplication.

- Data Validation & Quality Checks: Are automated checks in place to prevent bad data from poisoning your models? Proactive validation ensures data integrity before it impacts model performance.

- Data Lineage: Can you trace the origin and transformations of every piece of data? This is critical for debugging, auditing, and regulatory compliance.
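Here is a minimal Python sketch of a pre-training quality gate that makes automated validation more concrete. The `ColumnRule` schema and the `validate_rows` function are hypothetical names for illustration. They are not the API of any particular validation library. Dedicated tools do far more, but the core idea is the same: declare expectations, then block the batch when they fail.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Optional


@dataclass
class ColumnRule:
    """Expectations for one column (hypothetical schema format)."""
    dtype: type                         # expected Python type of non-null values
    nullable: bool = False              # whether None is allowed
    min_value: Optional[float] = None   # inclusive lower bound, if any
    max_value: Optional[float] = None   # inclusive upper bound, if any


def validate_rows(rows: List[Dict[str, Any]], schema: Dict[str, ColumnRule]) -> List[str]:
    """Return human-readable violations; an empty list means the batch passes."""
    errors: List[str] = []
    for i, row in enumerate(rows):
        for col, rule in schema.items():
            if col not in row:
                errors.append(f"row {i}: missing column '{col}'")
                continue
            value = row[col]
            if value is None:
                if not rule.nullable:
                    errors.append(f"row {i}: '{col}' is null")
                continue
            if not isinstance(value, rule.dtype):
                errors.append(
                    f"row {i}: '{col}' expected {rule.dtype.__name__}, got {type(value).__name__}"
                )
                continue
            if rule.min_value is not None and value < rule.min_value:
                errors.append(f"row {i}: '{col}'={value} below {rule.min_value}")
            if rule.max_value is not None and value > rule.max_value:
                errors.append(f"row {i}: '{col}'={value} above {rule.max_value}")
    return errors


if __name__ == "__main__":
    schema = {
        "age": ColumnRule(int, min_value=0, max_value=120),
        "income": ColumnRule(float, nullable=True, min_value=0.0),
    }
    batch = [{"age": 34, "income": 52000.0}, {"age": -3, "income": None}, {"income": 1.0}]
    problems = validate_rows(batch, schema)
    if problems:
        # In a real pipeline this would fail the job before training starts.
        print("Blocking batch:", problems)
```

The function returns a list of messages rather than raising on the first failure. That way the pipeline can report every problem in a batch at once.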

2. Agile Model Development & Experimentation Management

The core of your AI factory is model creation. For scalable MLOps, this means more than just training a model; it's about managing a continuous cycle of experimentation, versioning, and collaboration.

Your Decision Factors for Model Development:

- Experiment Tracking: How do you log, compare, and reproduce ML experiments? Platforms like MLflow, neptune.ai, or Weights & Biases help data scientists keep track of metrics, parameters, and code across numerous runs.

- Model Registry: Where do you store and manage different versions of your trained models? A centralized model registry ensures discoverability, versioning, and lifecycle management (staging, production, archiving).

- Code Versioning: Is your model code and training scripts under strict version control (e.g., Git)? This ensures reproducibility and collaboration.

- Environment Management: Can you reliably recreate the exact environment (libraries, dependencies) a model was trained in? Tools like Docker or Conda are vital here.
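You can picture a model registry as a versioned catalogue with lifecycle stages. The toy Python registry below is a hypothetical sketch. `ModelRegistry`, `Stage`, and the artifact paths are illustrative names, not the API of MLflow or any other tool. Each version records its code commit and metrics, which is what keeps a model reproducible and traceable later.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List, Optional


class Stage(Enum):
    NONE = "none"
    STAGING = "staging"
    PRODUCTION = "production"
    ARCHIVED = "archived"


@dataclass
class ModelVersion:
    name: str
    version: int
    artifact_uri: str            # location of the serialized model (format is an assumption)
    metrics: Dict[str, float]    # evaluation metrics from the tracked experiment
    git_commit: str              # code version used for training
    stage: Stage = Stage.NONE


@dataclass
class ModelRegistry:
    """Toy in-memory registry; real registries persist this state in a database."""
    versions: Dict[str, List[ModelVersion]] = field(default_factory=dict)

    def register(
        self, name: str, artifact_uri: str, metrics: Dict[str, float], git_commit: str
    ) -> ModelVersion:
        """Add a new version; version numbers start at 1 and increase per model name."""
        history = self.versions.setdefault(name, [])
        mv = ModelVersion(name, len(history) + 1, artifact_uri, metrics, git_commit)
        history.append(mv)
        return mv

    def transition(self, name: str, version: int, stage: Stage) -> None:
        """Move a version to a new stage; promoting to production archives the current one."""
        history = self.versions[name]
        target = history[version - 1]
        if stage is Stage.PRODUCTION:
            for mv in history:
                if mv.stage is Stage.PRODUCTION and mv is not target:
                    mv.stage = Stage.ARCHIVED
        target.stage = stage

    def production(self, name: str) -> Optional[ModelVersion]:
        """Return the version currently serving production traffic, if any."""
        return next(
            (mv for mv in self.versions.get(name, []) if mv.stage is Stage.PRODUCTION), None
        )


if __name__ == "__main__":
    registry = ModelRegistry()
    registry.register("churn", "models/churn/v1", {"auc": 0.81}, "a1b2c3d")  # hypothetical values
    registry.register("churn", "models/churn/v2", {"auc": 0.84}, "e4f5a6b")
    registry.transition("churn", 1, Stage.PRODUCTION)
    registry.transition("churn", 2, Stage.PRODUCTION)  # version 1 is archived automatically
    print(registry.production("churn"))
```

Archived versions stay in the history rather than being deleted. That is what makes a quick rollback to a known-good model possible.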

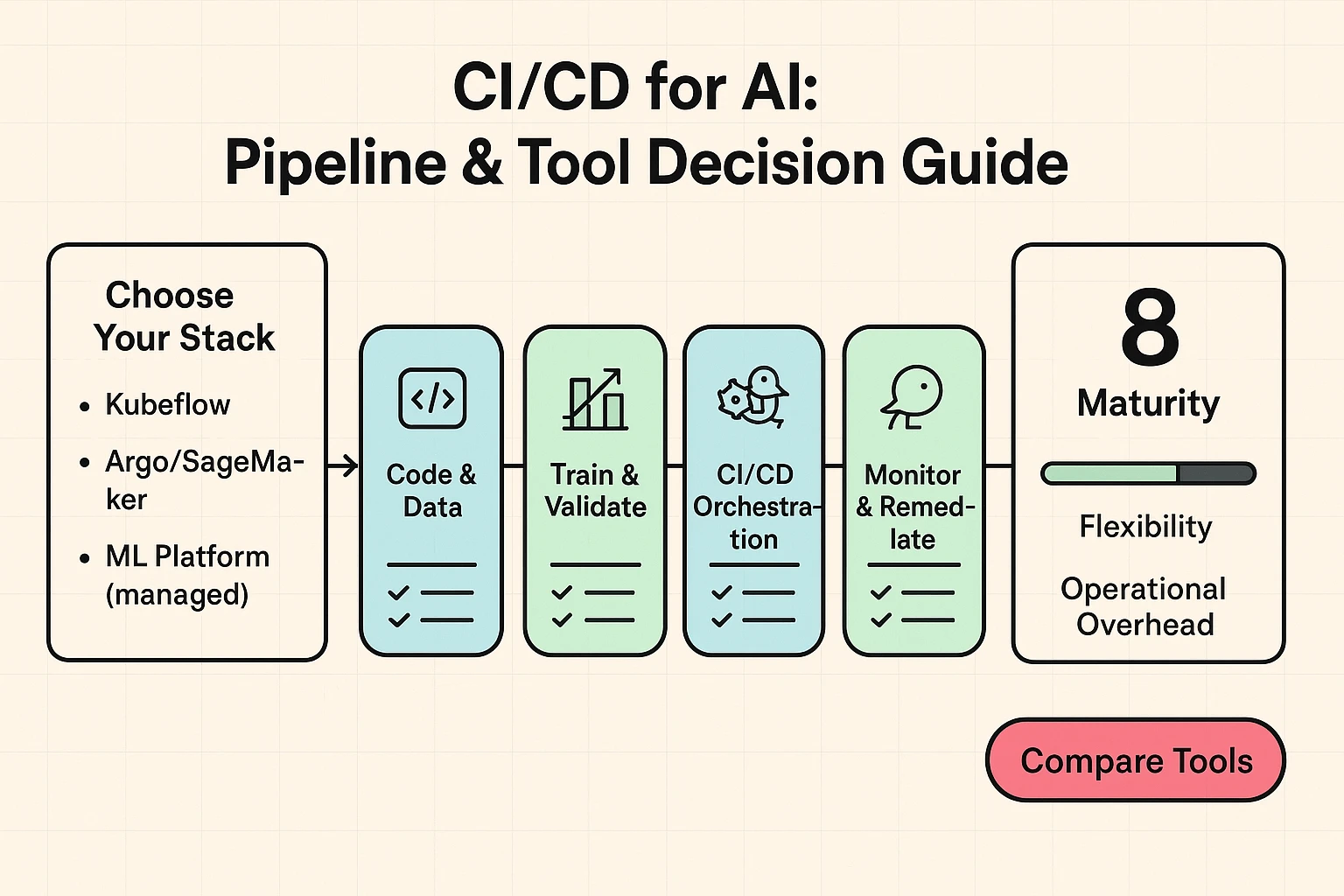

3. Streamlined CI/CD for AI: Automating Deployment from Idea to Impact

This is where the principles of DevOps meet machine learning. Continuous Integration/Continuous Deployment (CI/CD) for AI automates the process of building, testing, and deploying your ML models.

Your Decision Factors for CI/CD:

- ML-Specific CI/CD Tools: Are you using tools tailored for ML pipelines (e.g., Kubeflow Pipelines, Argo Workflows, Azure Machine Learning Pipelines)? These orchestrators understand the unique stages of an ML workflow, from data preparation to model deployment.

- Deployment Strategies: How do you safely roll out new models? Consider strategies like canary deployments, blue/green deployments, or A/B testing to minimize risk.

- Automated Testing: Are your models rigorously tested at every stage of the pipeline? This includes functional tests, integration tests, and performance tests on new data. For specific deep dives into quality control for AI, refer to our guide on AI-driven Quality Control.

- Rollback Capabilities: Can you quickly revert to a previous, stable model version if issues arise? This is a non-negotiable safety net.
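Canary deployment and rollback come down to two small decisions. First, which requests see the new model. Second, when the evidence says to promote or revert it. The Python sketch below uses error rate as the guarding metric and applies hypothetical thresholds: at least 1,000 canary requests, and no more than a 10% relative increase in errors. Real systems usually gate on several metrics and on statistical tests rather than a single comparison.

```python
import hashlib
from dataclasses import dataclass


def routes_to_canary(request_id: str, canary_fraction: float) -> bool:
    """Deterministically send roughly `canary_fraction` of traffic to the new model.

    Hashing the request (or user) ID keeps each caller on the same version
    across retries instead of flipping randomly between models.
    """
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # bucket in 0..9999
    return bucket < canary_fraction * 10_000


@dataclass
class CanaryDecision:
    action: str  # "promote", "rollback", or "continue"
    reason: str


def evaluate_canary(
    baseline_error_rate: float,
    canary_error_rate: float,
    canary_requests: int,
    min_requests: int = 1_000,            # hypothetical minimum sample size
    max_relative_increase: float = 0.10,  # hypothetical tolerance: at most 10% worse
) -> CanaryDecision:
    """Decide whether to keep observing, promote, or roll back the canary model."""
    if canary_requests < min_requests:
        return CanaryDecision("continue", f"only {canary_requests} canary requests so far")
    limit = baseline_error_rate * (1 + max_relative_increase)
    if canary_error_rate > limit:
        return CanaryDecision(
            "rollback",
            f"canary error rate {canary_error_rate:.3f} exceeds limit {limit:.3f}",
        )
    return CanaryDecision("promote", "canary error rate within tolerance")


if __name__ == "__main__":
    decision = evaluate_canary(baseline_error_rate=0.020, canary_error_rate=0.031, canary_requests=5_000)
    print(decision.action, decision.reason)
```

A "rollback" decision would then point the router back at the previous registered model version. This is why earlier versions should stay deployable.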

4. Continuous Model Monitoring & Performance Management

Deployment is not the finish line; it’s the beginning of critical operational oversight. Unplanned system downtime can cost about $125,000 per hour, which is why vigilance matters. This is the stage where you maintain model performance and proactively detect issues like model drift.

Your Decision Factors for Monitoring:

- Data Drift Detection: Is the distribution of your input data changing subtly over time? Tools like Fiddler AI, Datadog (with its ML integration), or Evidently can detect this shift, which is often a precursor to model decay.

- Concept Drift Detection: Is the relationship between your input features and target variable changing? This indicates that the fundamental pattern your model learned is no longer valid.

- Performance Monitoring: Beyond just accuracy, are you tracking business-relevant KPIs, latency, throughput, and resource utilization?

- Explainability & Interpretability: Can you understand why your model made a particular prediction? Tools that offer SHAP, LIME, or other explainability frameworks are crucial for trust and debugging.

- Automated Retraining Triggers: Can your system intelligently trigger retraining when drift or performance degradation exceeds predefined thresholds? This is a key step towards self-healing AI systems.
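One common way to quantify data drift is the Population Stability Index (PSI). PSI bins a feature's values and compares a reference window (for example, the training data) with recent production traffic. It is the sum over bins of (actual share - expected share) * ln(actual share / expected share). The Python sketch below computes PSI with equal-width bins. It then applies a 0.2 cut-off that practitioners often cite as a rule of thumb for triggering retraining. Both the binning scheme and the threshold are assumptions you would tune per feature.

```python
import bisect
import math
from typing import List, Sequence


def psi(reference: Sequence[float], current: Sequence[float], n_bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index between a reference sample and a current sample."""
    lo, hi = min(reference), max(reference)
    if hi == lo:
        raise ValueError("reference sample has zero spread; cannot build bins")
    width = (hi - lo) / n_bins
    edges = [lo + i * width for i in range(n_bins + 1)]  # equal-width bins over the reference range

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * n_bins
        for v in values:
            idx = bisect.bisect_right(edges, v) - 1
            counts[min(max(idx, 0), n_bins - 1)] += 1  # clamp out-of-range values into edge bins
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    expected = proportions(reference)
    actual = proportions(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def should_retrain(psi_value: float, threshold: float = 0.2) -> bool:
    """Rule-of-thumb trigger: PSI at or above ~0.2 is often treated as significant drift."""
    return psi_value >= threshold


if __name__ == "__main__":
    training_ages = [18 + (i % 50) for i in range(1_000)]  # hypothetical reference data
    recent_ages = [40 + (i % 30) for i in range(1_000)]    # hypothetical shifted traffic
    score = psi(training_ages, recent_ages)
    print(f"PSI={score:.2f}, retrain={should_retrain(score)}")
```

PSI only catches shifts in the inputs, not concept drift. Pairing it with delayed ground-truth performance metrics gives a fuller picture before retraining kicks off.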

5. Governance, Security & Cost Optimization

As AI becomes embedded in your business, the considerations around ethics, security, and cost amplify. AI incidents rose 56.4% to 233 cases in 2024, showing the increasing importance of robust ethical and security frameworks.

Your Decision Factors for Governance & Cost:

- Regulatory Compliance: How do you ensure your AI systems comply with regulations like GDPR, HIPAA, or the emerging EU AI Act? MLOps facilitates audit trails and responsible data usage.

- Bias Detection & Mitigation: Are your models fair and unbiased across different demographic groups? Fiddler AI and other tools offer capabilities to assess and mitigate bias.

- Auditing & Traceability: Can you trace every prediction back to the model version, training data, and code used? This is essential for transparent and responsible AI.

- Cost Optimization: Are you dynamically allocating resources to your AI infrastructure? The inference cost of a model performing at GPT-3.5 level dropped more than 280-fold between late 2022 and late 2024. That makes continuous training and monitoring at scale more affordable, but smart resource management remains key.

- Access Control: Who has access to your models, data, and pipelines? Robust Role-Based Access Control (RBAC) is paramount.
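In practice, auditing and traceability largely come down to writing one structured record per prediction. The Python sketch below is hypothetical. Several choices in it are assumptions: the field names, storing a hash of the input rather than the raw payload, and the JSON Lines output. The dataset and commit identifiers would come from whatever versioning tools you already use.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Any, Dict


@dataclass(frozen=True)
class PredictionAuditRecord:
    timestamp: str
    actor: str                  # authenticated caller, to cross-check against RBAC policies
    model_name: str
    model_version: int
    training_data_version: str  # dataset version ID from your data-versioning tool (assumed)
    git_commit: str             # commit of the code that trained the model
    input_sha256: str           # hash instead of raw input, to limit stored personal data
    prediction: float


def hash_payload(payload: Dict[str, Any]) -> str:
    """Stable hash: keys are sorted so field order does not change the digest."""
    canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def audit_line(
    actor: str,
    model_name: str,
    model_version: int,
    training_data_version: str,
    git_commit: str,
    payload: Dict[str, Any],
    prediction: float,
) -> str:
    """Build one JSON Lines entry for an append-only audit log."""
    record = PredictionAuditRecord(
        timestamp=datetime.now(timezone.utc).isoformat(),
        actor=actor,
        model_name=model_name,
        model_version=model_version,
        training_data_version=training_data_version,
        git_commit=git_commit,
        input_sha256=hash_payload(payload),
        prediction=prediction,
    )
    return json.dumps(asdict(record), sort_keys=True)


if __name__ == "__main__":
    # Hypothetical identifiers for illustration only.
    print(audit_line("svc-scoring", "churn", 2, "ds-2024-06-01", "e4f5a6b", {"tenure": 14, "plan": "pro"}, 0.73))
```

With records like this, "which model, data, and code produced this decision?" becomes a log query rather than an investigation.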

Addressing Emerging Trends: LLMOps and Generative AI

The AI landscape is constantly evolving, with Large Language Models (LLMs) and Generative AI taking center stage. While traditional MLOps principles apply, LLMOps introduces unique considerations:

- Prompt Engineering & Versioning: Managing and versioning prompts becomes as critical as managing code.

- Model Alignment & Safety: Ensuring LLMs adhere to desired behaviors and avoid generating harmful content.

- Hallucination Detection: Specific metrics and monitoring for factual inaccuracies generated by LLMs.

- Retrieval Augmented Generation (RAG): Integrating external knowledge bases effectively and managing the lifecycle of these retrieval systems.
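Prompt versioning can borrow directly from code versioning. Treat each prompt template as an immutable artifact identified by a hash of its text, and log that ID with every LLM call. The Python sketch below is hypothetical: the `PromptRegistry` class is illustrative, not a real library. It uses the standard library's `string.Template` for `$variable` substitution.

```python
import hashlib
from dataclasses import dataclass, field
from string import Template
from typing import Dict, List, Optional, Tuple


@dataclass
class PromptRegistry:
    templates: Dict[str, Dict[str, str]] = field(default_factory=dict)  # prompt name -> {version_id: text}
    history: Dict[str, List[str]] = field(default_factory=dict)         # prompt name -> version IDs, oldest first

    def register(self, prompt_name: str, text: str) -> str:
        """Store a template and return its content-derived version ID."""
        version_id = hashlib.sha256(text.encode("utf-8")).hexdigest()[:12]
        versions = self.templates.setdefault(prompt_name, {})
        if version_id not in versions:  # re-registering identical text is a no-op
            versions[version_id] = text
            self.history.setdefault(prompt_name, []).append(version_id)
        return version_id

    def render(
        self, prompt_name: str, variables: Dict[str, str], version_id: Optional[str] = None
    ) -> Tuple[str, str]:
        """Fill in a template (latest version by default); return (version_id, prompt) for logging."""
        vid = version_id or self.history[prompt_name][-1]
        prompt = Template(self.templates[prompt_name][vid]).substitute(variables)
        return vid, prompt


if __name__ == "__main__":
    registry = PromptRegistry()
    registry.register("summarize", "Summarize for $audience: $document")
    registry.register("summarize", "Summarize in three bullets for $audience: $document")
    used_version, prompt = registry.render("summarize", {"audience": "executives", "document": "Q3 report"})
    print(used_version, prompt)
```

The ID is derived from the text itself. Any two environments that register the same prompt therefore agree on its version without coordination, and rolling back just means rendering an earlier ID.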

BenAI helps clients integrate these advanced AI paradigms into their existing MLOps frameworks, ensuring your organization stays at the forefront of AI innovation without compromising robustness or reliability.

The Business Case for MLOps: Justifying Your Investment

Investing in MLOps is not merely a technical undertaking; it's a strategic business decision with significant ROI. For businesses seeking to scale, MLOps enables:

- Accelerated Time-to-Market: Quickly deploy new AI capabilities.

- Reduced Operational Costs: Automate manual tasks and prevent costly downtime.

- Improved Model Performance: Proactively address drift, leading to more accurate predictions and better business outcomes.

- Enhanced Compliance & Trust: Build AI systems that are auditable, fair, and secure, mitigating reputational and regulatory risks.

The initial investment in MLOps pays dividends by turning speculative AI projects into reliable assets that continuously deliver value. For example, BenAI’s offerings can transform your marketing agency’s operations. Our AI marketing solutions apply these MLOps principles to automate content creation pipelines or optimize LinkedIn outreach, so your AI-driven strategies perform consistently and scale effectively. You can learn more about how we scale LinkedIn lead generation with AI automation and how we approach AI LinkedIn campaign optimization.

Choosing Your MLOps Partner: Why BenAI Embodies the Trusted Solution

When evaluating MLOps solutions, you need a partner who understands both the technical intricacies and the strategic business implications. Many providers offer tools, but few offer the comprehensive partnership required to transition your organization into an AI-first entity.

BenAI stands apart with its focus on:

- Custom Implementation: We don't believe in one-size-fits-all. Our solutions are tailored to your unique infrastructure, operational needs, and business objectives.

- End-to-End Expertise: From data strategy to model monitoring and governance, we cover the entire MLOps lifecycle. Our consulting and training ensure your team is equipped for long-term success.

- Practical, Proven Systems: We focus on adopting proven AI systems that drive growth, automate tasks, and create capacity without increasing headcount. Our approach is grounded in real-world results.

- Continuous Guidance: MLOps is an ongoing journey. We provide the support, community, and expert advice you need to adapt to emerging trends and optimize your AI operations over time.

"BenAI is an absolute game-changer. They helped us integrate custom AI solutions that dramatically improved our operational efficiency," says Aman Saxena, a client at WISE. Ross Sedawie praises BenAI’s ability to unlock the potential of AI, stating that they "truly help businesses become AI-first." These are the outcomes you can expect when partnering with a team dedicated to your scalable AI success.

Frequently Asked Questions (FAQ)

What is MLOps, and why is it essential for scaling AI?

MLOps (Machine Learning Operations) is a set of practices that automates and standardizes the entire machine learning lifecycle, from data collection to model deployment and monitoring. It's essential for scaling AI because it ensures reproducibility, reliability, and continuous performance of ML models in production, reducing the high failure rate of AI projects.

How does MLOps differ from traditional DevOps?

While MLOps builds upon DevOps principles (CI/CD, automation, monitoring), it has unique complexities due to the nature of machine learning. MLOps adds considerations like data versioning, feature stores, experiment tracking, model registry, dealing with data and concept drift, and managing explainability and bias in models. Its focus isn't just code and infrastructure, but also data and models.

My organization is just starting with AI. Is MLOps relevant for us?

Absolutely. Even at early stages, establishing MLOps principles helps prevent future scaling headaches. Starting with a basic framework for data versioning, experiment tracking, and automated deployment will save significant time and resources as your AI initiatives grow. Think of it as building a strong foundation from the start.

What are the biggest challenges in implementing MLOps at scale?

Key challenges include managing complex data pipelines, ensuring data quality, preventing model drift, integrating disparate MLOps tools, building a culture of collaboration between data scientists and engineers, and ensuring robust governance and security. These challenges are amplified as the number of models and pipelines increases.

How can BenAI help my business with MLOps?

BenAI provides tailored AI implementations, training, and consulting to help your business adopt MLOps the "right way." We offer custom solutions for marketing and recruiting agencies and comprehensive enterprise solutions, guiding you through infrastructure setup, pipeline automation, custom monitoring, and strategic planning to ensure your AI operations are scalable, reliable, and cost-effective.

Your Roadmap to AI Scalability, Reliability, and ROI

The path to becoming an AI-first organization is a journey, not a destination. It requires strategic planning, robust tooling, and expert guidance. The statistics are clear: the MLOps market is booming, but the majority of AI projects still fail to reach production. This gap represents both a significant risk and an enormous opportunity.

By embracing MLOps, your organization can avoid the pitfalls many face and instead build a future where AI delivers consistent, measurable value. BenAI is here to ensure that your AI initiatives don't just succeed in isolation but thrive at scale, transforming your business into a true AI powerhouse.

Ready to move your AI from experimental pilots to production-grade operations? Reach out to BenAI today for a consultation. Your AI-first business starts here.

