Imagine an AI system making a critical decision: a medical diagnosis, a loan approval, or even flagging a citizen for surveillance. Now imagine that decision is flawed, biased, or leads to unintended harm. The consequences? Potentially catastrophic. In our rush towards AI-driven efficiency, the critical question isn't whether AI will make mistakes, but how we design systems to detect, correct, and prevent those mistakes, especially the ethical ones.

This is where Human-in-the-Loop (HITL) AI steps in, not just as a technical fix, but as a fundamental pillar of ethical strategy. It’s about more than just accuracy; it’s about aligning powerful algorithms with human values, ensuring accountability, and building trust in an increasingly automated world. For businesses looking to truly lead in the AI-first era, understanding and strategically implementing ethical HITL isn't optional – it's a strategic imperative.

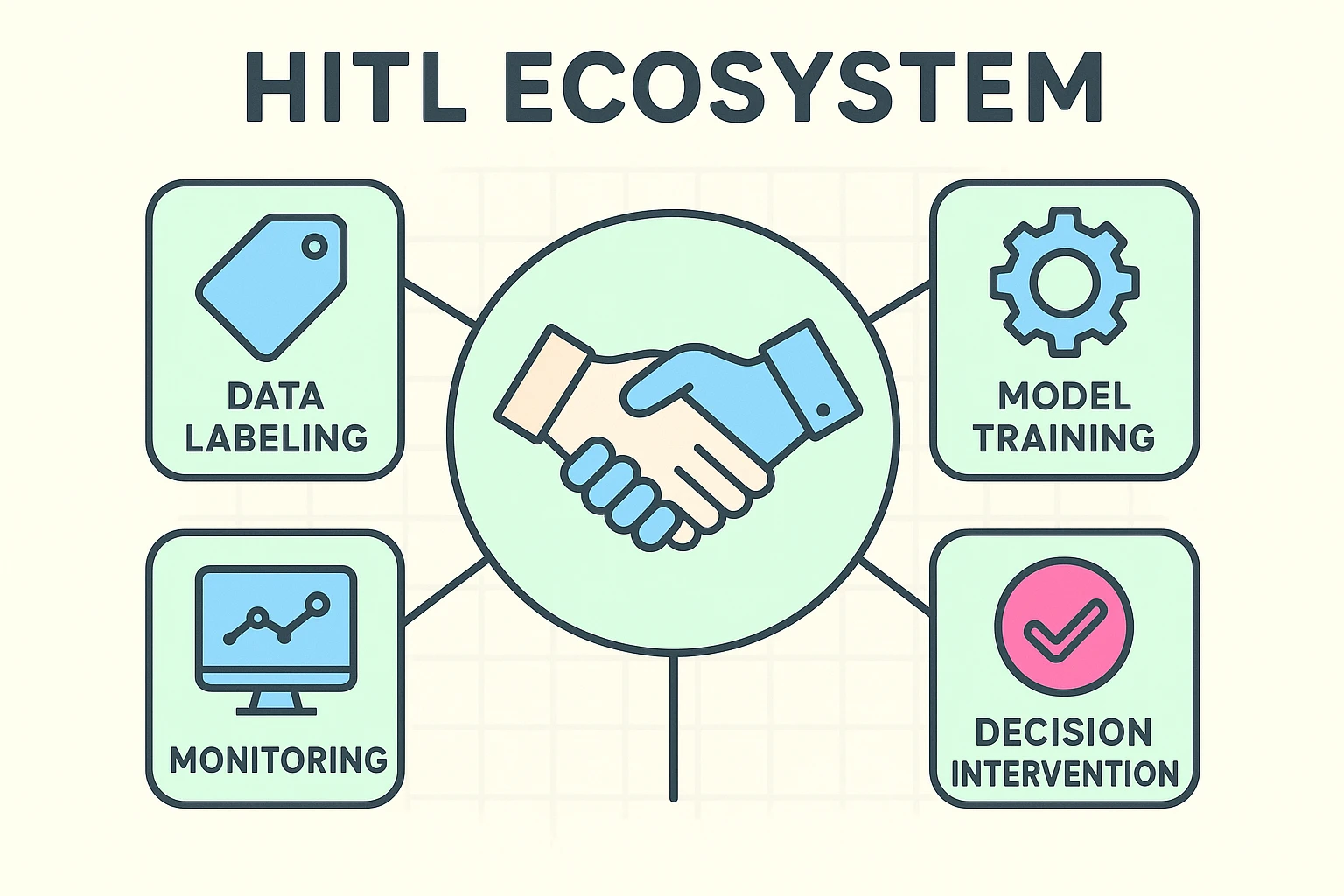

An at-a-glance HITL ecosystem: the central human–AI collaboration and four core intervention roles (data, training, decision points, monitoring) to guide ethical design.

Beyond the Buzzword: What is Human-in-the-Loop AI?

You've likely heard the term "Human-in-the-Loop AI" (HITL), often in discussions about improving AI accuracy or validating data. But what does it truly mean, especially in an ethical context? At its core, HITL refers to a system design where human intelligence is integrated into an AI system's learning or decision-making process. This isn't about replacing AI but about augmenting it, creating a symbiotic relationship that leverages the strengths of both.

Think of it as a quality control process for your AI, but with a conscience. Humans contribute at various stages:

- Data Labeling: Teaching AI by annotating data accurately and making sure the training data is diverse and ethically sourced.

- Model Training & Validation: Reviewing AI outputs during development to identify errors, biases, and areas where the AI deviates from desired ethical norms.

- Decision Intervention: Overseeing critical AI decisions in real-time, providing an override or second opinion before the AI's output is finalized.

- Continuous Monitoring: Post-deployment, humans keep an eye on AI performance, identifying drift, new biases, or unexpected ethical issues.
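To make the labeling and training loop more concrete, here is a minimal Python sketch of one common way to decide which examples humans should label next: least-confidence uncertainty sampling, which routes the predictions the model is least sure about to annotators. The function name, the dictionary-of-probabilities input, and the fixed labeling budget are illustrative assumptions, not part of any specific platform.

```python
def select_for_labeling(predictions: dict[str, list[float]], budget: int) -> list[str]:
    """Least-confidence uncertainty sampling (hypothetical helper).

    predictions: maps an example id to the model's class probabilities
    budget: number of examples human annotators can label this round
    """
    # A low top-class probability means the model is unsure, so a human label
    # for that example is likely to be more informative.
    ranked = sorted(predictions, key=lambda ex_id: max(predictions[ex_id]))
    return ranked[:budget]


preds = {
    "a": [0.95, 0.05],
    "b": [0.55, 0.45],
    "c": [0.70, 0.30],
    "d": [0.51, 0.49],
}
print(select_for_labeling(preds, budget=2))  # ['d', 'b']
```

Spending a limited labeling budget on ambiguous cases is where human judgment is likely to add the most; confident predictions can be spot-checked instead.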

HITL vs. Human-on-the-Loop (HOTL): A Crucial Distinction

While often used interchangeably, HITL and Human-on-the-Loop (HOTL) have distinct ethical implications.

- Human-in-the-Loop (HITL): Humans are actively involved, often making decisions or providing feedback that directly influences the AI's real-time operation or learning. This is about intervention.

- Human-on-the-Loop (HOTL): Humans monitor the AI system but only intervene when predefined thresholds or critical anomalies are detected. This is about oversight.

For ethical strategy, both are vital. HITL allows for proactive shaping and correction, while HOTL provides a crucial safety net. The right balance depends on the AI's application, its potential impact, and the level of risk involved.
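In practice, the HITL/HOTL split often comes down to a routing rule. The sketch below is a hypothetical Python illustration: high-stakes or low-confidence predictions go to a human before they take effect (HITL), while everything else is released and watched through an aggregate metric that alerts a human only when it crosses a threshold (HOTL). The 0.9 confidence cutoff, the 5% error-rate threshold, and all names are placeholder assumptions to be tuned per application.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    HUMAN_REVIEW = "human_review"  # HITL: a person decides before the output takes effect
    AUTO_WITH_MONITORING = "auto"  # HOTL: output is released; humans watch aggregate signals


@dataclass
class Prediction:
    label: str
    confidence: float  # model's probability for `label`, in [0, 1]
    high_stakes: bool  # set by business policy (e.g. loan denial), not by the model


def route(pred: Prediction, min_confidence: float = 0.9) -> Route:
    """HITL gate: high-stakes or low-confidence outputs always go to a human."""
    if pred.high_stakes or pred.confidence < min_confidence:
        return Route.HUMAN_REVIEW
    return Route.AUTO_WITH_MONITORING


def needs_escalation(recent_error_rate: float, threshold: float = 0.05) -> bool:
    """HOTL trigger: alert a human only when a monitored metric crosses a threshold."""
    return recent_error_rate > threshold


print(route(Prediction("approve", 0.97, high_stakes=False)))  # Route.AUTO_WITH_MONITORING
print(route(Prediction("deny", 0.99, high_stakes=True)))      # Route.HUMAN_REVIEW
print(needs_escalation(0.08))                                  # True
```

Note that `high_stakes` overrides confidence: a model that is very sure about a loan denial still gets a human check, which reflects the risk-based balance described above.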

The Ethical Imperative: Why HITL is More Than a "Nice-to-Have"

Many organizations view ethical AI as a future concern or a compliance burden. However, ethical breaches in AI can lead to severe reputational damage, legal penalties, and a profound loss of trust. Here’s why ethical HITL isn't just about avoiding problems, but actively building a more responsible and resilient AI strategy:

1. Mitigating Bias: The Human Antidote to Algorithmic Prejudice

AI systems learn from data. If that data reflects existing societal biases or lacks diverse representation, the AI will internalize and often amplify those biases. Imagine an AI recruitment tool overlooking qualified candidates from certain demographic groups simply because those groups were underrepresented among past hires in its training data. This isn't just unfair; it's a business liability that can narrow your talent pool and cost you opportunities.

Humans in the loop can:

- Audit data sources: Identifying and rectifying unrepresentative or biased datasets before they contaminate the AI model.

- Review biased outcomes: Spotting patterns of unfair or discriminatory decisions by the AI and providing corrective feedback.

- Introduce diverse perspectives: Human reviewers from varied backgrounds can ensure the AI isn't inadvertently biased against certain groups.

This active human involvement matters because ethical AI is not just about using “good data.” It requires continuous scrutiny, especially of the ways AI falls short of producing fair and unbiased outcomes, a challenge also explored in discussions of AI LinkedIn outreach automation.
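Part of a data-source audit can be automated so reviewers know where to look first. This Python sketch compares each group's share of a training set with a reference share (for instance, the applicant pool) and flags groups that fall well below it. The `tolerance` of 0.5, the function name, and the choice of reference distribution are assumptions; a flag is a prompt for human investigation, not a verdict.

```python
from collections import Counter


def representation_gaps(groups, reference_shares, tolerance=0.5):
    """Flag groups whose share of the data is below `tolerance` x their reference share.

    groups: iterable of group labels, one per training record
    reference_shares: dict of group -> expected share (e.g. from the applicant pool)
    Returns a dict of flagged group -> observed share (rounded for readability).
    """
    counts = Counter(groups)
    total = sum(counts.values())
    flagged = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total if total else 0.0
        if observed < tolerance * expected:
            flagged[group] = round(observed, 3)
    return flagged


records = ["A"] * 85 + ["B"] * 10 + ["C"] * 5
print(representation_gaps(records, {"A": 0.5, "B": 0.3, "C": 0.2}))
# {'B': 0.1, 'C': 0.05}
```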

2. Ensuring Accountability and Transparency

When an AI system makes a harmful decision, who is responsible? Without clear human intervention points, accountability can become a murky, finger-pointing exercise. Ethical HITL helps to:

- Establish clear ownership: By designating human intervention points, you assign clear responsibility for decisions made or reviewed by humans. This brings an important human element to issues of liability.

- Improve explainability: Humans can ask why an AI made a particular recommendation and interpret the answer, for example when working with AI-powered content marketing strategies or assessing AI readiness for strategic planning. Explainable AI (XAI) techniques support this process by helping to unpack the "black box" of complex algorithms.

- Build public trust: Knowing that "a human is still checking things" can significantly increase public and user confidence in AI-powered services.

3. Navigating Nuance and Context

AI excels at pattern recognition, but it often struggles with the subtleties of human ethics, unforeseen circumstances, or highly contextual situations. An AI might identify a valid sales lead, but a human may recognize that contacting that person immediately after a public tragedy would be insensitive and damaging to your brand, a judgment that depends on ethical context the model does not see.

In areas like content moderation, healthcare diagnostics, or even AI for quality control, humans bring:

- Emotional intelligence: Understanding the impact of decisions on individuals.

- Common sense reasoning: Applying real-world knowledge that AI might lack.

- Domain expertise: Bringing specialized knowledge to complex edge cases where AI's pre-trained patterns fall short.

Building Ethical Strategy: Intervention Points and Practical Design

So, how do you actually design an ethical HITL strategy? It's not about randomly inserting humans; it's about thoughtful, strategic placement of intervention points across the AI lifecycle.

Key Stages for Ethical Intervention:

- Data Collection & Preparation: This is the bedrock, because biased data leads to biased AI. Humans critically review data sources for diversity, representativeness, and ethical sourcing. This includes preventing the inclusion of personally identifiable information (PII), or ensuring consent for its use, as a critical part of your AI data strategy.

- Model Training & Development: During training, humans validate partial outputs, debug potential biases, and define ethical guardrails. They might label ambiguous cases, ensuring the AI learns a nuanced ethical boundary. Without this iterative human input, an AI could learn and perpetuate harmful stereotypes.

- Deployment & Real-time Decision Making (HITL): For high-stakes applications (e.g., medical diagnoses, loan applications, criminal justice), humans act as gatekeepers, reviewing and potentially overriding AI recommendations. This is where active "Human-in-the-Loop" engagement most often comes into play: it ensures critical decisions align with ethical guidelines and legal requirements, provided reviewers are supported in resisting automation bias (the tendency to accept the machine's output uncritically).

- Post-Deployment Monitoring & Evaluation (HOTL): Once deployed, AI systems need continuous human oversight. Humans monitor performance metrics, flag unexpected behaviors, and identify new ethical risks or emergent biases that an AI might develop over time. This continuous feedback loop is essential for maintaining ethical alignment long-term.

A lifecycle flow highlighting where to insert human checkpoints for ethical oversight, with a flagged deployment checkpoint to show common high-risk moments.
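For the post-deployment (HOTL) stage, one widely used drift signal is the population stability index (PSI), which compares the distribution of a feature or model score in live traffic against a baseline. The Python sketch below assumes both distributions are already bucketed into the same bins; the 0.25 alert threshold is a commonly quoted rule of thumb rather than a standard, and the function names are hypothetical.

```python
import math


def population_stability_index(expected_counts, actual_counts, eps=1e-6):
    """PSI = sum over bins of (actual% - expected%) * ln(actual% / expected%)."""
    if len(expected_counts) != len(actual_counts):
        raise ValueError("both histograms must use the same bins")
    e_total, a_total = sum(expected_counts), sum(actual_counts)
    psi = 0.0
    for e, a in zip(expected_counts, actual_counts):
        # eps guards against log(0) and division by zero for empty bins
        e_share = max(e / e_total, eps)
        a_share = max(a / a_total, eps)
        psi += (a_share - e_share) * math.log(a_share / e_share)
    return psi


def drift_alert(expected_counts, actual_counts, threshold=0.25):
    """HOTL trigger: True when drift is large enough for a human to investigate."""
    return population_stability_index(expected_counts, actual_counts) > threshold


baseline = [50, 30, 20]  # score distribution at validation time
live = [10, 30, 60]      # same bins, this week's traffic
print(round(population_stability_index(baseline, live), 3))  # 1.083
print(drift_alert(baseline, live))                            # True
```

An alert here does not say *why* the inputs shifted; that diagnosis is the human reviewer's job in the monitoring loop.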

Technical Specifications for Ethical HITL Design

Beyond the conceptual framework, there are technical elements crucial for effective ethical HITL:

- Explainable AI (XAI): Humans can't effectively oversee what they don't understand. XAI techniques make AI decisions interpretable, which is vital for transparent oversight: a reviewer needs to see why the AI recommended something before they can make an informed ethical judgment about it.

- User-Friendly Interfaces: HITL systems require intuitive interfaces that present AI outputs clearly, highlight uncertainties, and provide easy mechanisms for human feedback or override. Poor design can lead to fatigue, errors, and an undesirable "rubber-stamping" of AI decisions.

- Robust Logging and Auditing: Every human intervention, AI decision, and feedback loop needs to be meticulously logged. This creates an auditable trail, essential for accountability, debugging, and demonstrating compliance with ethical guidelines.

- Bias Detection Tools: Integrating automated tools that flag potential biases in data or model outputs can assist human reviewers, making their ethical oversight more efficient and comprehensive.

- Feedback Integration Mechanisms: The system must effectively integrate human feedback back into the AI's learning process, ensuring that ethical corrections lead to continuous improvement in the AI model.
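Robust logging and auditing can be made tamper-evident by chaining entries together with hashes, so that a later edit to any record is detectable. The following Python sketch is a minimal in-memory illustration of that idea; the `AuditLog` class, its field names, and the all-zeros starting hash are hypothetical, and a production system would persist entries to durable, access-controlled storage.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so identical content always hashes identically
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only, hash-chained log of AI decisions and human interventions."""

    def __init__(self):
        self.entries = []

    def record(self, case_id, ai_output, reviewer, action, justification):
        body = {
            "case_id": case_id,
            "ai_output": ai_output,
            "reviewer": reviewer,
            "action": action,  # e.g. "approve" or "override"
            "justification": justification,
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; an edited or reordered entry, or a removed
        entry before the last one, makes verification fail."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.record("case-1", "deny", "reviewer-7", "override", "Income verified manually")
log.record("case-2", "approve", "reviewer-7", "approve", "")
print(log.verify())                   # True
log.entries[0]["action"] = "approve"  # simulate tampering
print(log.verify())                   # False
```

Recording the justification alongside each override is what later makes the override-rate analysis meaningful.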

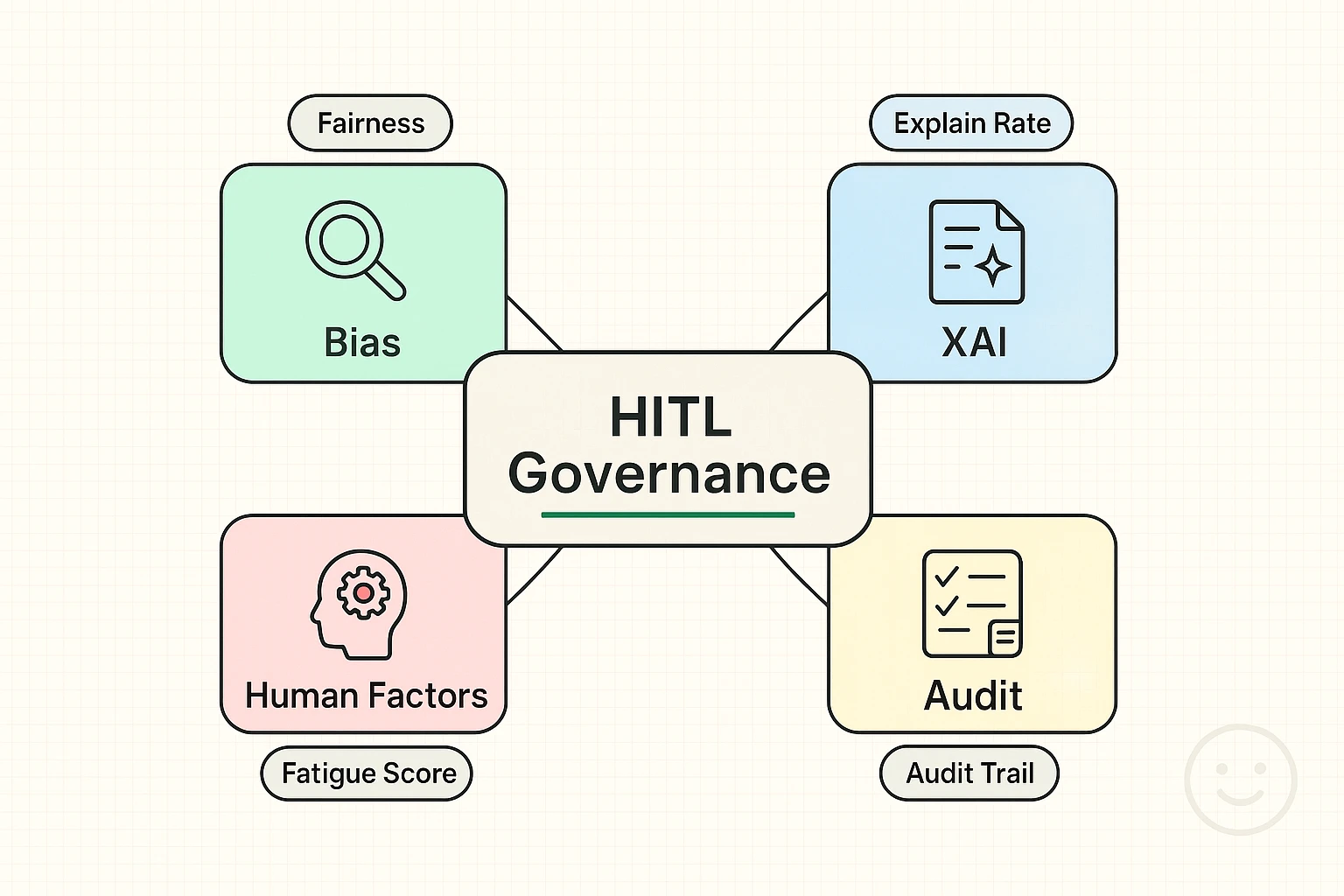

Ethical Governance and Measurement: Beyond Lip Service

Implementing HITL for ethical purposes requires a robust governance framework and clear metrics for success.

A governance framework linking ethical oversight to practical levers—bias detection, explainability, auditing, and human factors—plus example KPIs for measurement.

Human Factors in Oversight: The Double-Edged Sword

While humans are crucial, they are not infallible. Several human factors can inadvertently undermine ethical HITL:

- Cognitive Bias: Humans bring their own biases (e.g., confirmation bias, automation bias) that can influence their review of AI decisions.

- Oversight Fatigue: Reviewing countless AI outputs can be tedious, leading to reduced vigilance and errors.

- Lack of Training: Humans might not have the necessary ethical training or technical understanding to effectively oversee complex AI systems.

Mitigation Strategies:

- Targeted Training: Educate human reviewers on potential AI biases and the specific ethical guidelines they need to uphold.

- Interface Design: Design HITL interfaces to minimize cognitive load, highlight critical alerts, and prevent fatigue.

- Diverse Teams: Ensure human review teams are diverse in background and perspective to counteract individual biases.

- Appropriate Pacing: Don't overload human reviewers; balance efficiency with effective oversight.

Measuring Ethical Performance

How do you know if your ethical HITL strategy is working? You need measurable metrics:

- Bias Reduction Metrics: Track specific demographic disparities in AI outcomes and verify that they shrink over time as a result of human intervention.

- Fairness Scores: Implement and monitor quantitative fairness metrics relevant to your domain, such as demographic parity or equalized odds.

- Human Override Rate (and justification): Analyze how often and why humans override AI decisions. A high override rate might signal problems with the AI, while a very low rate, especially when approvals carry no recorded justification, might point to automation bias, with reviewers rubber-stamping AI outputs.

- Adherence to Ethical Guidelines: Evaluate how often human interventions ensure compliance with internal ethical principles and external regulations.

- Transparency & Explainability Scores: While harder to quantify, assess the clarity of AI explanations for human reviewers and stakeholders.

- User/Stakeholder Feedback: Gather qualitative feedback on the perceived fairness and trustworthiness of your AI systems.
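Two of these metrics are straightforward to compute once decisions are logged. The Python sketch below derives the human override rate (plus the share of overrides that carry a written justification) and the demographic parity difference, a common fairness score defined as the gap between the highest and lowest favorable-outcome rates across groups. The record format and function names are assumptions; choose the fairness metrics that fit your domain.

```python
from collections import defaultdict


def override_stats(reviews):
    """reviews: dicts with 'ai_decision', 'final_decision', and 'justification' keys."""
    overrides = [r for r in reviews if r["final_decision"] != r["ai_decision"]]
    justified = [r for r in overrides if r["justification"].strip()]
    return {
        "override_rate": len(overrides) / len(reviews) if reviews else 0.0,
        # None when there were no overrides, since the share is undefined
        "justified_share": len(justified) / len(overrides) if overrides else None,
    }


def demographic_parity_difference(outcomes):
    """outcomes: non-empty list of (group, favorable) pairs.

    Returns (max favorable rate - min favorable rate, per-group rates).
    0.0 means every group receives favorable outcomes at the same rate.
    """
    favorable, totals = defaultdict(int), defaultdict(int)
    for group, is_favorable in outcomes:
        totals[group] += 1
        favorable[group] += int(is_favorable)
    rates = {g: favorable[g] / totals[g] for g in totals}
    return max(rates.values()) - min(rates.values()), rates


reviews = [
    {"ai_decision": "deny", "final_decision": "approve", "justification": "Documents verified"},
    {"ai_decision": "approve", "final_decision": "approve", "justification": ""},
    {"ai_decision": "deny", "final_decision": "approve", "justification": ""},
    {"ai_decision": "approve", "final_decision": "approve", "justification": ""},
]
print(override_stats(reviews))  # {'override_rate': 0.5, 'justified_share': 0.5}

outcomes = [("A", True)] * 6 + [("A", False)] * 4 + [("B", True)] * 3 + [("B", False)] * 7
gap, rates = demographic_parity_difference(outcomes)
print(round(gap, 2), rates)  # 0.3 {'A': 0.6, 'B': 0.3}
```

Tracking both numbers over time, rather than as one-off snapshots, is what lets you tell whether human intervention is actually reducing disparities.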

Future-Proofing Your Business with Ethical HITL

As AI becomes more pervasive, regulators worldwide are increasingly turning ethical AI guidelines into requirements; the EU AI Act, for example, mandates human oversight for high-risk AI systems. Businesses that proactively embed ethical HITL practices will be far better positioned to comply, innovate, and thrive. This is about more than just avoiding fines; it's about building a sustainable competitive advantage based on trust, responsibility, and innovation.

By consciously embedding human judgment and ethical oversight into your AI strategies, you’re not just creating more accurate systems; you’re building more responsible, equitable, and ultimately, more valuable AI.

Frequently Asked Questions (FAQ) about Ethical Human-in-the-Loop AI

Q1: What's the main difference between Human-in-the-Loop (HITL) and Human-on-the-Loop (HOTL)?

A1: HITL involves humans actively participating in the AI's learning or decision-making process, often providing direct feedback or making modifications in real-time. HOTL involves humans monitoring the AI system and only intervening if something goes wrong or a predefined threshold is crossed. For ethical strategy, HITL focuses on proactive shaping, while HOTL acts as a reactive safety net.

Q2: Can't AI simply learn to be ethical on its own through enough data?

A2: Not necessarily. AI learns from patterns in data, and if historical data contains biases or lacks ethical context, the AI will perpetuate or even amplify those issues. Ethical decision-making often involves nuance, values, and contextual understanding that current AI struggles to grasp independently. Humans are essential to inject and enforce those ethical considerations into the AI's learning and operation.

Q3: Isn't integrating humans into AI processes inefficient and slow?

A3: While it can introduce a bottleneck if poorly designed, ethical HITL isn't about slowing things down unnecessarily. It's about strategically placing human intervention where it matters most—for critical, high-impact, or ethically sensitive decisions. The goal is to maximize the efficiency of AI while ensuring ethical integrity, preventing costly mistakes that could be far more detrimental than a slightly slower process.

Q4: How do we prevent human biases from influencing the AI if humans are in the loop?

A4: This is a key challenge! Mitigating human bias in HITL involves several strategies:

- Training: Educating human reviewers on common cognitive biases and ethical guidelines.

- Diverse Teams: Employing a diverse group of human reviewers to offer varied perspectives.

- Clear Protocols: Establishing strict, transparent protocols for review and feedback.

- Bias Detection Tools: Using AI-powered tools to help humans identify and double-check for potential biases in both data and AI outputs.

Q5: What kind of practical tools or technologies support ethical HITL?

A5: Key technologies and tools include:

- Explainable AI (XAI): To help humans understand why an AI made a decision.

- AI Observability Platforms: For monitoring AI performance and identifying anomalies.

- Data Annotation Platforms: For efficient and structured human feedback on data.

- Automated Bias Detection Software: To flag potential biases for human review.

- Robust Auditing and Logging Systems: To create transparent records of AI decisions and human interventions.

Q6: If an AI makes a wrong decision even with human oversight, who is accountable?

A6: Establishing clear accountability is a cornerstone of ethical HITL governance. Responsibility typically falls on the person designated to oversee or intervene at that particular stage. The specific legal and ethical accountability can vary based on the AI's domain, the applicable regulatory framework, and how clearly the HITL system defines each role, which is why comprehensive AI governance is crucial.

Ready to Architect Your Ethical AI Future?

The journey to becoming an AI-first business is complex, but it doesn't have to be a leap of faith. By strategically integrating human expertise with AI capabilities through ethical Human-in-the-Loop design, you can build systems that are not only powerful and efficient but also fair, transparent, and trustworthy.

Don't let your AI strategy be an afterthought. Explore how tailored AI systems for marketing, recruiting, and broader enterprise solutions can transform your operations responsibly.

