How AI-Driven Analysis Transforms Website Crawlability and Indexation for MOFU Success

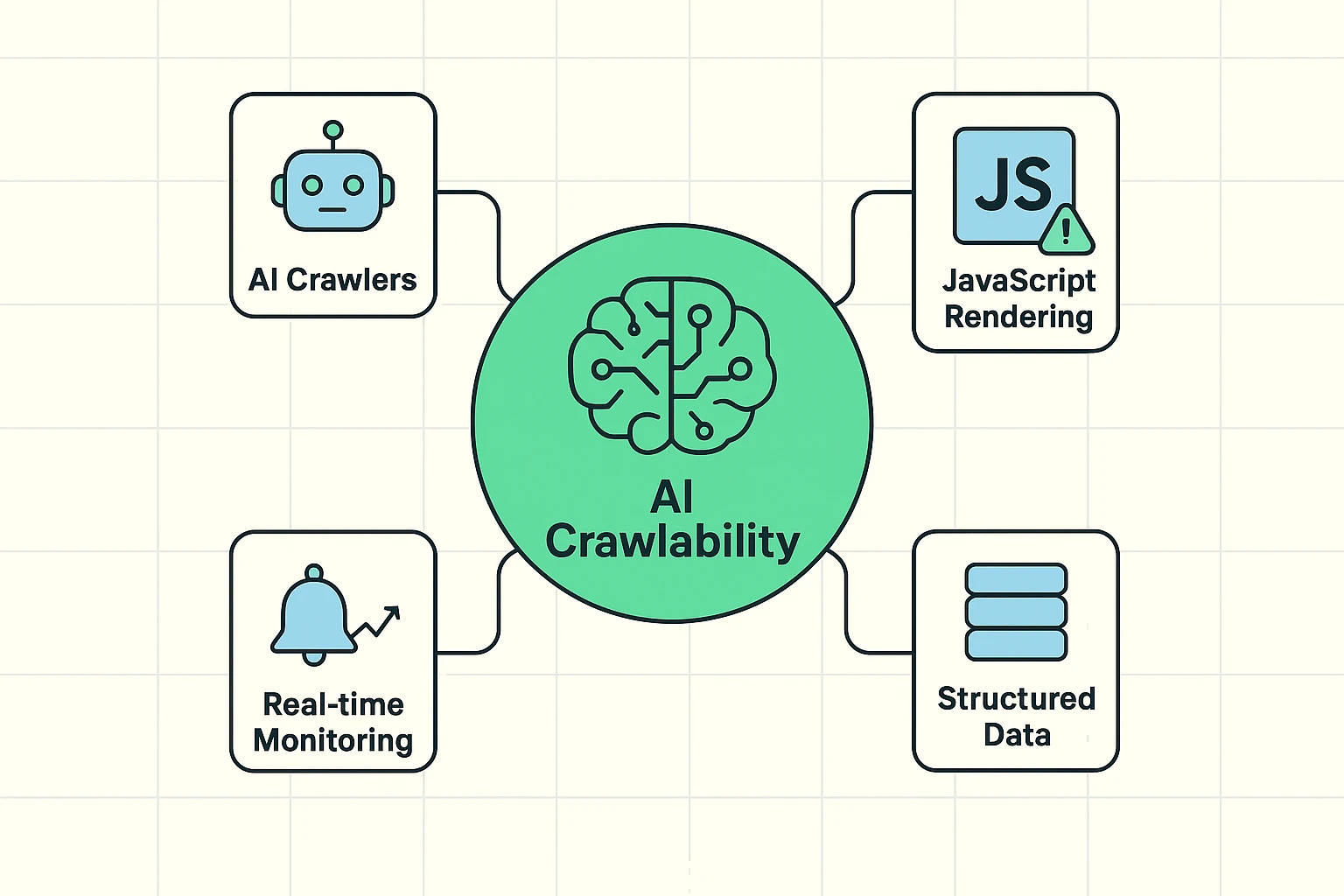

AI-driven analysis changes how websites achieve crawlability and indexation, redefining search engine optimization (SEO) for middle-of-the-funnel (MOFU) decision-makers. This approach moves beyond traditional SEO methods, offering precise insights into how AI crawlers interact with digital content. It emphasizes real-time monitoring, technically optimized content, and robust structured data implementations to ensure rapid and sustained indexation, which is crucial for capturing search visibility and engagement. The search landscape has changed dramatically: according to Search Engine Land data, AI crawler traffic increased 96% year-over-year, and GPTBot’s share rose from 5% to 30% of all crawler activity. That growth necessitates a shift in content strategy and technical execution.

What Distinguishes AI Crawlers from Traditional Bots?

AI crawlers such as GPTBot and PerplexityBot behave differently from traditional search engine bots such as Googlebot, and they call for specialized optimization strategies. These modern crawlers prioritize different elements of a webpage, emphasizing content quality, semantic relationships, and structured data rather than relying solely on hyperlink structures. For instance, AI crawlers often do not render JavaScript (JS) content effectively, which hurts the discoverability of dynamic elements and requires alternative content delivery methods. Their crawl frequency can also be significantly higher than that of traditional bots, so tracking their activity demands real-time monitoring.

How Do AI Crawlers Process JavaScript Content?

AI crawlers, particularly those powering large language models (LLMs), frequently encounter limitations when processing JavaScript-rendered content, leading to potential indexation issues. Unlike advanced traditional crawlers like Googlebot, which execute JavaScript to render full page content, many AI crawlers either do not execute JavaScript at all or possess limited rendering capabilities. This non-rendering often results in AI bots only perceiving the initial HTML, missing dynamic content, interactive elements, or even primary text loaded via JavaScript. Consequently, websites heavily reliant on client-side JavaScript for essential content delivery risk poor visibility within AI-driven search results, making server-side rendering or static HTML alternatives critical for accessibility.
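One way to approximate what a non-rendering crawler perceives is to look only at the text present in the raw HTML response, ignoring anything a script would add later. The following is a minimal sketch using Python's standard library; the sample markup, the phrase list, and the function names are illustrative assumptions, not part of any crawler's actual pipeline.

```python
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collects text nodes, skipping the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0 and data.strip():
            self.chunks.append(data.strip())


def visible_text(html: str) -> str:
    """Return the text available without executing any JavaScript."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


def missing_phrases(html: str, phrases: list[str]) -> list[str]:
    """List the critical phrases that do not appear in the initial HTML."""
    text = visible_text(html).lower()
    return [p for p in phrases if p.lower() not in text]


# Hypothetical pages: one client-side rendered, one server-side rendered.
CSR_HTML = '<html><body><div id="root"></div><script>render("Pricing plans")</script></body></html>'
SSR_HTML = "<html><body><h1>Pricing plans</h1><p>Compare tiers</p></body></html>"

print(missing_phrases(CSR_HTML, ["Pricing plans"]))
print(missing_phrases(SSR_HTML, ["Pricing plans", "Compare tiers"]))
```

Any phrase reported as missing for a page is content that exists only after JavaScript runs, which makes that page a candidate for server-side rendering or a static HTML alternative.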

What are the Differences in Crawl Frequency for AI Bots?

AI bots often crawl websites with substantially higher frequency than traditional crawlers, impacting server resources and highlighting the need for robust infrastructure. While Googlebot adjusts its crawl rate based on site authority and update frequency, AI crawlers, especially those building large language models, may visit pages more aggressively to gather new data points for continuous model training. This elevated crawl frequency means that content updates, new pages, and technical errors are detected more rapidly by AI systems, underscoring the importance of immediate remediation. However, a significant deviation exists: AI crawlers generally do not allow manual re-crawl requests, unlike Googlebot, which responds to submissions through Google Search Console. This limitation forces website owners to rely on continuous site health and technical SEO automation for optimal AI visibility.

Why is Real-Time Monitoring Crucial for AI Crawlability and Indexation?

Real-time monitoring is crucial for ensuring optimal AI crawlability and indexation, as it allows immediate detection and resolution of issues that impede AI bots from accessing and understanding website content. The rapid evolution of AI crawler behavior, coupled with their higher crawl frequency, demands a proactive approach to technical SEO. Implementing real-time monitoring provides immediate alerts for critical issues like server errors, broken links, or changes in content rendering that could negatively impact AI visibility. This immediate feedback loop enables quick fixes, preventing prolonged periods of poor indexation which directly impacts AI-driven search performance.

How Do Real-Time Monitoring Tools Alert to AI Crawler Issues?

Real-time monitoring tools identify and alert website administrators to AI crawler issues by continuously analyzing log files, server responses, and on-page changes, then flagging anomalies. These platforms leverage machine learning algorithms to distinguish between AI bot traffic, such as GPTBot, and traditional crawlers, providing granular insights into their specific interactions with a site. When a tool detects patterns indicative of a crawl error (e.g., a sudden increase in 404 errors for AI bots, or a failure to render critical content), it triggers immediate notifications via email, SMS, or integrated dashboards. This instant alerting system allows teams to address problems such as server overload, JavaScript rendering failures, or unintended robots.txt blocks specific to AI crawlers before they significantly impact indexation and search visibility.
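The log-analysis step can be illustrated with a small script. This sketch swaps the machine-learning classification described above for plain User-Agent substring matching, which is simpler but can be fooled by spoofed headers. It assumes access logs in the common Combined Log Format; the bot token list, the sample lines, and the 20% alert threshold are illustrative assumptions.

```python
import re
from collections import Counter, defaultdict

# Illustrative User-Agent substrings; extend the tuple for other bots.
AI_BOT_TOKENS = ("GPTBot", "PerplexityBot", "ClaudeBot")

# Combined Log Format:
# ip - - [time] "METHOD path proto" status size "referer" "user-agent"
LOG_LINE = re.compile(
    r'^\S+ \S+ \S+ \[[^\]]+\] "\S+ (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<ua>[^"]*)"'
)


def status_counts_by_bot(lines):
    """Count HTTP status codes per AI bot; non-matching lines are ignored."""
    counts = defaultdict(Counter)
    for line in lines:
        m = LOG_LINE.match(line)
        if not m:
            continue
        bot = next((t for t in AI_BOT_TOKENS if t in m["ua"]), None)
        if bot:
            counts[bot][m["status"]] += 1
    return counts


def error_rate(counter):
    """Share of 4xx and 5xx responses among all responses in the counter."""
    total = sum(counter.values())
    errors = sum(n for status, n in counter.items() if status[0] in "45")
    return errors / total if total else 0.0


def bots_over_threshold(lines, threshold=0.2):
    """Names of bots whose error rate exceeds the alert threshold."""
    counts = status_counts_by_bot(lines)
    return sorted(bot for bot, c in counts.items() if error_rate(c) > threshold)


SAMPLE = [
    '203.0.113.5 - - [10/Jan/2025:10:00:00 +0000] "GET /pricing HTTP/1.1" 200 5120 "-" "Mozilla/5.0; compatible; GPTBot/1.0"',
    '203.0.113.5 - - [10/Jan/2025:10:00:01 +0000] "GET /old-page HTTP/1.1" 404 312 "-" "Mozilla/5.0; compatible; GPTBot/1.0"',
    '198.51.100.7 - - [10/Jan/2025:10:00:02 +0000] "GET / HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (compatible; PerplexityBot/1.0)"',
    '192.0.2.9 - - [10/Jan/2025:10:00:03 +0000] "GET / HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]

print(bots_over_threshold(SAMPLE))
```

In a real deployment the list returned by `bots_over_threshold` would feed whatever notification channel the team already uses; that wiring is left out here.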

What Metrics Do AI Crawl Monitoring Tools Prioritize?

AI crawl monitoring tools prioritize metrics that directly influence how AI crawlers perceive and index website content, focusing on accessibility, content integrity, and technical performance. Key metrics include the AI crawler visit rate, which indicates how frequently AI bots access specific pages; JavaScript rendering success rates, measuring how much dynamic content is visible to these bots; and structured data parsing errors, highlighting issues with schema implementation. These tools also track response codes for AI bots, identifying server errors or redirects that hinder crawlability, and content freshness signals, which prompt AI bots to re-evaluate page relevance. By prioritizing these specific indicators, webmasters gain precise insights into their site's compatibility with evolving AI search algorithms, enabling targeted optimization efforts.

How Does Structured Data Enhance AI Visibility and Indexing Success?

Structured data enhances AI visibility and indexing success by providing explicit semantic context that AI crawlers can readily interpret and utilize, thereby improving content understanding and discoverability. According to Search Engine Land, AI-generated content citations align 99.5% with top organic results, emphasizing AI SEO's dependence on strong traditional SEO signals, including structured data. This standardized format, such as Schema.org markups, allows website owners to label specific entities (products, reviews, events) and their attributes (price, rating, date) in a machine-readable way. AI crawlers use this enriched data to build a comprehensive knowledge graph of a website's content, which directly influences how content is presented in AI overviews, featured snippets, and other rich results, increasing its relevance and prominence in AI-driven search experiences.

What Specific Schema Types Benefit AI Crawlers Most?

Specific Schema types significantly benefit AI crawlers by providing direct, unambiguous information about content, which supports better understanding and richer display in AI search results. The most impactful types include Product schema for e-commerce, which details price, availability, and reviews; Article or BlogPosting schema for editorial content, clarifying author, publication date, and topic; and LocalBusiness schema for physical entities, specifying address, operating hours, and services. Additionally, FAQPage and HowTo schema directly answer common user questions and outline procedural steps, making content highly digestible for AI models. By implementing these structured data formats, websites enhance their content's machine readability, enabling AI crawlers to extract precise entities and relationships, thereby improving semantic indexing.
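As a concrete illustration, FAQPage markup is usually embedded as JSON-LD in a script tag. The sketch below builds that markup with Python's standard `json` module; the helper names and the sample question are assumptions made for the example, while the property names (`mainEntity`, `acceptedAnswer`) follow the Schema.org vocabulary.

```python
import json


def faq_jsonld(pairs):
    """Build a Schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


def as_script_tag(data):
    """Serialize to a JSON-LD script tag, escaping '</' so the tag cannot close early."""
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


faq = faq_jsonld([
    ("Can I make AI crawlers re-crawl content?", "Generally not on demand."),
])
print(as_script_tag(faq))
```

Because the markup is emitted as part of the HTML response, it remains readable to crawlers that do not execute JavaScript.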

How Does Structured Data Impact AI-Generated Content Citations?

Structured data critically impacts AI-generated content citations by providing the foundational semantic framework that AI models use to accurately attribute and reference information from websites. When content is marked up with structured data, AI crawlers can more precisely identify key facts, figures, and entities, reducing ambiguity. This precision allows AI-driven search interfaces to cite content from specific, authoritative sources more reliably in their responses or AI Overviews. For example, a Recipe schema ensures an AI correctly identifies ingredients and steps, allowing it to cite that particular recipe with confidence. Without structured data, AI models rely more on natural language processing, which can be prone to misinterpretation or less precise citation, thereby diminishing a website's opportunity for direct attribution and visibility in the evolving AI search era.

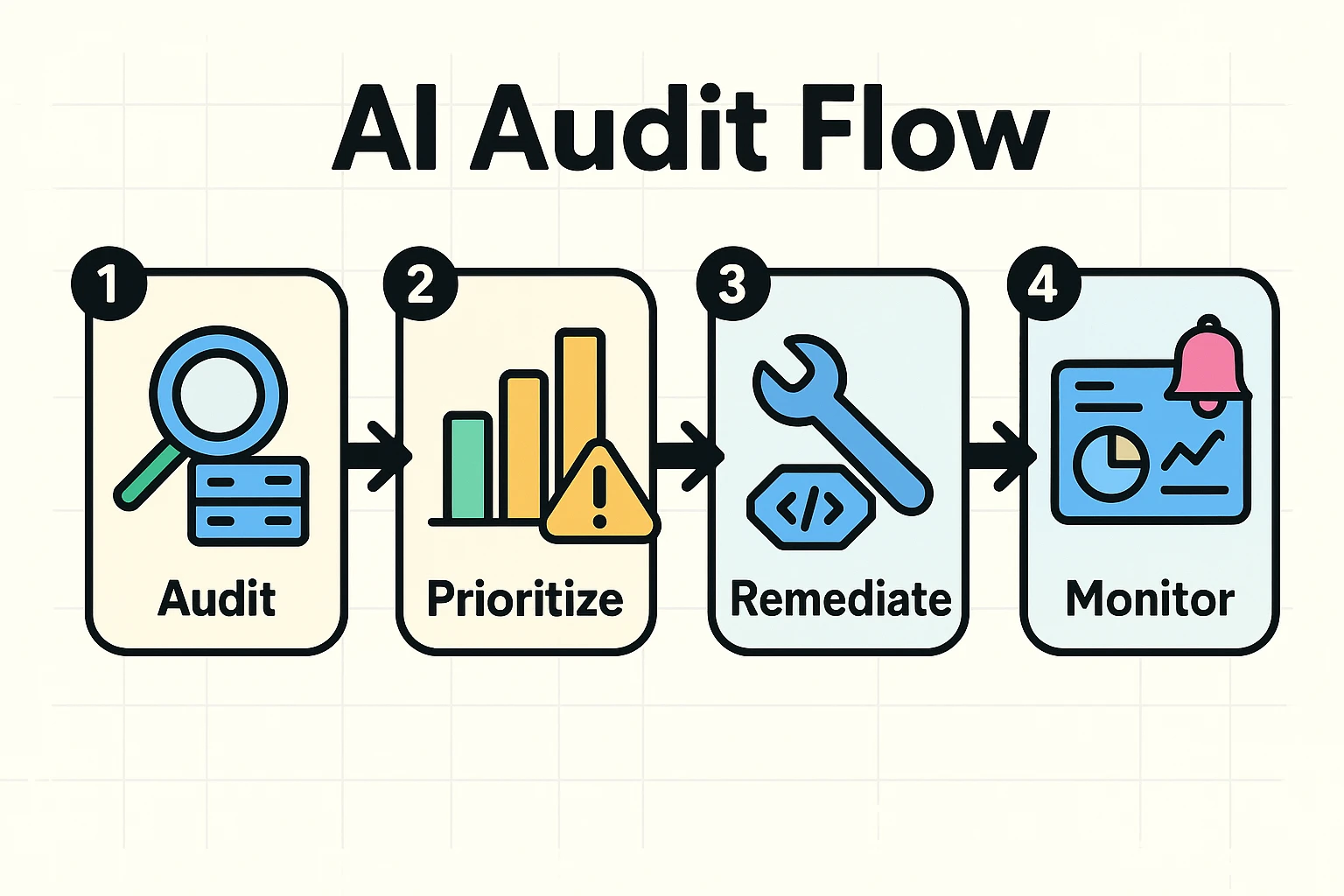

What AI-Powered Audit Solutions Address Crawlability and Indexation Problems?

AI-powered audit solutions address crawlability and indexation problems by using machine learning to perform deep site analysis, identify issues, and prioritize remediation efforts. Platforms like Conductor, Oncrawl, and Hack The SEO integrate AI to go beyond traditional technical SEO checks, offering advanced diagnostics for content quality, site structure, and real-time issue prioritization. These tools assess thousands of data points, including server logs, page performance metrics, and content semantics, providing actionable insights that human analysts might miss. They offer functionalities such as automated content audits, predictive analytics for indexation risk forecasting, and continuous monitoring, allowing businesses to maintain site health as AI crawler behaviors evolve. For example, Oncrawl provides AI-powered diagnostics with defined scoring metrics on content quality pillars, offering tactical advice directly linked to these scores.

How Do AI Audits Identify Content Quality Pillars?

AI audits identify content quality pillars by employing natural language processing (NLP) and machine learning algorithms to analyze the linguistic and structural characteristics of content. These audits assess attributes such as semantic relevance to target topics, keyword optimization, grammatical correctness, readability, and overall comprehensiveness, which are critical for AI crawlers. For instance, Oncrawl's product updates leverage AI assessment of title structure, grammar, and keyword optimization to define content quality scores. The system cross-references content against top-ranking pages and entity graphs to determine topical authority and identify gaps. This allows AI audits to pinpoint specific areas where content can be enhanced to better align with how AI search engines interpret it.

What Predictive Analytics Capabilities Do AI Audit Tools Offer?

AI audit tools offer predictive analytics capabilities that forecast potential indexation risks and opportunities, enabling proactive SEO strategy. These tools analyze historical crawl data, user behavior, and content changes to anticipate how future site updates or algorithm shifts might impact crawlability and indexation. For example, they can predict which pages are most likely to drop from index due to technical issues, or which content improvements could lead to increased AI visibility. By identifying patterns and trends, predictive analytics empower decision-makers to implement preventative measures, prioritize fixes based on estimated business impact, and allocate resources effectively, moving beyond reactive problem-solving to strategic future-proofing. Such insights are integral to AI SEO automation.

Implementing Technical SEO Best Practices for AI-Driven Crawlability

Implementing technical SEO best practices for AI-driven crawlability ensures that websites are structured and optimized to meet the unique demands of AI crawlers, facilitating efficient content discovery and indexation. These practices extend beyond traditional considerations, focusing on elements that directly influence how AI bots process and understand a site. Optimizing robots.txt files to guide AI crawlers, developing clear XML sitemaps to signal important content, and ensuring robust server performance are fundamental. Furthermore, adhering to Core Web Vitals (CWV) not only improves user experience but also signals site quality to AI systems. Effectively managing these technical aspects creates a conducive environment for AI crawlers, reducing barriers to indexation and improving overall visibility in AI-driven search results.

How to Optimize Robots.txt and Sitemaps for AI Crawlers?

Optimizing robots.txt files and XML sitemaps for AI crawlers involves strategic directives that guide bots to valuable content while restricting access to less critical or sensitive areas. For robots.txt, website owners should specify User-agent directives tailored for specific AI bots (e.g., User-agent: GPTBot) to control their crawl behavior, preventing unnecessary resource consumption or access to staging environments. Concurrently, XML sitemaps must be meticulously maintained, including only canonical URLs of high-value content that should be indexed by AI. This ensures AI crawlers efficiently discover and prioritize pages intended for public search visibility, avoiding crawl budget waste on irrelevant or duplicate content. An effective sitemap also includes lastmod tags to signal content freshness, encouraging AI bots to revisit and re-index updated information more frequently.
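The interaction between robots.txt directives and sitemap entries can be checked programmatically. This sketch uses Python's standard `urllib.robotparser` and `xml.etree.ElementTree`; the robots.txt rules, the example.com URLs, and the helper names are hypothetical and only show the shape of such a check.

```python
import xml.etree.ElementTree as ET
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt with a group tailored to GPTBot.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /staging/
Allow: /

User-agent: *
Disallow: /staging/
Disallow: /cart/
"""

# Hypothetical (canonical URL, last-modified date) pairs for the sitemap.
PAGES = [
    ("https://example.com/blog/ai-crawlability", "2025-01-10"),
    ("https://example.com/staging/new-landing", "2025-01-12"),
]


def make_parser(robots_txt):
    """Parse robots.txt text without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def blocked_sitemap_urls(parser, user_agent, pages):
    """Sitemap URLs that robots.txt forbids for the given user agent."""
    return [loc for loc, _ in pages if not parser.can_fetch(user_agent, loc)]


def build_sitemap(pages):
    """Render a minimal XML sitemap with a lastmod value for every URL."""
    urlset = ET.Element("urlset", xmlns="http://www.sitemaps.org/schemas/sitemap/0.9")
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")


parser = make_parser(ROBOTS_TXT)
blocked = blocked_sitemap_urls(parser, "GPTBot", PAGES)
allowed_pages = [p for p in PAGES if p[0] not in blocked]
print(blocked)
print(build_sitemap(allowed_pages))
```

A URL that appears in the sitemap but is disallowed for a bot sends conflicting signals, so the sketch filters such entries out before generating the file.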

What Role Do Core Web Vitals Play in AI Indexation?

Core Web Vitals (CWV) play a significant role in AI indexation by acting as indicators of page experience, which search algorithms integrate into their ranking considerations. The three CWV metrics measure how users perceive the loading, interactivity, and visual stability of a webpage: Largest Contentful Paint (LCP), Interaction to Next Paint (INP), which replaced First Input Delay (FID) as a Core Web Vital in March 2024, and Cumulative Layout Shift (CLS). Pages performing well on CWV demonstrate a high-quality user experience, a factor that AI-driven search models prioritize when determining content relevance and authority. Although AI crawlers do not directly "experience" a page like a human, the underlying technical infrastructure and code quality that produce good CWV scores also contribute to a page's overall crawlability and rendering efficiency, making it easier for AI bots to process and index the content effectively.

Addressing Common Barriers to AI Indexation and Crawlability

Addressing common barriers to AI indexation and crawlability requires a nuanced understanding of how AI crawlers differ from traditional bots and proactive strategies to overcome technical and content-related obstacles. Key challenges include issues with JavaScript (JS) dependency, where dynamic content fails to render for AI bots, and the complexities of balancing gated content discoverability with AI crawl requirements. Without specific interventions, these barriers can severely limit a website's visibility within AI-driven search results, diminishing its competitive edge. Implementing solutions such as server-side rendering for JS content and strategic internal linking for gated assets becomes imperative for comprehensive AI indexation. The Ben AI blog provides further insights into these challenges.

How to Mitigate JavaScript Rendering Limitations for AI Bots?

Mitigating JavaScript rendering limitations for AI bots involves adopting server-side rendering (SSR), static site generation (SSG), or hydration techniques to ensure critical content is available in the initial HTML response. Since many AI crawlers do not execute JavaScript, relying solely on client-side rendering (CSR) means valuable content remains invisible to these bots. SSR executes the page's JavaScript on the server and delivers fully formed HTML, which AI crawlers can parse without running any scripts. SSG generates static HTML files at build time, so the content never depends on client-side rendering. Hydration pairs server-rendered HTML with client-side JavaScript that attaches interactivity after load, preserving initial crawlability. Implementing these solutions ensures that essential text, images, and structured data are accessible to AI crawlers from the first request, significantly improving indexation and visibility in AI-driven search.
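To make the static-generation idea concrete, here is a deliberately small sketch that renders pages to complete HTML at build time using only Python's standard library. Real projects would normally use a framework for this; the template, the `/app.js` script path, and the page data are hypothetical.

```python
from html import escape
from string import Template

# Every page ships its content in the HTML itself; the deferred script
# only adds interactivity afterwards (the hydration step).
PAGE = Template("""<!doctype html>
<html>
<head><title>$title</title></head>
<body>
<h1>$title</h1>
<p>$body</p>
<script src="/app.js" defer></script>
</body>
</html>
""")


def render_static(pages):
    """Map each slug to a fully rendered HTML document."""
    return {
        f"{slug}.html": PAGE.substitute(
            title=escape(page["title"]), body=escape(page["body"])
        )
        for slug, page in pages.items()
    }


site = render_static({
    "pricing": {"title": "Pricing & plans", "body": "Compare tiers side by side."},
})
print(site["pricing.html"])
```

The key property is that the heading and body text exist in the file before any script runs, so a crawler that never executes `/app.js` still receives the full content.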

What Strategies Balance Gated Content with AI Crawl Needs?

Balancing gated content with AI crawl needs involves strategic approaches that ensure valuable, restricted content remains discoverable by AI bots without compromising access control for users. One effective strategy is to provide AI crawlers with a "clean" or ungated version of the content, often through dynamic rendering, where a server detects AI bot user-agents and serves a non-JavaScript, fully rendered HTML version. Another approach involves using robust internal linking from publicly accessible pages to the gated content's landing page, signaling its existence and relevance to AI crawlers, even if the content itself is behind a login. Additionally, leveraging structured data on introductory pages or summaries of gated content can provide AI bots with enough context to understand the value proposition, even if they cannot access the full text. This ensures AI systems recognize the content's existence and thematic relevance, potentially displaying it in search results with appropriate metadata or prompts for user access.

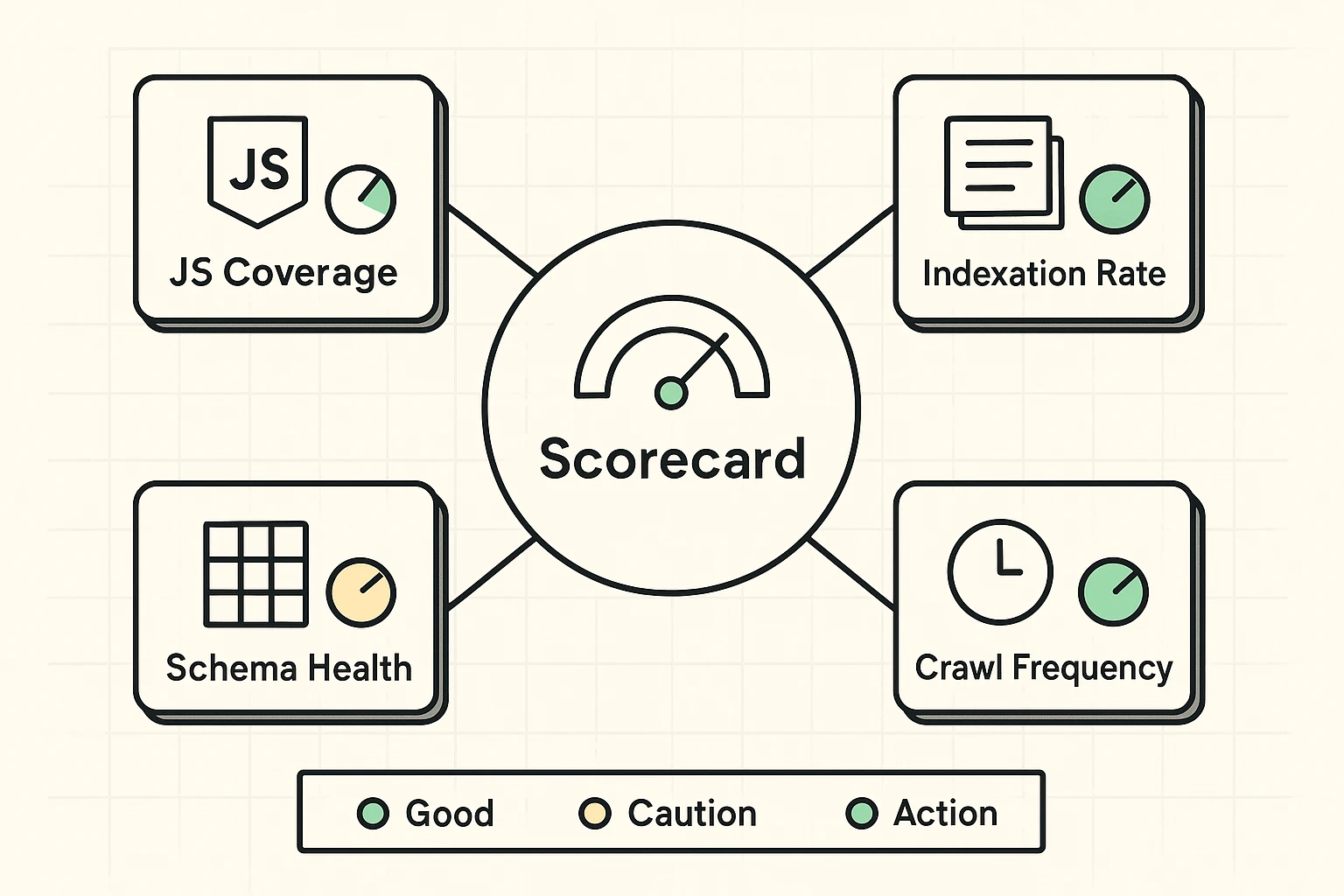

Leveraging an AI Crawlability & Indexation Scorecard for Prioritized Remediation

Leveraging an AI crawlability and indexation scorecard provides a systematic framework for evaluating website health through the lens of AI search algorithms, enabling prioritized remediation efforts. This diagnostic tool aggregates various metrics related to technical SEO, content quality, and AI crawler interactions, presenting them in an easily digestible format. The scorecard translates complex audit outputs into actionable insights, helping decision-makers identify critical issues that impede AI visibility and allocate resources efficiently. By assigning scores or grades to different aspects of crawlability (e.g., JavaScript rendering, structured data implementation, server response times), businesses can benchmark their performance, track improvements, and focus on fixes that yield the highest impact on AI-driven organic performance. This approach moves beyond generic SEO advice, offering a tailored roadmap for optimizing for the AI search era.

What Metrics Constitute a Comprehensive AI Crawlability Scorecard?

A comprehensive AI crawlability scorecard integrates several key metrics that collectively assess a website's readiness for AI-driven search, focusing on technical performance, content accessibility, and semantic clarity. Essential metrics include the percentage of JavaScript-rendered content successfully parsed by AI bots, the validity and completeness of structured data implementation, and the site's Core Web Vitals scores. The scorecard also evaluates server response times for AI crawlers, identifies robots.txt and noindex directives impacting AI access, and measures internal link equity distribution. Furthermore, it assesses content relevance and topical authority against AI's understanding models, offering a holistic view of how well a site aligns with AI crawler expectations and indexing priorities. These metrics provide a clear, quantifiable assessment of AI indexation health, guiding targeted optimization efforts.
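A scorecard of this kind can be reduced to a weighted average. The sketch below is one possible formulation, not a standard: the metric names, the 0-to-1 scores, and the weights are all illustrative assumptions that a team would replace with its own measurements and priorities.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    name: str
    score: float   # 0.0 (failing) to 1.0 (healthy)
    weight: float  # relative importance; illustrative values only


def overall_score(metrics):
    """Weighted average of metric scores, scaled to 0-100."""
    total_weight = sum(m.weight for m in metrics)
    if total_weight <= 0:
        raise ValueError("at least one metric needs a positive weight")
    return 100 * sum(m.score * m.weight for m in metrics) / total_weight


def weakest(metrics):
    """The metric with the lowest score, i.e. the first candidate for remediation."""
    return min(metrics, key=lambda m: m.score)


SCORECARD = [
    Metric("content_in_initial_html", score=0.5, weight=4),
    Metric("structured_data_validity", score=0.75, weight=2),
    Metric("core_web_vitals", score=1.0, weight=1),
    Metric("ai_bot_success_responses", score=1.0, weight=1),
]

print(overall_score(SCORECARD))
print(weakest(SCORECARD).name)
```

Weighting lets a site that depends heavily on client-side rendering count that metric more than, say, response times, so the single headline number reflects what matters most for that site.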

How to Translate Scorecard Insights into Actionable Remediation Plans?

Translating scorecard insights into actionable remediation plans involves a structured process that prioritizes fixes based on their potential impact on AI crawlability and business goals. First, identify critical issues flagged by the scorecard, such as low JavaScript rendering scores or high structured data errors, which present significant barriers to AI bots. Next, assign a business impact score to each issue, considering factors like traffic potential, conversion rates, and competitive advantage. For example, a JavaScript rendering issue on a high-value product page would receive higher priority than on a rarely visited blog post. Then, develop specific, step-by-step implementation strategies for each prioritized fix, leveraging available AI SEO automation tools or technical SEO teams. Regularly monitor the scorecard after implementing changes to measure effectiveness and iterate on the strategy, ensuring continuous improvement in AI indexation.
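The prioritization step described above can be sketched as a simple ranking. The 1-to-5 scales, the multiplication of severity by business impact, and the example issues are assumptions chosen for illustration; other scoring formulas would work equally well.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Issue:
    page: str
    problem: str
    severity: int         # 1 (minor) to 5 (blocks AI bots entirely)
    business_impact: int  # 1 (rarely visited) to 5 (high-value page)

    @property
    def priority(self) -> int:
        return self.severity * self.business_impact


def remediation_order(issues):
    """Highest priority first; ties are broken alphabetically by page."""
    return sorted(issues, key=lambda i: (-i.priority, i.page))


ISSUES = [
    Issue("/blog/old-post", "JavaScript rendering failure", severity=4, business_impact=1),
    Issue("/products/widget", "JavaScript rendering failure", severity=4, business_impact=5),
    Issue("/pricing", "structured data errors", severity=3, business_impact=4),
]

for issue in remediation_order(ISSUES):
    print(issue.priority, issue.page, issue.problem)
```

With these sample values the same rendering failure ranks first on the product page and last on the blog post, mirroring the example in the paragraph above.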

Frequently Asked Questions

What makes AI crawlers different?

AI crawlers differ significantly from traditional bots primarily in their processing capabilities and interaction patterns, emphasizing content understanding over simple link following. They often prioritize content quality, semantic relationships, and structured data, rather than solely relying on hyperlink structures. For example, AI crawlers, such as GPTBot, often do not render JavaScript content, unlike more advanced traditional crawlers like Googlebot. Furthermore, AI crawlers can have a much higher crawl frequency, rapidly consuming and re-evaluating content. Crucially, they do not allow manual re-crawl requests, forcing webmasters to ensure continuous site health for optimal visibility.

How often do AI crawlers visit?

AI crawlers visit websites with a significantly higher frequency than traditional crawlers, impacting resource consumption and accelerating content indexing for AI models. While Googlebot adjusts its crawl rate based on various factors like site authority and update frequency, AI crawlers, particularly those training large language models, may access pages more aggressively to gather new data points. Search Engine Land reported that AI crawler traffic increased 96% year-over-year, indicating a rapid and frequent engagement with web content. This elevated crawl frequency means that content updates and technical changes are detected and processed by AI systems much faster.

Can I make AI crawlers re-crawl content?

No, you generally cannot directly make AI crawlers re-crawl content on demand, which contrasts with traditional search engines like Google that offer manual re-crawl requests via tools such as Google Search Console. AI crawlers typically operate autonomously, deciding when to revisit pages based on their own algorithms and previous crawl schedules. To encourage AI crawlers to re-index content, website owners must focus on maintaining excellent site health, ensuring content freshness, and signaling updates through accurate XML sitemaps and proper lastmod tags. This approach incentivizes AI bots to re-evaluate and re-index updated information naturally and efficiently.

Which AI SEO tools are best for crawlability?

The best AI SEO tools for crawlability offer comprehensive diagnostics, real-time monitoring, and actionable insights specifically tailored to AI crawler behaviors. Leading platforms include Conductor, Oncrawl, and Hack The SEO, which provide advanced functionalities beyond traditional SEO audits. Conductor excels in educational resources on AI crawler behavior and real-time monitoring. Oncrawl provides AI-powered diagnostics with defined scoring metrics on content quality pillars. Hack The SEO offers in-depth coverage of AI-powered audits detecting indexation problems, alongside automated fixes and predictive analytics. These tools integrate machine learning to identify JavaScript rendering issues, structured data errors, and other technical barriers that impede AI indexation, offering specific recommendations for remediation.

How to balance user experience with AI crawl requirements?

Balancing user experience (UX) with AI crawl requirements involves implementing technical solutions that serve both human visitors and AI bots effectively, avoiding trade-offs between speed, interactivity, and discoverability. Key strategies include utilizing server-side rendering (SSR) or static site generation (SSG) for JavaScript-heavy sites, ensuring that critical content is available in the initial HTML for AI crawlers without sacrificing dynamic UX for users. Prioritize Core Web Vitals, as these metrics simultaneously improve user experience and signal site quality to AI algorithms. Additionally, employ progressive enhancement, delivering basic content first and then layering on interactive elements. Implement structured data to explicitly define content for AI while simultaneously enhancing user engagement through rich snippets. This dual-focus approach ensures both seamless user journeys and optimal AI indexation.

Conclusion

AI-driven analysis of crawlability and indexation represents a pivotal shift in the SEO landscape, demanding a comprehensive, proactive strategy from decision-makers. The distinction between AI crawlers and traditional bots, particularly concerning JavaScript rendering, crawl frequency, and re-crawl capabilities, necessitates specialized tools and optimization techniques. Leveraging AI-powered audit solutions, real-time monitoring, and robust structured data implementation allows organizations to address common barriers to AI indexation effectively. Implementing an AI crawlability scorecard provides a clear framework for prioritizing remediation, ensuring that technical and content enhancements translate into improved visibility and engagement within AI-driven search results. Businesses that adopt these methods can turn the challenges of the evolving AI search era into distinct competitive advantages.
