You’re evaluating AI solutions, and the technical capabilities are impressive. But one challenge looms larger than any other: how do you ensure these powerful tools are deployed responsibly, ethically, and compliantly? It’s not just about what AI can do, but what it should do, and how its actions align with your values and legal obligations. This isn't theoretical in today's landscape; it's a make-or-break consideration for enterprises.

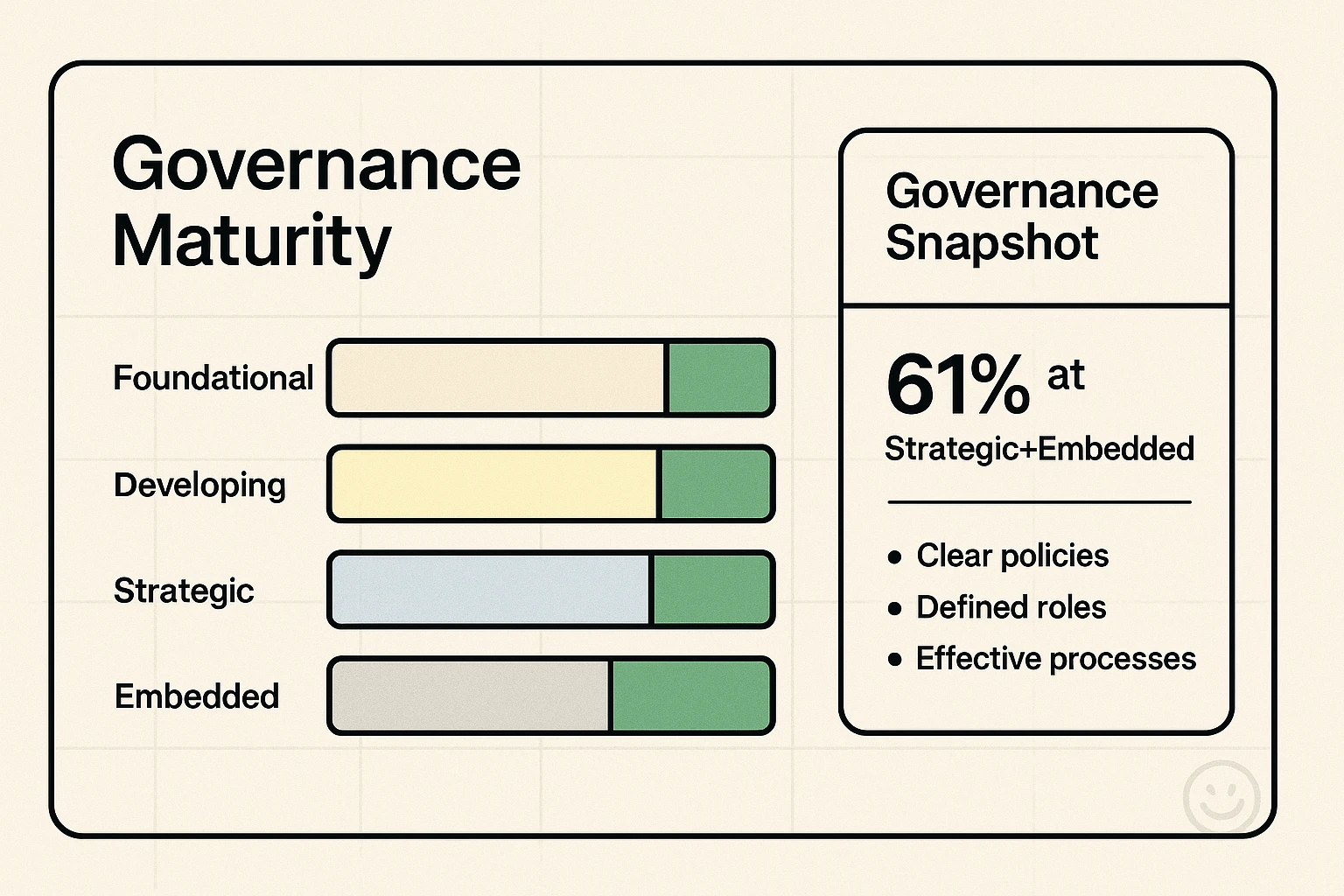

Ignoring ethical AI implementation and governance is no longer an option. The stakes are reputational, financial, and regulatory. Only 61% of organizations are at a strategic or embedded stage of responsible AI, leaving 39% still building foundational policies or in early training phases. This maturity gap shows that a large share of businesses are still grappling with how to operationalize ethical AI. The good news? Closing this gap can turn a potential liability into a significant competitive advantage: 58% of executives report improved ROI and organizational efficiency from responsible AI initiatives.

At BenAI, we understand that true AI transformation isn't just about speed or efficiency; it's about building trust and sustainability. We don't just provide AI solutions; we partner with you to implement them ethically and compliantly, ensuring your AI initiatives are future-proof and aligned with your core values.

The Imperative of Ethical AI: Beyond Compliance, Towards Competitive Advantage

For enterprise leaders, responsible AI isn't simply a buzzword—it's a strategic imperative. The public and experts alike have low confidence in companies to develop AI responsibly (60% of academics, 59% of the public, 55% of experts), underscoring a critical trust deficit. Failing to address these concerns can lead to significant reputational damage and financial liability. Conversely, prioritizing ethical AI can drive substantial organizational benefits.

Decoding Responsible AI Principles for Enterprise Leaders

At its core, responsible AI is built on foundational principles designed to foster trust and minimize harm. We break them down into:

- Fairness: Ensuring AI systems do not perpetuate or amplify discrimination. This means designing algorithms that treat all individuals and groups equitably, avoiding biased outcomes stemming from training data or design choices.

- Accountability: Establishing clear lines of responsibility for AI system outcomes. Who is liable when something goes wrong? This involves defining human oversight mechanisms and robust logging.

- Transparency: Making AI decision-making processes understandable and accessible when necessary. While not every technical detail needs to be open, the rationale behind critical decisions should be explainable.

- Robustness: Designing AI systems to be resilient against adversarial attacks and errors. This ensures reliability and prevents malicious manipulation.

- Privacy: Protecting sensitive user data throughout the AI lifecycle, adhering to strict data protection regulations.

Connecting these principles to tangible business benefits is crucial. Fairness reduces legal risk and expands market reach. Transparency builds customer loyalty and facilitates regulatory approval. Robustness enhances operational stability and reduces costly errors. Privacy ensures compliance and maintains user trust.

Strategic AI Readiness: Benchmarking Your Ethical Foundation

Organizations often struggle with where to begin their ethical AI journey. A comprehensive assessment of your current AI readiness—covering not just technical infrastructure but also governance, culture, and ethical considerations—is the first step.

The image below illustrates how such an assessment can benchmark your organization's responsible AI maturity. It helps you identify where you stand against industry best practices and pinpoints critical areas for improvement, providing a vendor-agnostic view to inform your governance priorities.

This kind of strategic planning involves assessing your AI opportunities while mitigating risks. To properly assess your AI readiness for strategic planning, it’s essential to consider all facets of your operations.

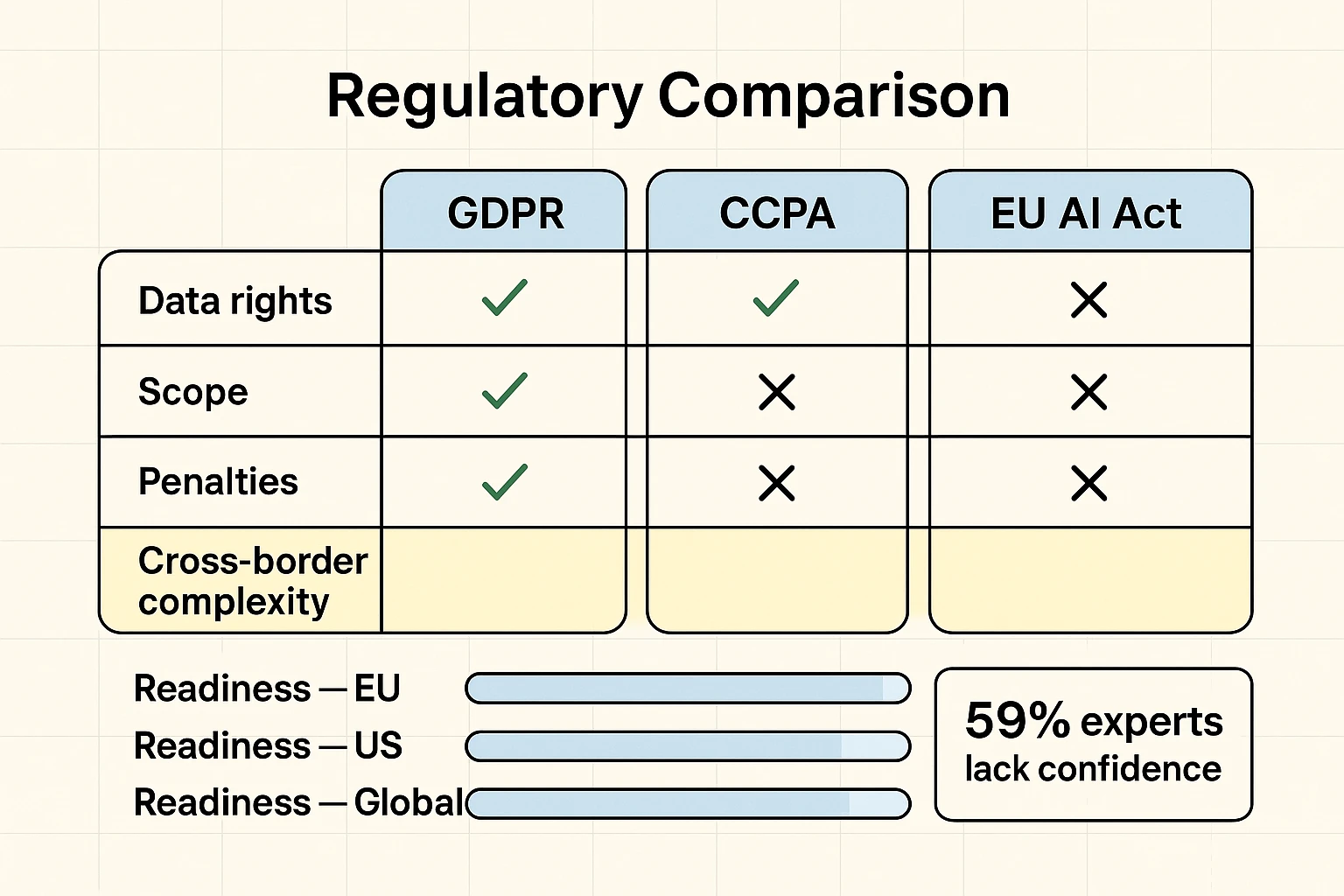

Navigating the Regulatory Labyrinth: GDPR, CCPA, EU AI Act, and Beyond

The regulatory landscape for AI is a complex, ever-evolving challenge, especially for multinational enterprises. Legislative mentions of AI increased by 21.3% across 75 countries since 2023, a nine-fold increase since 2016. In 2024 alone, US federal agencies introduced 59 AI-related regulations. This surge highlights the urgent need for a cohesive, future-proof compliance strategy.

Key Regulations and Their Enterprise Implications:

- GDPR (General Data Protection Regulation): While not exclusive to AI, GDPR's stringent requirements for data protection and consent, together with its rules on automated decision-making (often described as a "right to explanation"), profoundly impact how AI systems handle personal data.

- CCPA (California Consumer Privacy Act) & CPRA: Similar to GDPR, these regulations focus on consumer data rights, impacting how AI-powered applications collect, process, and share data concerning California residents.

- EU AI Act: In force since August 2024, with obligations phasing in over the following years, it is one of the most comprehensive AI regulations globally. It takes a risk-based approach, categorizing AI systems into unacceptable, high-risk, limited-risk, and minimal-risk categories, with varying levels of legal requirements. High-risk systems face strict obligations, including conformity assessments, risk management systems, data governance, and human oversight.

For decision-makers navigating this maze, understanding the nuances and intersections of these regulations is paramount. The following visual helps compare major AI regulations side-by-side, offering a readiness benchmark to prioritize cross-border compliance actions and resource allocation.

Crucially, the challenge often lies in operationalizing these regulations across diverse tech stacks and organizational structures. Many existing resources outline what the regulations are, but few offer vendor-agnostic playbooks for achieving enterprise-wide compliance. This is where BenAI steps in, providing actionable guidance and custom implementations that translate legal mandates into practical business processes.

The Engine of Trust: Implementing Robust AI Governance Frameworks

Translating abstract responsible AI principles into operational processes remains a significant barrier for 50% of executives. Building a robust AI governance framework isn't just about ticking boxes; it’s about creating a living, adaptive system that ensures continuous ethical oversight and responsible innovation.

Key Components of an Effective AI Governance Framework:

- Clear Roles and Responsibilities: Defining who is accountable for what throughout the AI lifecycle—from data scientists and developers to legal teams and executive leadership.

- AI Ethics Committee: Establishing a cross-functional committee (legal, technical, business, ethics) to provide oversight, shape policy, review risky projects, and provide recommendations.

- Risk Management System: Integrating AI-specific risks into your existing enterprise risk management framework, focusing on continuous monitoring and mitigation.

- Data Governance: Implementing strong policies for data collection, storage, usage, and anonymization, ensuring compliance with privacy regulations.

- Auditability and Documentation: Maintaining detailed records of AI system development, training data, performance metrics, and decision-making processes for internal oversight and external audits.
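
To make the auditability component more concrete, here is a minimal sketch of a structured decision record written as one JSON line per decision. The field names, the `credit-scoring` model, and the reviewer ID are hypothetical and chosen only for illustration; a real schema would follow your own governance and legal requirements.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class DecisionRecord:
    """One auditable AI decision. All field names are illustrative."""
    model_id: str
    model_version: str
    input_digest: str              # hash of the inputs, not the raw data
    output: str
    human_reviewer: Optional[str]  # who signed off, if anyone
    timestamp_utc: str


def digest(payload: dict) -> str:
    """Stable SHA-256 digest of a JSON-serializable payload."""
    canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def make_record(model_id, model_version, inputs, output, human_reviewer=None):
    """Build a record for a single decision, stamped with the current UTC time."""
    return DecisionRecord(
        model_id=model_id,
        model_version=model_version,
        input_digest=digest(inputs),
        output=output,
        human_reviewer=human_reviewer,
        timestamp_utc=datetime.now(timezone.utc).isoformat(),
    )


def to_log_line(record: DecisionRecord) -> str:
    """Serialize a record as one JSON line for an append-only audit log."""
    return json.dumps(asdict(record), sort_keys=True)


# Hypothetical example decision
record = make_record(
    "credit-scoring", "1.4.2", {"income": 52000, "debt": 8000},
    output="refer_to_human", human_reviewer="analyst-17",
)
log_line = to_log_line(record)
```

Logging a digest instead of the raw inputs is one way to keep personal data out of the audit trail while still letting an auditor confirm which inputs produced a decision. Whether that is sufficient depends on your privacy obligations.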

A practical committee template and risk snapshot, like the one below, can significantly aid governance teams in operationalizing AI ethics and linking recommendations directly to product decisions.

This structured approach moves beyond theoretical discussions, giving your governance team the tools to actively manage AI ethics.

Mitigating Bias & Ensuring Explainability (XAI) in Practice

Two of the most pressing technical challenges in ethical AI are bias mitigation and explainable AI (XAI). While many acknowledge the presence of bias, real-world examples showing how large companies successfully quantify and reduce it are scarce. Similarly, the concept of XAI is often theoretical, lacking practical implementation details for complex black-box models.

Advanced Strategies for Bias Mitigation:

- Data-Centric Approaches:

  - Bias Detection: Implementing sophisticated tools to identify statistical biases, under-representation, or harmful correlations within training datasets (e.g., historical data perpetuating stereotypes).

  - Data Remediation: Techniques like re-sampling, re-weighting, and data augmentation to balance skewed datasets and reduce bias before model training.

- Model-Centric Approaches:

  - Algorithmic Fairness Constraints: Incorporating fairness as an optimization objective during model training, ensuring equitable outcomes across different demographic groups.

  - Adversarial Debiasing: Utilizing adversarial networks to 'force' models to ignore sensitive attributes during prediction.

- Post-Deployment Monitoring: Continuous auditing of model outputs to detect emergent biases that may arise from shifts in real-world data or unforeseen interactions.
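
As a rough illustration of the bias detection and re-weighting ideas above, the sketch below measures a demographic parity gap on a toy dataset and then derives per-record weights in the style of the well-known reweighing technique (weight = P(group) × P(label) / P(group, label)). The data, group labels, and function names are assumptions made for this example and do not come from any specific tool.

```python
from collections import Counter


def selection_rates(records):
    """Share of positive outcomes per group; records are (group, label) pairs."""
    totals = Counter(group for group, _ in records)
    positives = Counter(group for group, label in records if label == 1)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(records):
    """Largest difference in positive-outcome rate between any two groups."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())


def reweighing_weights(records):
    """Weight per (group, label) pair: P(group) * P(label) / P(group, label).

    Combinations that are rarer than independence would predict get
    weights above 1, so they count for more during training.
    """
    n = len(records)
    group_counts = Counter(group for group, _ in records)
    label_counts = Counter(label for _, label in records)
    pair_counts = Counter(records)
    return {
        (group, label): (group_counts[group] * label_counts[label])
        / (n * pair_counts[(group, label)])
        for (group, label) in pair_counts
    }


def weighted_selection_rates(records, weights):
    """Positive-outcome rate per group after applying the weights."""
    total, positive = Counter(), Counter()
    for group, label in records:
        w = weights[(group, label)]
        total[group] += w
        if label == 1:
            positive[group] += w
    return {group: positive[group] / total[group] for group in total}


# Hypothetical historical outcomes: group A approved 60%, group B approved 20%
data = [("A", 1)] * 6 + [("A", 0)] * 4 + [("B", 1)] * 2 + [("B", 0)] * 8

gap_before = demographic_parity_gap(data)
weights = reweighing_weights(data)
rates_after = weighted_selection_rates(data, weights)
```

On this toy data the weighted approval rates for both groups come out equal. Real datasets rarely balance this cleanly, and demographic parity is only one of several fairness definitions, so the right metric is a governance decision rather than a purely technical one.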

Achieving Explainable AI (XAI) That Resonates:

XAI isn't just for data scientists; it's about providing insights that business users, legal teams, and regulatory bodies can understand.

- Local Explainability: Understanding why a model made a specific prediction for a single instance (e.g., using LIME or SHAP values).

- Global Explainability: Understanding the overall behavior of a model and how different features influence its decisions.

- Counterfactual Explanations: Describing the smallest change to an input that would alter the model's prediction, offering insights into decision boundaries.

- Visualizations and Simplifications: Presenting complex model insights through intuitive dashboards and simplified narratives that highlight key drivers without overwhelming technical detail.
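
To show what local and counterfactual explanations can look like in the simplest case, here is a minimal sketch using a toy linear scoring model. The weights, feature names, and baseline applicant are invented for illustration. For complex black-box models, tools such as LIME or SHAP approximate this kind of attribution rather than reading it directly off the weights.

```python
def score(weights, bias, x):
    """Linear score; this toy model approves when the score is >= 0."""
    return bias + sum(w * x[name] for name, w in weights.items())


def local_contributions(weights, x, baseline):
    """How much each feature moves this score relative to a baseline input."""
    return {name: w * (x[name] - baseline[name]) for name, w in weights.items()}


def single_feature_counterfactuals(weights, bias, x):
    """Change needed in each feature alone to reach the decision boundary.

    Other features are held fixed; features with zero weight are skipped
    because changing them can never alter the decision.
    """
    s = score(weights, bias, x)
    return {name: -s / w for name, w in weights.items() if w != 0}


# Hypothetical model and applicant (units are arbitrary)
WEIGHTS = {"income": 0.5, "debt": -1.0}
BIAS = -1.0
applicant = {"income": 3.0, "debt": 1.0}
baseline = {"income": 4.0, "debt": 0.5}  # e.g. a typical approved applicant

applicant_score = score(WEIGHTS, BIAS, applicant)  # negative, so declined
contributions = local_contributions(WEIGHTS, applicant, baseline)
deltas = single_feature_counterfactuals(WEIGHTS, BIAS, applicant)
smallest_change = min(deltas, key=lambda name: abs(deltas[name]))
```

Here `deltas` says the applicant would reach the approval boundary by raising income by 1.0 or lowering debt by 0.5, which is the kind of statement a business or legal reader can act on. Comparing raw magnitudes across features assumes they share a scale, so in practice features would be normalized first.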

The interactive evaluation below illustrates a practical, operational workflow for detecting, mitigating, and monitoring bias while measuring explainability. This improves trust and reduces risk, offering a clear guide for technical and operational teams.

This holistic approach to bias and XAI goes beyond theoretical understanding, providing concrete methods for your teams to implement and monitor. For more on AI strategy, including how to structure your AI content, you might find our insights on AI automation content structuring helpful.

AI Risk Assessment: A Proactive Enterprise Approach

AI introduces new vectors of risk—reputational, operational, legal, and ethical—that demand a proactive assessment strategy. While frameworks like the NIST AI RMF provide a robust foundation, enterprises need granular, industry-specific methodologies and tools for continuous monitoring.

Methodologies for Identifying and Prioritizing AI-Related Risks:

- Threat Modeling for AI: Adapting traditional cybersecurity threat modeling to identify potential misuse or vulnerabilities specific to AI systems (e.g., data poisoning attacks, model inversion attacks).

- Impact Assessments: Conducting thorough assessments for each AI project to understand its potential positive and negative impacts on individuals, groups, and the organization.

- Scenario Planning: Developing "what-if" scenarios to anticipate emergent AI risks, particularly with rapidly evolving generative AI capabilities (e.g., deepfakes, AI-driven disinformation).

- Continuous Monitoring: Implementing automated systems to track model performance, data drift, and unexpected behaviors that could indicate new or escalating risks.
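
The continuous monitoring step can be sketched with a simple data drift check. The example below uses the Population Stability Index (PSI), one common drift statistic, to compare live model scores against a reference sample. The bin edges, sample values, and the 0.25 alert threshold are assumptions for illustration; the threshold is a widely quoted rule of thumb, not a standard.

```python
import math


def bin_shares(values, edges, eps=1e-6):
    """Fraction of values in each bin defined by ascending edges.

    Empty bins are floored at eps so the logarithm below stays defined.
    """
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[sum(v >= e for e in edges)] += 1  # index of the bin holding v
    return [max(c / len(values), eps) for c in counts]


def psi(reference, live, edges):
    """Population Stability Index between a reference sample and live data."""
    ref = bin_shares(reference, edges)
    cur = bin_shares(live, edges)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


EDGES = [0.25, 0.5, 0.75]
ALERT_THRESHOLD = 0.25  # assumed rule of thumb; tune per use case

# Hypothetical model scores
training_scores = [0.1, 0.3, 0.6, 0.9] * 25
stable_scores = [0.2, 0.4, 0.7, 0.8] * 25   # same distribution across bins
shifted_scores = [0.6, 0.7, 0.8, 0.9] * 25  # mass moved to the upper bins

stable_psi = psi(training_scores, stable_scores, EDGES)
shifted_psi = psi(training_scores, shifted_scores, EDGES)
drift_alert = shifted_psi > ALERT_THRESHOLD
```

A check like this would typically run on a schedule and feed the risk register, so that a drift alert triggers a human review rather than an automatic model change.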

Identifying and proactively assessing these risks is crucial. For instance, in an AI-driven environment, even seemingly innocuous content can lead to unforeseen risks, making a robust content governance strategy essential. You may want to explore how to update underperforming articles efficiently with AI to mitigate content risks.

Building an Ethical AI Culture: Beyond Compliance

True ethical AI implementation extends beyond technical solutions and regulatory compliance; it requires cultivating an organizational culture where "Trust by Design" is paramount. This means embedding ethical considerations into every stage of the AI lifecycle and fostering a workforce that is AI-literate and ethically aware.

Strategies for Fostering an Ethical AI Culture:

- Leadership Commitment: Ethical AI must be championed from the top, demonstrating a clear organizational commitment to responsible practices.

- Comprehensive Training and Education: Providing ongoing training for all employees—from executives to developers—on AI ethics, bias, privacy, and responsible use cases.

- Cross-Functional Collaboration: Breaking down silos to ensure legal, ethical, technical, and business teams work together to address AI challenges.

- Whistleblower Mechanisms: Establishing safe and clear channels for employees to raise ethical concerns about AI projects without fear of retribution.

- Transparency in External Communications: Clearly communicating your ethical AI commitments and practices to customers and stakeholders, building long-term trust.

An ethical AI culture transforms compliance into a competitive differentiator, enabling you to innovate responsibly and build lasting relationships with your customers and the broader society.

The BenAI Advantage: Your Trusted Partner in Ethical AI Transformation

At BenAI, we do more than just implement AI; we ensure you implement it right. Our approach is rooted in practical, actionable guidance, addressing the operationalization challenges that larger, more theoretical competitors often miss. We provide:

- Operational Focus: Unlike many who explain what ethical AI is, we concentrate on how to implement it for large enterprises, with actionable steps, frameworks, and templates.

- Vendor-Agnostic Guidance: Our solutions are independent of specific AI platforms, making them relevant to your diverse technology stack.

- Regulatory Interoperability: We offer clear strategies for navigating complex, conflicting global AI regulations, a critical need for international businesses.

- Emergent Risk Preparedness: We proactively address the rapidly evolving landscape of AI risks, including the unique challenges of generative AI.

- Holistic Approach: We integrate technical, legal, and cultural aspects of ethical AI, recognizing that successful implementation requires cross-functional alignment.

Whether you're looking for custom AI implementations, training, and consulting or tailored solutions for marketing and recruiting, we guide you through the complexities, transforming your business into an AI-first, ethically sound enterprise.

Frequently Asked Questions about Ethical AI Implementation & Governance

Q1: Why is ethical AI implementation important beyond just legal compliance?

While compliance is crucial to avoid fines and legal issues, ethical AI goes further by building trust with customers, employees, and stakeholders. Unethical AI can lead to significant reputational damage, customer churn, and decreased employee morale. Moreover, 58% of executives report improved ROI and organizational efficiency from responsible AI initiatives, and 55% report enhanced customer experience and innovation, making it a clear competitive advantage.

Q2: How do global regulations like GDPR, CCPA, and the EU AI Act impact AI development in enterprises?

These regulations impose strict requirements on data privacy, consent, and transparency in AI systems. The EU AI Act, in particular, takes a risk-based approach, categorizing AI systems and imposing corresponding obligations on high-risk applications. For multinational enterprises, navigating the intersection of these laws requires a sophisticated and proactive compliance strategy to ensure that AI systems meet diverse legal standards across different jurisdictions.

Q3: What are the biggest challenges enterprises face in operationalizing ethical AI principles?

The primary challenge, cited by 50% of executives, is translating abstract responsible AI principles into concrete operational processes. This includes integrating ethical considerations into the AI development lifecycle, establishing clear accountability, managing and mitigating algorithmic bias, ensuring explainability (XAI), and fostering a culture of ethical AI across diverse teams.

Q4: How can an AI Ethics Committee help my organization?

An AI Ethics Committee serves as a critical oversight body, bringing together cross-functional expertise (legal, technical, business, ethics). It helps define mandates, review high-risk AI projects, establish ethical guidelines, and provide recommendations that link directly to product development and policy changes. This ensures that ethical considerations are embedded throughout your AI initiatives, fostering trust and reducing risk.

Q5: What role does BenAI play in helping businesses with ethical AI implementation?

BenAI acts as your trusted partner, offering practical, actionable guidance and custom implementations to bridge the gap between theoretical ethical principles and real-world operational challenges. We provide vendor-agnostic solutions for building robust AI governance frameworks, navigating complex regulations, mitigating bias, and ensuring explainability. Our goal is to transform your business into an AI-first entity that is both innovative and ethically sound.
