Facing a sudden dip in organic search traffic can feel like a punch to the gut—especially when your business relies on that digital lifeline. The immediate questions race through your mind: Is it an algorithm change? A technical glitch? Did a competitor just outmaneuver you? Or is it something deeper, a shift in user behavior you haven't accounted for?

At BenAI, we understand this anxiety. We work with businesses and agencies daily to navigate the complexities of search engine performance. The reality is that erratic organic traffic has become the "new normal" since early 2024: publishers saw a median drop of 10% in 2025, and some large brands have lost as much as 40-80% of their organic traffic. This isn't just a technical issue; it's a business challenge that demands a methodical, data-driven diagnostic approach.

You're evaluating solutions, and you need more than just general advice. You need a trusted partner who can provide clear frameworks, deep insights, and actionable strategies to not only diagnose these declines but also to build resilience for the future. This guide will walk you through the precise steps to diagnose and interpret organic traffic dips, highlighting how to differentiate between various types of loss, leverage your analytics tools effectively, and move from panic to proactive recovery.

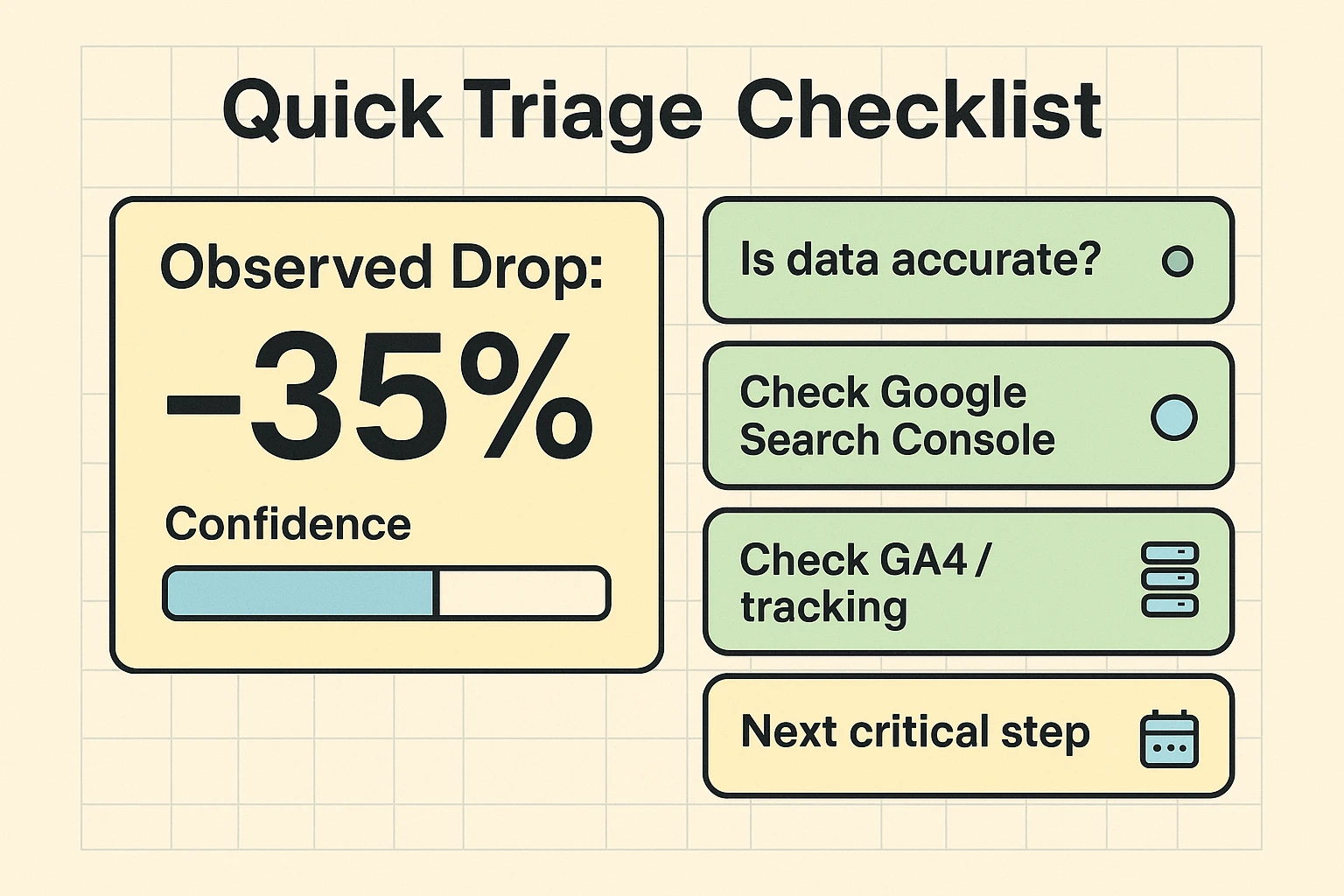

Phase 1: Initial Assessment & Data Validation – Don't Panic, Verify

Before diving into complex SEO audits, the very first step is to ensure you're working with accurate data and to rule out simple, often overlooked issues. This initial assessment is crucial for avoiding wild goose chases and provides a foundational understanding of the problem's scope.

Is Your Data Accurate? The First Line of Defense

Imagine spending hours analyzing a supposed traffic drop, only to realize your analytics tracking was broken. It happens more often than you think. Your diagnostic journey begins by questioning your data's integrity.

Verify Tracking Setup:

- Google Analytics 4 (GA4): Confirm your GA4 property is correctly implemented across all pages. Check for duplicate installations or missing code.

- Google Search Console (GSC): Ensure your GSC property is verified for all relevant versions of your domain (e.g., HTTP, HTTPS, www, non-www) and that data is actively flowing. Look for any manual actions or security issues reported directly by Google.
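To check for missing or duplicate tags at scale, a small script can scan the raw HTML of your key pages. The sketch below is a minimal example. It assumes GA4 is installed with the standard gtag.js snippet directly in the page source. Tags deployed through Google Tag Manager won't appear in the raw HTML, so check those in GTM or Tag Assistant instead. The function name ga4_tag_status and its status labels are hypothetical.

```python
import re
from collections import Counter

# Matches gtag('config', 'G-...') calls from the standard gtag.js snippet.
# Assumption: GA4 is hard-coded in the page, not injected by Google Tag Manager.
CONFIG_CALL = re.compile(r"""gtag\(\s*['"]config['"]\s*,\s*['"](G-[A-Z0-9]+)['"]""")


def ga4_tag_status(html: str) -> str:
    """Classify one page's raw HTML as 'missing', 'duplicate',
    'multiple properties', or 'ok' based on its GA4 config calls."""
    counts = Counter(CONFIG_CALL.findall(html))
    if not counts:
        return "missing"
    if len(counts) > 1:
        return "multiple properties"  # may be intentional; confirm before removing
    if max(counts.values()) > 1:
        return "duplicate"  # same measurement ID configured more than once
    return "ok"


snippet = "<script>gtag('config', 'G-TEST123');</script>"
print(ga4_tag_status(snippet))          # ok
print(ga4_tag_status(snippet * 2))      # duplicate
print(ga4_tag_status("<html></html>"))  # missing
```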

Check for Configuration Changes:

- Have any filters been applied in GA4 recently that might be skewing data?

- Are there any new exclusions, such as unwanted-referral rules or internal-traffic definitions, that could be removing sessions?

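- Has your site's canonical domain changed, for example through new redirects between www and non-www or HTTP and HTTPS, or updated canonical tags? Search Console no longer offers a preferred domain setting, so you now have to check this on the site itself.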

Quick Scan: Global vs. Localized Drop?

Once you trust your data, the next step is to understand the scope of the decline. Is it site-wide, indicating a broad issue, or is it isolated to specific pages, keywords, or types of queries? This distinction is critical for narrowing down potential causes.

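- Segment your GA4 data: Look at organic traffic by landing page, device type, and geographic location. GA4 doesn't record search queries on its own. For a branded vs. non-branded split, use GSC data, either directly or through the Search Console integration in GA4.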

- Analyze GSC data: Use the "Performance" report to filter by page, query, and date ranges. Compare the affected period with a similar previous period (e.g., week-over-week, month-over-month, or year-over-year to account for seasonality).
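You can script both checks once you export the data. This sketch assumes rows exported from the GSC Performance report, with both the query and page dimensions, for two comparable date ranges. The field names (query, page, clicks_before, clicks_after) and the brand pattern are hypothetical. The script splits the change into branded and non-branded queries. It also measures how much of the total loss is concentrated in a handful of pages, which is one rough way to tell a localized drop from a site-wide one. GSC omits anonymized queries from query-level data, so these totals won't match your site-wide click count.

```python
import re
from collections import defaultdict

# Hypothetical brand pattern: replace with your own brand and product names.
BRAND = re.compile(r"\bacme\b", re.IGNORECASE)


def segment_change(rows):
    """Clicks before/after and relative change, split into branded vs non-branded queries."""
    totals = defaultdict(lambda: [0, 0])
    for r in rows:
        seg = "branded" if BRAND.search(r["query"]) else "non-branded"
        totals[seg][0] += r["clicks_before"]
        totals[seg][1] += r["clicks_after"]
    return {seg: (b, a, (a - b) / b if b else None) for seg, (b, a) in totals.items()}


def loss_concentration(rows, top_n=5):
    """Share of the total per-page click loss that comes from the top_n worst-hit pages.
    Values near 1.0 point to a localized problem; low values to a site-wide one."""
    per_page = defaultdict(int)
    for r in rows:
        per_page[r["page"]] += r["clicks_after"] - r["clicks_before"]
    losses = sorted(d for d in per_page.values() if d < 0)  # most negative first
    total_loss = -sum(losses)
    return -sum(losses[:top_n]) / total_loss if total_loss else 0.0


rows = [
    {"query": "acme shoes", "page": "/", "clicks_before": 100, "clicks_after": 95},
    {"query": "running shoes", "page": "/running", "clicks_before": 400, "clicks_after": 200},
    {"query": "trail shoes", "page": "/trail", "clicks_before": 100, "clicks_after": 90},
]
print(segment_change(rows))
print(round(loss_concentration(rows, top_n=1), 2))  # 0.93
```

In this toy data, non-branded clicks fall 42% while branded clicks barely move, and one page accounts for about 93% of the loss. That pattern points toward a page-level investigation rather than a site-wide one.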

A Conductor study highlights that Google makes over 5,000 algorithmic changes a year. Pinpointing whether a drop is due to a site-wide update or a localized issue can significantly guide your approach.

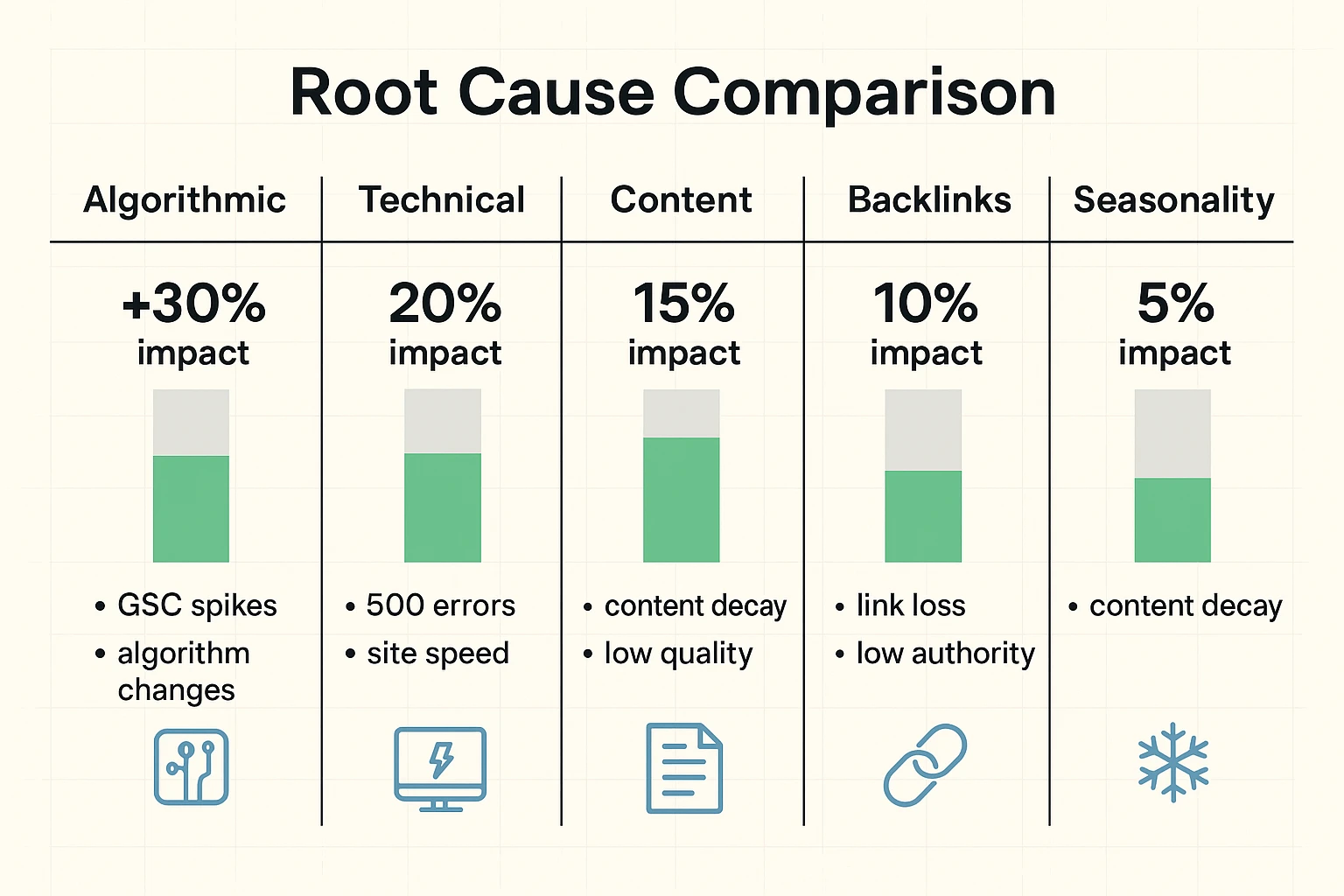

Phase 2: Decoding the Drop – Identifying the Root Cause

With accurate data and a clear understanding of the decline's scope, it's time for deeper analysis. Organic traffic drops rarely have a single, isolated cause. Often, it's a confluence of factors that amplify the impact. This phase requires methodical investigation across several key areas.

Algorithmic Updates: The Google Factor

Google's algorithms are constantly evolving, with significant core updates impacting search results. The official Google Search Central Blog and reputable SEO news sites like Search Engine Land are your go-to sources.

- Correlation with Updates: Check if your traffic drop aligns with any announced or widely observed algorithm updates. Use tools that track algorithm volatility.

- Understanding "Helpful Content": Since the March 2024 core update, Google's helpful content system has been folded into its core ranking systems. The E-E-A-T principles (Experience, Expertise, Authoritativeness, Trustworthiness) from Google's quality rater guidelines remain a useful lens for how content is assessed. Drops often correlate with content quality assessments. If your content isn't truly helpful, reliable, and people-first, or lacks clear author credentials, you might see a decline.

- AI Overviews (AIOs): This is a new and significant factor. Perplexity data shows AIOs can reduce organic CTR for relevant queries by an alarming 61%, and overall CTR by 47% when present. Industries like Real Estate, Restaurants, and Retail show exceptionally high vulnerability (206% to 273%) to organic traffic decline due to AIOs. This means even if your site ranks, users might not click through because the answer is provided directly in the SERP. We cover how to prepare your content for these shifts in our guide to AI Keyword Content Gap Analysis.
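To test the correlation with updates more rigorously, find the date the drop actually started and compare it with known rollout windows. The sketch below is a simple heuristic, not a formal change-point detection method. The 28-day baseline, 7-day window, and 20% threshold are arbitrary defaults, and the function names are hypothetical. The update entry is a placeholder; replace it with real dates from the Google Search Status Dashboard. An overlap suggests, but doesn't prove, that the update is involved.

```python
from datetime import date, timedelta
from statistics import mean


def drop_onset(daily, baseline_days=28, window=7, threshold=0.2):
    """First date on which clicks fall more than `threshold` below the baseline.

    `daily` is a date-sorted list of (date, clicks). A drop is confirmed when the
    mean of a `window`-day span is `threshold` below the mean of the
    `baseline_days` days before it. The first individually low day in that
    span is returned as the onset.
    """
    clicks = [c for _, c in daily]
    for end in range(baseline_days + window, len(daily) + 1):
        start = end - window
        base = mean(clicks[start - baseline_days:start])
        cutoff = base * (1 - threshold)
        if base > 0 and mean(clicks[start:end]) < cutoff:
            return next(d for d, c in daily[start:end] if c < cutoff)
    return None


def overlapping_updates(onset, updates, slack_days=3):
    """Names of updates whose (start, end) rollout window, widened by slack_days, contains onset."""
    slack = timedelta(days=slack_days)
    return [name for name, first, last in updates if first - slack <= onset <= last + slack]


# Placeholder entry only: fill in real rollout dates from the Google Search Status Dashboard.
updates = [("Example core update (placeholder)", date(2025, 2, 5), date(2025, 2, 20))]

start_day = date(2025, 1, 1)
daily = [(start_day + timedelta(days=i), 100 if i < 40 else 60) for i in range(70)]
onset = drop_onset(daily)
print(onset, overlapping_updates(onset, updates))
```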

Technical Glitches & Indexing Issues: The Site's Health

Technical SEO problems are silent killers of organic traffic. While often less visible than content issues, their impact can be profound.

- Crawl Errors & Indexing Issues:

- GSC "Page indexing" report (formerly "Index Coverage"): Look for significant increases in "Not indexed" pages and check the reasons Google lists for them.

- Robots.txt & Noindex Tags: Check if your robots.txt file is inadvertently blocking important sections of your site, or if noindex tags have been accidentally applied to live pages. These are common culprits after site migrations or major updates. For advanced detection, BenAI leverages AI to enhance AI Crawlability & Indexation strategies.

- Site Speed & Core Web Vitals: Google prioritizes user experience. Slow loading times, poor mobile responsiveness, and subpar Core Web Vitals (Largest Contentful Paint, Interaction to Next Paint, and Cumulative Layout Shift) can negatively impact rankings. Interaction to Next Paint replaced First Input Delay as a Core Web Vital in March 2024.

- Broken Links & Redirects: Internal broken links create dead ends for crawlers and users. Incorrect or chained redirects can waste crawl budget and dilute link equity.

- Server Issues: 5xx errors (server errors), prolonged downtime, or even an aggressive firewall blocking Googlebot can lead to rapid de-indexing.

- Hacking & Security: A site compromise can lead to content injection, redirects to spam sites, or even GSC manual actions. Always check the "Security & Manual Actions" report in GSC.
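The robots.txt and noindex checks are easy to automate for a list of important URLs. This sketch uses Python's standard urllib.robotparser and html.parser modules on content you've already fetched, and the function names are hypothetical. urllib.robotparser is simpler than Googlebot's parser: it has no wildcard support and applies the first matching rule. Verify anything it flags with the robots.txt report and the URL Inspection tool in GSC.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls, agent="Googlebot"):
    """URLs that the given robots.txt text disallows for `agent`.

    Caveat: urllib.robotparser does simple prefix matching and takes the first
    matching rule. Googlebot supports * and $ wildcards and applies the most
    specific rule, so treat the result as a first pass only.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [u for u in urls if not parser.can_fetch(agent, u)]


class _RobotsMeta(HTMLParser):
    """Collects the content of <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.values = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() in ("robots", "googlebot"):
            self.values.append((attrs.get("content") or "").lower())


def has_noindex(html: str, x_robots_tag: str = "") -> bool:
    """True if a robots meta tag or the X-Robots-Tag response header contains noindex."""
    meta = _RobotsMeta()
    meta.feed(html)
    return any("noindex" in v for v in meta.values + [x_robots_tag.lower()])


robots = "User-agent: *\nDisallow: /private/\n"
print(blocked_urls(robots, ["https://example.com/", "https://example.com/private/report"]))
print(has_noindex('<meta name="robots" content="noindex, follow">'))
```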

Content & User Experience: Are You Meeting User Needs?

Even without a technical issue, your content's relevance and presentation can lead to stagnation or decline.

- Content Decay: Previously high-performing content can lose relevance over time. This isn't just about freshness; it's about whether your content still addresses current user intent and covers topics comprehensively, especially in an era where 60% of all searches now end without a click.

- Duplicate Content & Cannibalization: Identical or near-identical content across multiple URLs can confuse search engines. Keyword cannibalization occurs when multiple pages on your site target the same keywords, competing against each other rather than external competitors.

- On-page Optimization: Weak title tags, poor heading structures, or a lack of schema markup can hinder search engines' understanding of your content. Missing meta descriptions can make your search snippets less compelling and lower CTR. Implementing AI Schema Markup Automation can ensure your content is properly structured for optimal indexing.

- User Engagement Signals: High bounce rates, low dwell times, and poor CTR aren't direct ranking factors. However, they can indicate that your content isn't satisfying user intent, a problem that can indirectly lead to lower rankings.
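You can screen for keyword cannibalization in a GSC export that includes both the query and page dimensions. This is a minimal sketch with hypothetical field names and thresholds. It flags queries where two or more URLs each collect at least 10% of the impressions. Multiple URLs ranking for one query isn't always a problem, since they may serve different intents, so treat the output as a review list, not a verdict.

```python
from collections import defaultdict


def cannibalization_candidates(rows, min_share=0.1, min_impressions=100):
    """Queries where two or more URLs each get at least `min_share` of the impressions.

    `rows` are dicts with "query", "page", and "impressions" keys, e.g. from a
    Search Console export with both the query and page dimensions.
    Returns {query: [(page, share), ...]} with the largest share first.
    """
    by_query = defaultdict(lambda: defaultdict(int))
    for r in rows:
        by_query[r["query"]][r["page"]] += r["impressions"]

    flagged = {}
    for query, pages in by_query.items():
        total = sum(pages.values())
        if total < min_impressions:
            continue  # too little data to judge
        shares = sorted(
            ((page, n / total) for page, n in pages.items() if n / total >= min_share),
            key=lambda item: item[1],
            reverse=True,
        )
        if len(shares) >= 2:
            flagged[query] = shares
    return flagged


rows = [
    {"query": "crm pricing", "page": "/pricing", "impressions": 600},
    {"query": "crm pricing", "page": "/blog/crm-costs", "impressions": 400},
    {"query": "crm demo", "page": "/demo", "impressions": 500},
    {"query": "crm demo", "page": "/blog/demo-tips", "impressions": 20},
]
print(cannibalization_candidates(rows))
```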

Backlinks & Off-Page Factors: External Signals

The external signals pointing to your site remain crucial.

- Lost Backlinks: Have you lost high-quality backlinks from authoritative sites? Use a backlink analysis tool to monitor your profile changes.

- Toxic Backlinks & Penalties: An influx of spammy or unnatural backlinks can trigger a manual penalty from Google or a devaluing by the algorithm.

- Competitive Shifts: Are your competitors investing heavily in their SEO, gaining high-quality links, or publishing superior content? The competitive landscape is never static.
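Tracking lost links comes down to comparing two exports of referring domains from your backlink tool. The sketch below assumes each export maps a domain to whatever authority score that tool provides (scales differ between vendors), and the function names are hypothetical. It normalizes domains so that www and non-www entries match. It then lists the domains that stopped linking, strongest first.

```python
def _normalize(domain: str) -> str:
    """Lowercase and drop a leading 'www.' so snapshots from different tools line up."""
    d = domain.strip().lower()
    return d[4:] if d.startswith("www.") else d


def lost_referring_domains(before: dict, after: dict, min_score: float = 0.0):
    """Referring domains present in `before` but absent from `after`, strongest first.

    Both arguments map domain -> score, where the score is whatever authority
    metric your backlink tool exports (scales differ between tools).
    """
    still_linking = {_normalize(d) for d in after}
    lost = {}
    for domain, score in before.items():
        key = _normalize(domain)
        if key not in still_linking and score >= min_score:
            lost[key] = max(score, lost.get(key, score))
    return sorted(lost.items(), key=lambda kv: kv[1], reverse=True)


before = {"www.News.example": 80, "blog.example": 30, "forum.example": 55, "directory.example": 70}
after = {"news.example": 79, "forum.example": 50}
print(lost_referring_domains(before, after))  # [('directory.example', 70), ('blog.example', 30)]
```

The list shows only which links disappeared. Whether a lost link is worth trying to re-earn remains a judgment call.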

Seasonality & Search Intent Shifts: Market Dynamics

Sometimes, a perceived drop isn't an issue with your site at all, but rather a natural fluctuation in market demand or evolving user behavior.

- Seasonality: Use Google Trends to analyze historical search interest for your primary keywords. Many industries experience predictable peaks and valleys.

- Changes in User Intent: How people search and what they expect from SERPs change over time. Are users now looking for different types of information or comparing products differently? For example, the intent behind a generic search for "birds of prey" can shift dramatically between animal enthusiasts and fans of a movie with that title, depending on current trends.
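One way to separate seasonality from a genuine decline is to compare this year's change with the change over the same calendar periods last year. The sketch below is a minimal version of that idea. It assumes last year's periods reflect normal seasonal demand, with no site changes or penalties at the time. The function name and the example figures are hypothetical, and dividing the two growth factors is one simple adjustment among several.

```python
def seasonal_check(curr_before, curr_after, prev_before, prev_after):
    """Compare this year's period-over-period change in clicks with the change
    over the same calendar periods last year.

    Returns (observed, seasonal, excess) as fractions. `excess` is the part of
    the observed change that last year's pattern does not explain.
    """
    observed = curr_after / curr_before - 1
    seasonal = prev_after / prev_before - 1
    excess = (1 + observed) / (1 + seasonal) - 1
    return observed, seasonal, excess


observed, seasonal, excess = seasonal_check(10_000, 7_000, 9_000, 7_200)
print(f"observed {observed:.1%}, seasonal {seasonal:.1%}, excess {excess:.1%}")
# observed -30.0%, seasonal -20.0%, excess -12.5%
```

Here a 30% drop looks alarming. Last year's pattern explains most of it, though, leaving a 12.5% decline that still warrants investigation.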

Phase 3: Crafting Your Recovery Strategy

Once you've methodically diagnosed the root causes, the next critical step is to develop a prioritized and actionable recovery plan. This isn't just about fixing problems; it's about making strategic decisions that yield the highest impact.

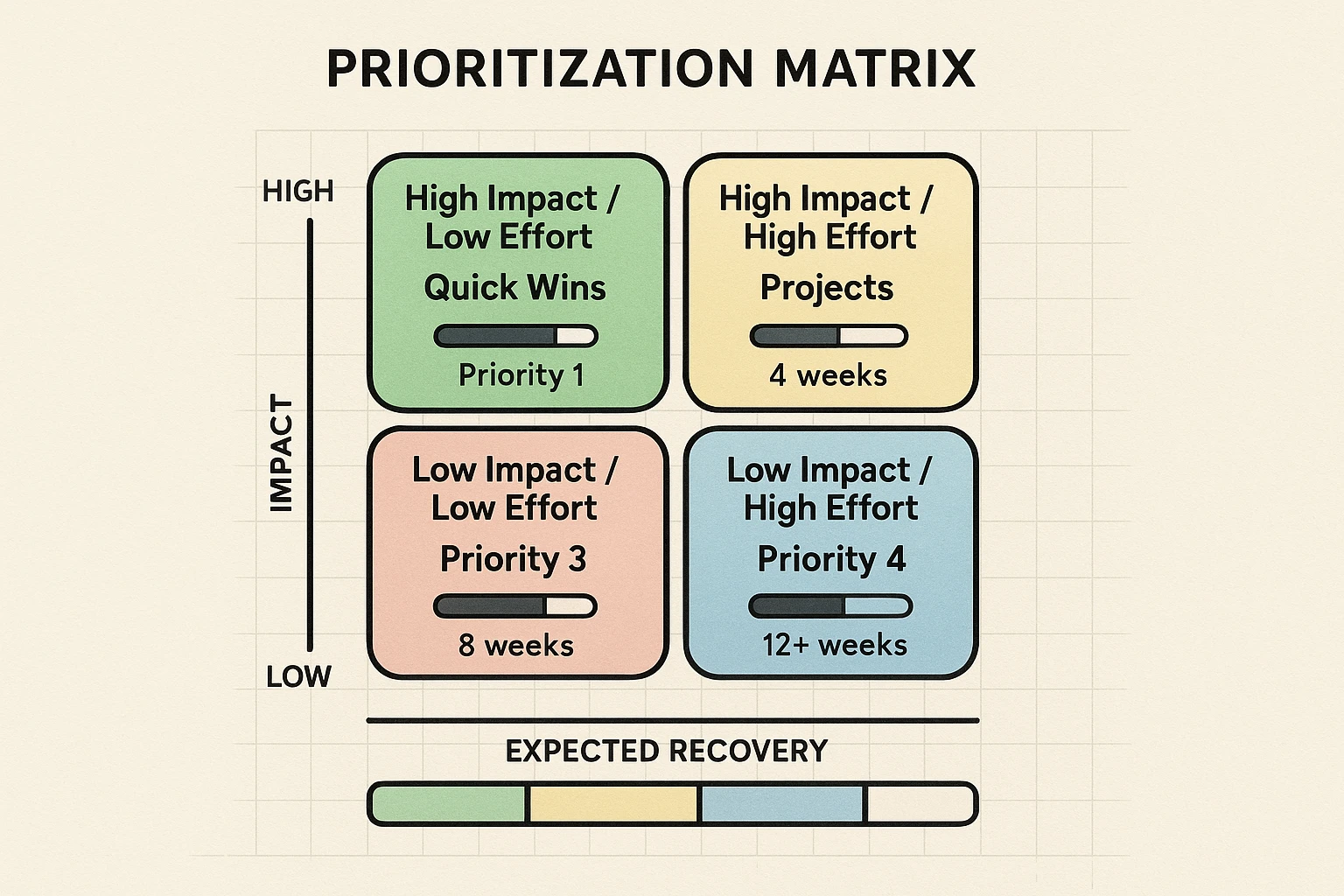

Prioritization Matrix: Focus on Impact

You've likely found multiple issues. Attempting to fix everything at once is inefficient and often ineffective. A prioritization matrix helps you apply your resources where they matter most.

- High Impact, Low Effort: Tackle these first. These are often quick technical fixes (e.g., correcting a robots.txt entry, fixing critical broken internal links, or updating outdated facts on a high-value page).

- High Impact, High Effort: These require significant resources but promise substantial returns (e.g., content overhaul for E-E-A-T, major technical SEO audit and implementation like improving Core Web Vitals, or a comprehensive backlink clean-up).

- Low Impact, Low Effort: Address these if time and resources allow, but don't let them derail your focus from high-impact items.

- Low Impact, High Effort: De-prioritize or defer these. They're rarely worth the investment during a recovery phase.
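If your issue list is long, you can apply the matrix mechanically once each issue has rough scores. In this sketch, the 1-5 impact and effort scales, the cutoff of 3, and all names are hypothetical. The scores are your own estimates, and the code only sorts them into the four quadrants described above.

```python
from dataclasses import dataclass


@dataclass
class Issue:
    name: str
    impact: int  # 1-5: your estimate of the traffic at stake (hypothetical scale)
    effort: int  # 1-5: your estimate of the work required (hypothetical scale)


QUADRANTS = [
    "High impact, low effort",   # do first
    "High impact, high effort",  # plan and resource
    "Low impact, low effort",    # if time allows
    "Low impact, high effort",   # defer
]


def quadrant(issue: Issue, cutoff: int = 3) -> int:
    """Index into QUADRANTS; scores at or above `cutoff` count as high."""
    high_impact = issue.impact >= cutoff
    high_effort = issue.effort >= cutoff
    if high_impact:
        return 1 if high_effort else 0
    return 3 if high_effort else 2


def prioritize(issues):
    """Sort by quadrant, then by impact (descending) and effort (ascending)."""
    return sorted(issues, key=lambda i: (quadrant(i), -i.impact, i.effort))


backlog = [
    Issue("Rewrite thin articles for E-E-A-T", impact=5, effort=5),
    Issue("Remove robots.txt block on /blog/", impact=5, effort=1),
    Issue("Add missing image alt text", impact=2, effort=1),
    Issue("Migrate to a new CMS", impact=2, effort=5),
]
for issue in prioritize(backlog):
    print(f"{QUADRANTS[quadrant(issue)]}: {issue.name}")
```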

Actionable Playbooks: Your Roadmap to Recovery

Your recovery strategy needs concrete steps. Here are playbooks for common areas:

- Technical SEO Recovery:

- Crawlability/Indexability: Review GSC's Page indexing report (formerly Index Coverage) daily. Submit sitemaps after major changes. Ensure all critical pages are crawlable and indexable.

- Site Health: Implement identified fixes for site speed, mobile-friendliness, and Core Web Vitals. Conduct regular technical audits (BenAI offers solutions for No-Code AI Technical SEO Automation to streamline this).

- Schema Markup: Ensure structured data is correctly implemented for key content types to help search engines understand your content better. Explore AI Schema Markup Automation for efficiency.

- Content Refresh & Optimization Workflow:

- E-E-A-T First: Audit your content for helpfulness, originality, and clear author expertise. Update outdated information, add recent statistics, and strengthen your internal linking strategy. AI content agents can help with this, and we cover the approach in our guide to Automated On-Page SEO with AI.

- User Intent Adaptation: Re-evaluate keywords against current user intent, especially concerning AIOs and zero-click searches. Can your content answer questions directly while still prompting clicks for more in-depth information?

- Content Gap Analysis: Identify topics or angles your competitors cover but you don't. Use this to create new, valuable content.

- Backlink Audit & Outreach:

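- Disavow Harmful Links: Use Google's disavow links tool in Search Console only for clearly toxic backlinks. This is typically warranted when you have a manual action for unnatural links or strong evidence of a link scheme pointing at your site.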

- Re-earn Lost Links: Identify crucial lost backlinks and reach out to webmasters to request reinstatement.

- Targeted Outreach: Proactively build high-quality, relevant backlinks from authoritative sources.
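If you do need to disavow, the file you upload is plain text. Each line holds one full URL or one domain: entry, and lines starting with # are treated as comments. This sketch builds such a file from a reviewed list and removes duplicates. The function name and example domains are hypothetical, and you should still check the output by hand before uploading.

```python
def build_disavow(domains=(), urls=(), comment=""):
    """Return the text of a disavow file: an optional '#' comment, then one
    'domain:' line per domain, then one full URL per line."""
    lines = [f"# {comment}"] if comment else []
    lines += [f"domain:{d}" for d in sorted({d.strip().lower() for d in domains if d.strip()})]
    lines += sorted({u.strip() for u in urls if u.strip()})
    return "\n".join(lines) + "\n"


print(build_disavow(
    domains=["Spam.example", "spam.example", "links.example"],
    urls=["https://bad.example/page"],
    comment="Reviewed link-scheme domains",
), end="")
```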

Communicating the Diagnosis & Plan

Transparency is key, especially with clients or internal stakeholders. Communicate your findings clearly, outlining the root causes, your prioritized action plan, estimated timelines, and expected impact. Focus on data, not speculation.

Phase 4: Long-Term Prevention: Building Resilience

While recovery is urgent, the ultimate goal is to prevent future drops and build a robust, AI-first online presence. This requires a shift from reactive problem-solving to proactive monitoring and strategic adaptation.

Continuous Monitoring Best Practices

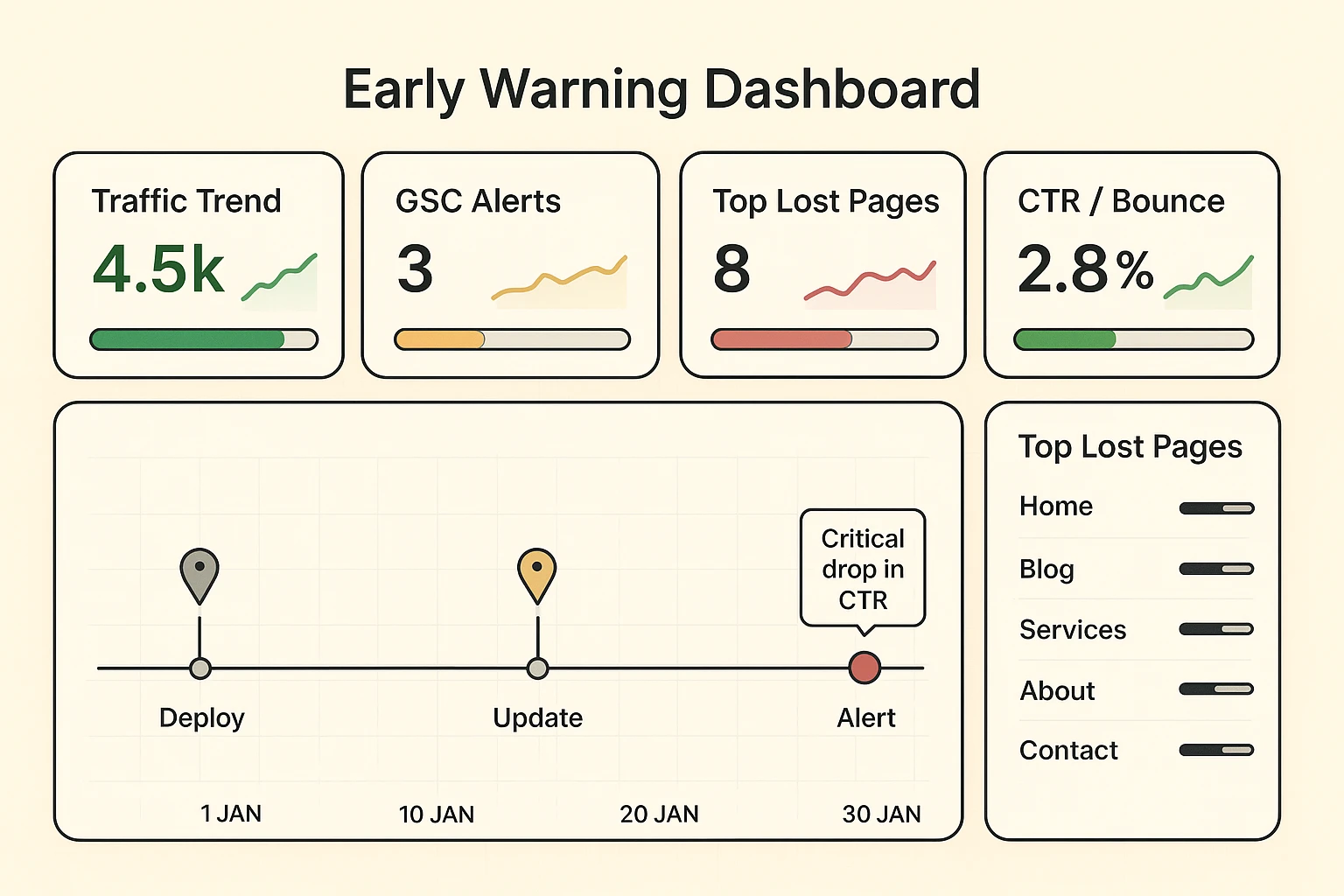

Set up automated anomaly detection and regular reporting to catch issues early.

- Google Search Console Alerts: Configure GSC to send emails for new manual actions, security issues, or critical errors.

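- Custom GA4 Alerts: Set up custom insights in GA4 to notify you of significant drops in organic traffic or in the performance of key landing pages. GA4 doesn't track keyword rankings, so monitor those through GSC or a rank-tracking tool.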

- Third-Party Tools: Many SEO tools offer custom monitoring dashboards and alerts for rankings, backlinks, and technical health.

- Internal Reporting Dashboards: Create a centralized dashboard that correlates traffic trends, GSC alerts, and recent website deployments. This offers an early warning system and helps trace potential causes quickly.
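For an internal dashboard, a basic anomaly rule can flag days when organic traffic falls well below its recent level. The sketch below uses one simple approach, a trailing z-score, on a daily series of organic sessions or clicks. The 28-day lookback and 3-standard-deviation threshold are arbitrary defaults, and the function name is hypothetical. The rule ignores day-of-week patterns, so sites with strong weekday/weekend swings may want to compare each day against the same weekday instead.

```python
from statistics import mean, stdev


def drop_alerts(values, lookback=28, z_threshold=3.0):
    """Indices of days whose value is more than `z_threshold` standard deviations
    below the mean of the preceding `lookback` days. Only drops are flagged."""
    flagged = []
    for i in range(lookback, len(values)):
        window = values[i - lookback:i]
        mu, sigma = mean(window), stdev(window)
        if sigma == 0:
            is_drop = values[i] < mu  # flat history: any dip is unusual
        else:
            is_drop = (values[i] - mu) / sigma < -z_threshold
        if is_drop:
            flagged.append(i)
    return flagged


sessions = [100, 110] * 14 + [50, 104]
print(drop_alerts(sessions))  # [28]
```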

Regular Content Audits & Refreshes

Content isn't a "set it and forget it" asset. Implement a schedule for auditing and refreshing your content to maintain relevance and E-E-A-T. BenAI's services for reducing manual SEO workflows with AI can significantly automate this process.
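A simple way to build the refresh schedule is to queue pages that are both old and losing clicks. This sketch assumes you can join each URL's last-updated date (for example, from your CMS) with clicks for two periods from GSC. The field names, the 365-day age limit, and the 20% decline threshold are hypothetical choices.

```python
from datetime import date


def refresh_queue(pages, today, max_age_days=365, min_decline=0.2):
    """Pages not updated for more than `max_age_days` whose clicks fell by at least
    `min_decline` (as a fraction) versus the previous period, biggest loss first.

    Each page is a dict with hypothetical keys: "url", "last_updated" (a date,
    e.g. from your CMS), "clicks_prev" and "clicks_curr" (e.g. from Search Console).
    """
    queue = []
    for p in pages:
        if p["clicks_prev"] == 0:
            continue  # no baseline to compare against
        age_days = (today - p["last_updated"]).days
        decline = 1 - p["clicks_curr"] / p["clicks_prev"]
        if age_days > max_age_days and decline >= min_decline:
            queue.append((p["url"], age_days, p["clicks_prev"] - p["clicks_curr"]))
    return sorted(queue, key=lambda row: row[2], reverse=True)


pages = [
    {"url": "/guide-a", "last_updated": date(2023, 1, 10), "clicks_prev": 1000, "clicks_curr": 600},
    {"url": "/news-b", "last_updated": date(2025, 3, 1), "clicks_prev": 800, "clicks_curr": 300},
    {"url": "/guide-c", "last_updated": date(2022, 5, 1), "clicks_prev": 200, "clicks_curr": 190},
    {"url": "/guide-d", "last_updated": date(2023, 6, 1), "clicks_prev": 500, "clicks_curr": 200},
]
for url, age, lost in refresh_queue(pages, today=date(2025, 6, 1)):
    print(url, age, lost)
```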

Importance of a Diversified Traffic Strategy

While organic search is vital, relying solely on one channel is risky. Diversify your traffic sources through paid ads, social media, email marketing, and direct engagement to build a more resilient online presence.

Staying Updated with Google's Guidelines & Industry Trends

The SEO landscape is constantly shifting. Regularly consume content from official Google sources, attend industry webinars, and follow thought leaders. Understanding trends like the impact of AI Overviews and the increasing prevalence of zero-click searches (60% of all searches now end without a click) is critical for long-term health. For a comprehensive overview, refer to our AI SEO Automation Guide.

Frequently Asked Questions

Q1: How quickly should I expect to recover organic traffic after implementing fixes?

Recovery timelines vary significantly based on the severity of the issue, the nature of the fix, and how quickly Google re-crawls and re-indexes your site. Minor technical fixes might show improvements within days or weeks. However, recovering from a major algorithmic penalty or a large content overhaul could take months. Continuous monitoring and patience are key.

Q2: My organic traffic dropped, but my conversions increased. What does this mean?

This is an interesting, and often positive, scenario. Perplexity data shows that some publishers have seen conversion value per visitor increase (e.g., revenue up 10.9% despite a 12.7% traffic drop). This suggests that while you might be getting less overall traffic, the traffic you are receiving is more qualified and intentional. It could be due to more precise targeting, a shift in user intent, or simply Google sending higher-quality leads. Focus on optimizing for these higher-converting users.
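To see how a traffic drop and a revenue increase combine, divide the revenue factor by the traffic factor. With the figures above, revenue per visitor changes by (1 + 0.109) / (1 - 0.127) - 1, roughly a 27% increase, assuming both figures cover the same periods. The helper below is a hypothetical one-liner that does the same calculation.

```python
def per_visitor_change(traffic_change: float, revenue_change: float) -> float:
    """Relative change in revenue per visitor, given relative changes in traffic
    and revenue expressed as fractions (e.g. -0.127 for a 12.7% drop)."""
    return (1 + revenue_change) / (1 + traffic_change) - 1


print(f"{per_visitor_change(-0.127, 0.109):.1%}")  # 27.0%
```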

Q3: How do I differentiate between an algorithmic drop and a manual penalty?

An algorithmic drop usually presents as a broad decline across many pages or keywords, often correlating with an announced Google update. A manual penalty, however, will be explicitly stated in your Google Search Console under "Security & Manual Actions." Manual actions are direct interventions by Google's spam team and require specific actions to rectify.

Q4: Should I use Google Search Console or Google Analytics 4 for diagnosing drops?

Both are indispensable and should be used in tandem. GSC is your source of truth for how Google sees your site (impressions, clicks, crawl errors, indexing status, manual actions). GA4 provides insights into user behavior after visitors land on your site (engagement, conversions, which GA4 now calls key events, and bounce rate). GSC helps you understand why you might be losing visibility, while GA4 helps you understand the quality of the traffic you're getting.

Q5: Can AI help prevent or diagnose traffic drops?

Absolutely. AI can significantly enhance your ability to prevent and diagnose traffic drops. AI-powered tools can:

- Automate monitoring: Rapidly detect anomalies in traffic patterns.

- Improve technical SEO: Identify crawlability issues, optimize site structure, and suggest schema markup improvements. We leverage AI for Automated Internal Linking Strategies.

- Content optimization: Analyze content gaps, suggest freshness updates, and ensure E-E-A-T compliance.

- Competitive analysis: Monitor competitor movements and identify shifts in the SERP landscape.

At BenAI, our core mission is to help businesses become "AI-first," building proven AI systems and offering tailored implementations to drive growth and efficiency.

Empower Your Decision with BenAI

Navigating the complexities of organic traffic declines requires more than just tools—it demands expertise, a methodical approach, and a partner who understands the dynamic nature of search. At BenAI, we empower businesses with the insights and solutions to not only recover from traffic drops but to build a robust, future-proof digital presence.

If you're facing a significant organic traffic decline and need authoritative guidance to interpret your data and implement an effective recovery strategy, we invite you to connect with our experts. Let's transform your challenge into an opportunity for growth and resilience.

